
A newly developed user interface in K-Glass 2, the “i-Mouse,” tracks the user’s gaze and lets the user operate the device and connect to the Internet with eye blinks such as winks. This low-power interface improves the user experience of smart glasses while supporting augmented reality and long battery life.

Smart glasses are wearable computers that are likely to drive the growth of the Internet of Things. However, currently available smart glasses face several obstacles to commercialization, such as short battery life and low energy efficiency. In addition, glasses that rely on voice commands have raised privacy concerns.

A research team led by Professor Hoi-Jun Yoo of the Electrical Engineering Department at the Korea Advanced Institute of Science and Technology (KAIST) has recently developed an upgraded model of the K-Glass (http://www.eurekalert.org/pub_releases/2014-02/tkai-kdl021714.php) called “K-Glass 2.”

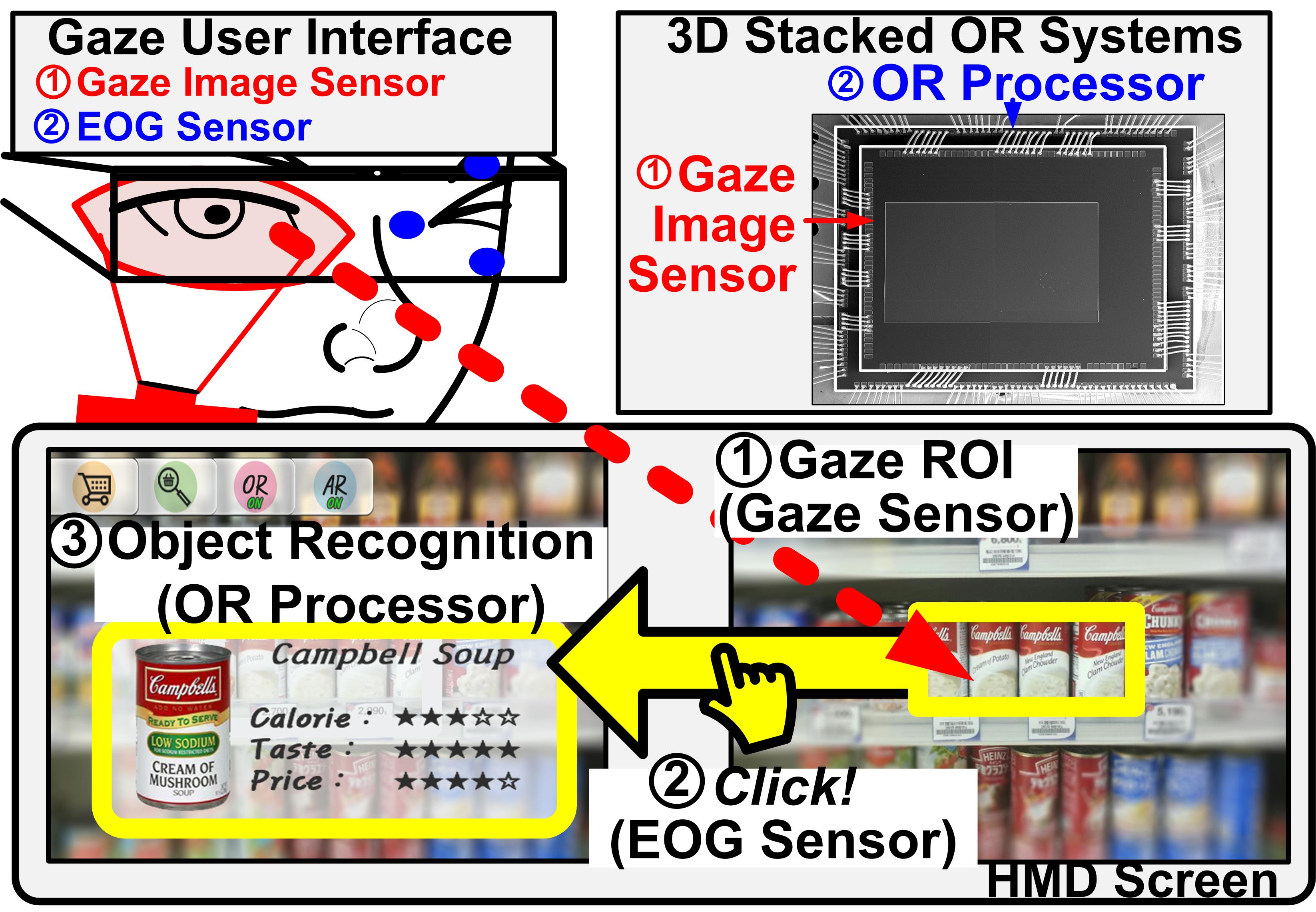

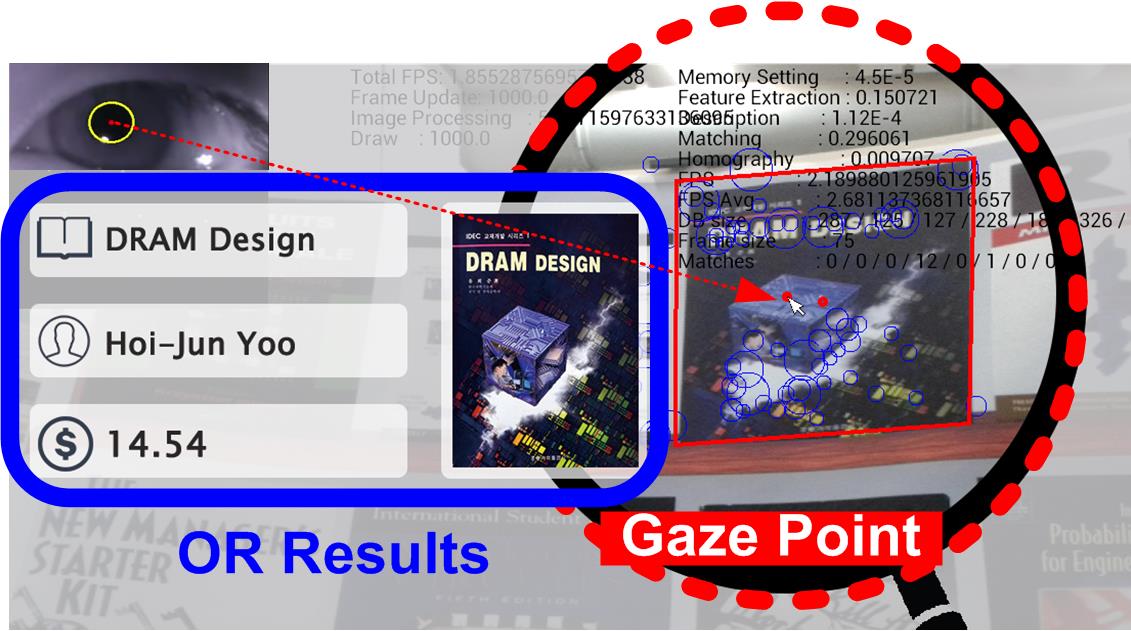

K-Glass 2 tracks the user’s eye movements to point a cursor at computer icons or objects on the Internet, and it uses winks as commands. The researchers call this interface the “i-Mouse”; it removes the need to use hands or voice to control a mouse or touchpad. Like its predecessor, K-Glass 2 employs augmented reality, displaying relevant, complementary information in real time, in the form of text, 3D graphics, images, and audio, over the target objects that users select.
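The gaze-to-cursor, wink-to-click interaction described above can be illustrated with a minimal Python sketch. It assumes the glasses already deliver, for each sensor sample, a gaze point in display pixels and a boolean wink flag; the `Icon` class and the functions `icon_under_cursor` and `handle_sample` are hypothetical names for illustration only and are not part of K-Glass 2 or its published design.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Icon:
    """A clickable screen element (hypothetical), as a box in display pixels."""
    name: str
    x: int  # left edge
    y: int  # top edge
    w: int  # width
    h: int  # height

    def contains(self, px: int, py: int) -> bool:
        return self.x <= px < self.x + self.w and self.y <= py < self.y + self.h


def icon_under_cursor(icons: List[Icon], cursor_x: int, cursor_y: int) -> Optional[Icon]:
    """Return the first icon whose box contains the cursor, or None."""
    for icon in icons:
        if icon.contains(cursor_x, cursor_y):
            return icon
    return None


def handle_sample(icons: List[Icon], gaze_x: int, gaze_y: int, wink: bool) -> Optional[str]:
    """Process one (assumed) sensor sample: the cursor sits at the gaze point,
    and a wink clicks whatever icon lies under it. Returns the clicked name."""
    target = icon_under_cursor(icons, gaze_x, gaze_y)
    if wink and target is not None:
        return target.name
    return None


icons = [Icon("mail", 0, 0, 64, 64), Icon("browser", 100, 0, 64, 64)]
print(handle_sample(icons, 120, 30, wink=True))   # -> browser
print(handle_sample(icons, 120, 30, wink=False))  # -> None (gaze only, no click)
print(handle_sample(icons, 80, 30, wink=True))    # -> None (wink over empty space)
```

Keeping pointing (gaze) and selection (wink) as separate inputs means that simply looking at an icon never triggers it; only the explicit wink does.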

The research results were presented, and K-Glass 2’s operation was successfully demonstrated on-site, at the 2015 Institute of Electrical and Electronics Engineers (IEEE) International Solid-State Circuits Conference (ISSCC), held February 23-25, 2015, in San Francisco. The paper was titled “A 2.71nJ/Pixel 3D-Stacked Gaze-Activated Object Recognition System for Low-power Mobile HMD Applications” (http://ieeexplore.ieee.org/Xplore/home.jsp).

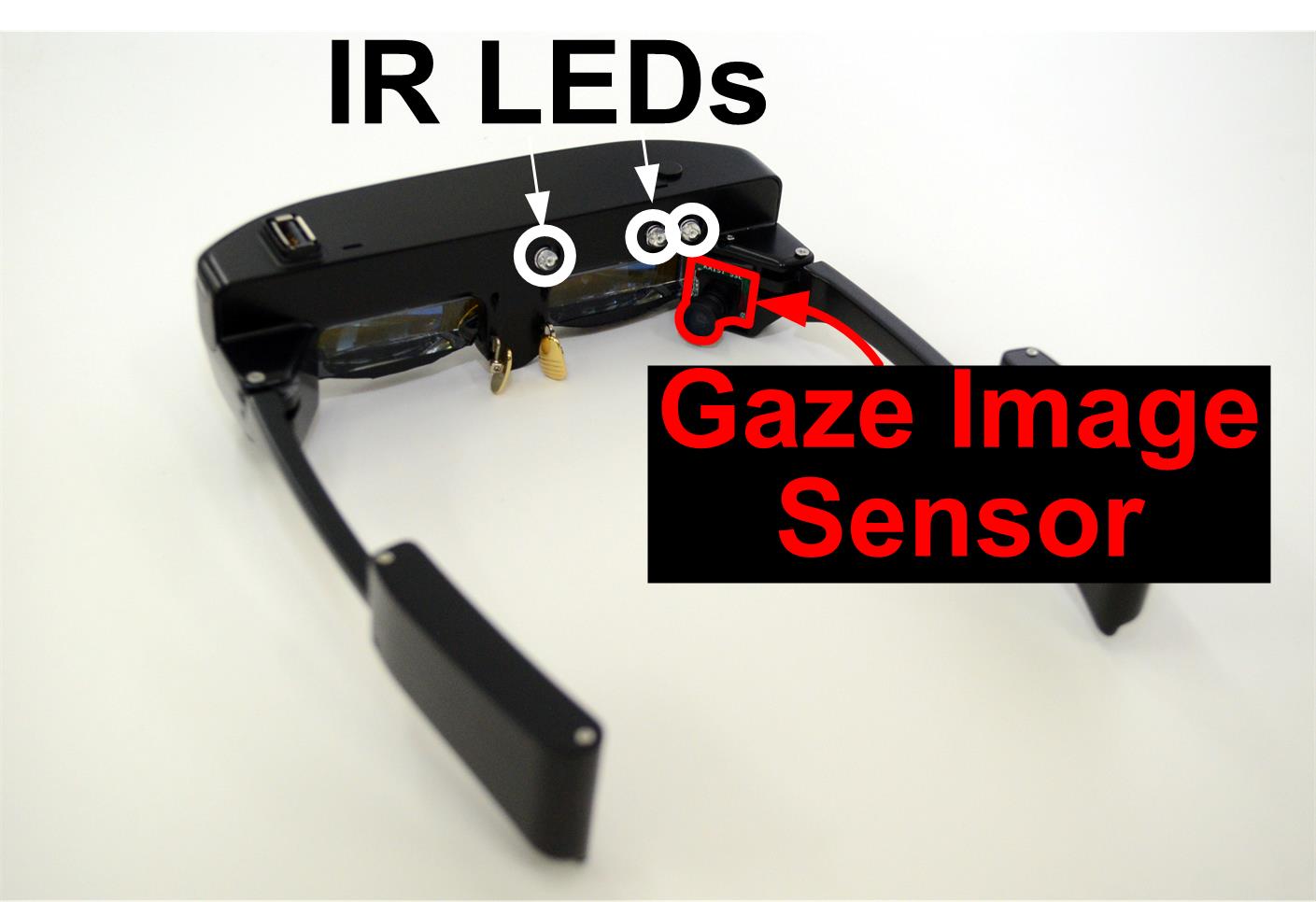

The i-Mouse is a new user interface for smart glasses in which the gaze-image sensor (GIS) and the object recognition processor (ORP) are stacked vertically to form a single small chip. Three infrared LEDs (light-emitting diodes) built into K-Glass 2 shine light into the user’s eyes; as the user glances over the display screen, the GIS detects the eyes’ focal point and estimates where the gaze is likely to fall. An electro-oculography (EOG) sensor embedded in the nose pads then reads the user’s eyelid movements, such as winks, to click the selection. Notably, the ORP is designed to process only the region of interest (ROI) that the user selects, which substantially extends battery life. The chip consumes 3.4 times less power than previous ORP chips, averaging 75 milliwatts (mW), which helps K-Glass 2 run for almost 24 hours on a single charge.
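The power saving from restricting recognition to the gaze-selected ROI can be illustrated with a simplified software sketch. It assumes the recognition workload scales with the number of pixels processed, and it uses a hypothetical 640×480 frame and 160×120 ROI; neither size comes from the paper, and the real ORP is dedicated hardware, not this code. The names `gaze_roi`, `crop`, and `processed_fraction` are hypothetical.

```python
from typing import List, Tuple

Frame = List[List[int]]          # grayscale image stored as rows of pixels
Box = Tuple[int, int, int, int]  # (x0, y0, x1, y1), with x1/y1 exclusive


def gaze_roi(width: int, height: int, gaze_x: int, gaze_y: int,
             roi_w: int, roi_h: int) -> Box:
    """Box of size roi_w x roi_h centred on the gaze point, shifted so it
    stays inside a width x height frame."""
    roi_w, roi_h = min(roi_w, width), min(roi_h, height)
    x0 = min(max(gaze_x - roi_w // 2, 0), width - roi_w)
    y0 = min(max(gaze_y - roi_h // 2, 0), height - roi_h)
    return x0, y0, x0 + roi_w, y0 + roi_h


def crop(frame: Frame, box: Box) -> Frame:
    """Copy out only the pixels inside the box; nothing else is passed on."""
    x0, y0, x1, y1 = box
    return [row[x0:x1] for row in frame[y0:y1]]


def processed_fraction(box: Box, width: int, height: int) -> float:
    """Share of the full frame that the recognizer actually has to touch."""
    x0, y0, x1, y1 = box
    return (x1 - x0) * (y1 - y0) / (width * height)


# Hypothetical 640x480 camera frame and 160x120 ROI (not from the paper).
W, H = 640, 480
frame = [[0] * W for _ in range(H)]
box = gaze_roi(W, H, gaze_x=600, gaze_y=20, roi_w=160, roi_h=120)
roi = crop(frame, box)
print(box)                            # -> (480, 0, 640, 120)
print(processed_fraction(box, W, H))  # -> 0.0625
```

Under these assumptions, the recognizer handles only 1/16 of the frame's pixels. The actual saving on the chip depends on its hardware design and is not derived here.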

Professor Yoo said, “The smart glass industry will surely grow as we see the Internet of Things become commonplace in the future. In order to expedite the commercial use of smart glasses, improving the user interface (UI) and the user experience (UX) is just as important as developing compact, low-power wearable platforms with high energy efficiency. We have demonstrated such advancement through our K-Glass 2. Using the i-Mouse, K-Glass 2 can provide complicated augmented reality with low power through eye clicking.”

Professor Yoo and his doctoral student, Injoon Hong, conducted this research under the sponsorship of the Brain-mimicking Artificial Intelligence Many-core Processor project by the Ministry of Science, ICT and Future Planning in the Republic of Korea.

YouTube link:

https://www.youtube.com/watch?v=JaYtYK9E7p0&list=PLXmuftxI6pTW2jdIf69teY7QDXdI3Ougr

Picture 1: K-Glass 2

K-Glass 2 detects eye movements and lets users click computer icons by winking.

Picture 2: Object Recognition Processor Chip

This picture shows a gaze-activated object-recognition system.

Picture 3: Augmented Reality Integrated into K-Glass 2

Users receive additional visual information overlaid on the objects they select.