PICASSO Technique Drives Biological Molecules into Technicolor

The new imaging approach raises the number of imaging colors from four to more than 15 for mapping overlapping proteins

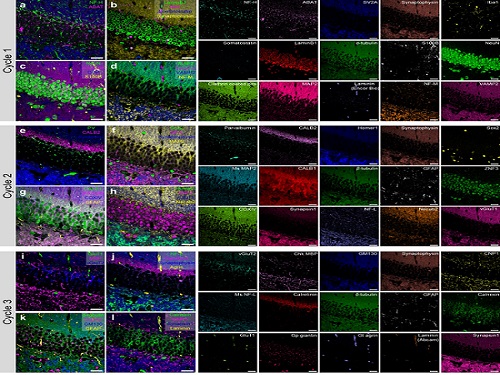

Pablo Picasso’s surreal cubist artistic style shifted common features into unrecognizable scenes, but a new imaging approach bearing his name may elucidate the most complicated subject: the brain. Employing artificial intelligence to clarify the spectral blending of the tiny molecules used to stain specific proteins and other items of research interest, the PICASSO technique allows researchers to use more than 15 colors to image and parse overlapping proteins.

The PICASSO developers, based in Korea, published their approach on May 5 in Nature Communications.

Fluorophores, the staining molecules, emit specific colors when excited by light, but if more than four fluorophores are used, their emitted colors overlap and blend. Researchers previously developed techniques to correct this spectral overlap by precisely defining the matrix of mixed and unmixed images. This measurement depends on reference spectra, found by identifying clear images of only one fluorophore-stained specimen or of multiple, identically prepared specimens that each contain only a single fluorophore.

“Such reference spectra measurement could be complicated to perform in highly heterogeneous specimens, such as the brain, due to the highly varied emission spectra of fluorophores depending on the subregions from which the spectra were measured,” said co-corresponding author Young-Gyu Yoon, professor in the School of Electrical Engineering at KAIST. He explained that the subregions would each need their own spectra reference measurements, making for an inefficient, time-consuming process. “To address this problem, we developed an approach that does not require reference spectra measurements.”

The approach is the “Process of ultra-multiplexed Imaging of biomolecules viA the unmixing of the Signals of Spectrally Overlapping fluorophores,” also known as PICASSO. Ultra-multiplexed imaging refers to visualizing the numerous individual components of a unit. Like a cinema multiplex in which each theater plays a different movie, each protein in a cell has a different role. By staining with fluorophores, researchers can begin to understand those roles.

“We devised a strategy based on information theory; unmixing is performed by iteratively minimizing the mutual information between mixed images,” said co-corresponding author Jae-Byum Chang, professor in the Department of Materials Science and Engineering, KAIST. “This allows us to get away with the assumption that the spatial distribution of different proteins is mutually exclusive and enables accurate information unmixing.”
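The idea Chang describes can be illustrated with a minimal two-channel sketch. It is not the authors' implementation: the synthetic images, the one-directional mixing model, the histogram-based mutual information estimate, and the grid search over a hypothetical leak coefficient `alpha` are all assumptions made for illustration, whereas PICASSO itself is described as iterative.

```python
import numpy as np


def mutual_information(x, y, bins=16):
    """Histogram estimate of the mutual information (in nats) between two images."""
    joint, _, _ = np.histogram2d(x.ravel(), y.ravel(), bins=bins)
    pxy = joint / joint.sum()
    px = pxy.sum(axis=1, keepdims=True)  # marginal of x, shape (bins, 1)
    py = pxy.sum(axis=0, keepdims=True)  # marginal of y, shape (1, bins)
    nonzero = pxy > 0
    return float(np.sum(pxy[nonzero] * np.log(pxy[nonzero] / (px @ py)[nonzero])))


def unmix_two_channels(mixed, reference, alphas):
    """Return the alpha whose subtraction leaves the least shared information."""
    scores = [mutual_information(mixed - a * reference, reference) for a in alphas]
    return float(alphas[int(np.argmin(scores))])


# Synthetic stand-ins for two protein distributions (assumed, not real data).
rng = np.random.default_rng(0)
protein_a = rng.random((256, 256))
protein_b = rng.random((256, 256))

# Assumed mixing model: channel 1 sees protein A plus a leak of protein B.
true_leak = 0.4
channel_1 = protein_a + true_leak * protein_b
channel_2 = protein_b

alphas = np.linspace(0.0, 1.0, 21)
estimated_leak = unmix_two_channels(channel_1, channel_2, alphas)
unmixed_a = channel_1 - estimated_leak * channel_2
print(f"estimated leak: {estimated_leak:.2f}")
```

No reference spectrum is supplied anywhere in the sketch; the leak coefficient is recovered from the two mixed images alone, which is the property the article attributes to PICASSO.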

To demonstrate PICASSO’s capabilities, the researchers applied the technique to a mouse brain, performing 15-color multiplexed imaging with a single round of staining. Although small, mouse brains are still complex, multifaceted organs that can take significant resources to map. According to the researchers, PICASSO can improve the capabilities of other imaging techniques and allow for the use of even more fluorophore colors.

Using one such imaging technique in combination with PICASSO, the team achieved 45-color multiplexed imaging of the mouse brain in only three staining and imaging cycles, according to Yoon.

“PICASSO is a versatile tool for the multiplexed biomolecule imaging of cultured cells, tissue slices and clinical specimens,” Chang said. “We anticipate that PICASSO will be useful for a broad range of applications for which biomolecules’ spatial information is important. One such application the tool would be useful for is revealing the cellular heterogeneities of tumor microenvironments, especially the heterogeneous populations of immune cells, which are closely related to cancer prognoses and the efficacy of cancer therapies.”

The Samsung Research Funding & Incubation Center for Future Technology supported this work. Spectral imaging was performed at the Korea Basic Science Institute Western Seoul Center.

-Publication: Junyoung Seo, Yeonbo Sim, Jeewon Kim, Hyunwoo Kim, In Cho, Hoyeon Nam, Young-Gyu Yoon, Jae-Byum Chang, “PICASSO allows ultra-multiplexed fluorescence imaging of spatially overlapping proteins without reference spectra measurements,” May 5, Nature Communications (doi.org/10.1038/s41467-022-30168-z)

-Profile: Professor Jae-Byum Chang, Department of Materials Science and Engineering, College of Engineering, KAIST

Professor Young-Gyu Yoon, School of Electrical Engineering, College of Engineering, KAIST

2022.06.22 View 10837

Now You Can See Floral Scents!

Optical interferometry visualizes how often lilies emit volatile organic compounds

Have you ever thought about when flowers emit their scents?

KAIST mechanical engineers and biological scientists directly visualized how often a lily releases a floral scent using a laser interferometry method. These measurement results can provide new insights for understanding and further exploring the biosynthesis and emission mechanisms of floral volatiles.

Why is it important to know this? It is well known that the fragrance of flowers affects their interactions with pollinators, microorganisms, and florivores. For instance, many flowering plants can tune their scent emission rates when pollinators are active for pollination. Petunias and the wild tobacco Nicotiana attenuata emit floral scents at night to attract night-active pollinators. Thus, visualizing scent emissions can help us understand the ecological evolution of plant-pollinator interactions.

Many groups have been trying to develop methods for scent analysis. Mass spectrometry is one widely used method for investigating the fragrance of flowers. Although mass spectrometry reveals the quality and quantity of floral scents, it cannot directly measure how often the scents are released. A laser-based gas detection system and a smartphone-based detection system using chemo-responsive dyes have also been used to measure volatile organic compounds (VOCs) in real time, but it is still hard to measure the time-dependent emission rate of floral scents.

However, the KAIST research team, co-led by Professor Hyoungsoo Kim from the Department of Mechanical Engineering and Professor Sang-Gyu Kim from the Department of Biological Sciences, measured the emission frequency by detecting the difference in refractive index between the VOC vapor released by lilies and the surrounding air. The refractive index of air is 1.0, while that of linalool, the major floral scent of the lily, is 1.46.
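As a rough illustration of why a refractive index difference is visible to an interferometer, the sketch below applies the standard relation between optical path difference and fringe shift. The wavelength, path length, and index change of the scented air are hypothetical values chosen for the example and do not come from the study.

```python
import math


def fringe_shift(n_sample, n_reference, path_length_m, wavelength_m):
    """Number of fringes shifted by a refractive index difference along the beam."""
    return (n_sample - n_reference) * path_length_m / wavelength_m


def phase_shift_rad(n_sample, n_reference, path_length_m, wavelength_m):
    """Same quantity expressed as a phase difference in radians."""
    return 2.0 * math.pi * fringe_shift(
        n_sample, n_reference, path_length_m, wavelength_m
    )


# Hypothetical setup (assumptions, not values from the article).
WAVELENGTH_M = 632.8e-9    # assumed laser wavelength
PATH_LENGTH_M = 0.05       # assumed length of the beam path through the vapor
N_AIR = 1.0                # refractive index of air as quoted in the article
n_scented_air = N_AIR + 1.0e-6  # assumed small increase caused by dilute vapor

shift = fringe_shift(n_scented_air, N_AIR, PATH_LENGTH_M, WAVELENGTH_M)
print(f"fringe shift: {shift:.3f}")
```

Even a very small index change produces a measurable fraction of a fringe, so accumulating vapor shows up as a moving interference pattern.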

Professor Hyoungsoo Kim said, “We expect this technology to be further applicable to various industrial sectors such as developing it to detect hazardous substances in a space.” The research team also plans to identify the DNA mechanism that controls floral scent secretion.

The current work, entitled “Real-time visualization of scent accumulation reveals the frequency of floral scent emissions,” was published in Frontiers in Plant Science on April 18, 2022 (https://doi.org/10.3389/fpls.2022.835305).

This research was supported by the Basic Science Research Program through the National Research Foundation of Korea (NRF-2021R1A2C2007835), the Rural Development Administration (PJ016403), and the KAIST-funded Global Singularity Research PREP-Program.

-Publication: H. Kim, G. Lee, J. Song, and S.-G. Kim, "Real-time visualization of scent accumulation reveals the frequency of floral scent emissions," Frontiers in Plant Science 18, 835305 (2022) (https://doi.org/10.3389/fpls.2022.835305)

-Profile: Professor Hyoungsoo Kim, http://fil.kaist.ac.kr, @MadeInH on Twitter, Department of Mechanical Engineering, KAIST

Professor Sang-Gyu Kim, https://sites.google.com/view/kimlab/home, Department of Biological Sciences, KAIST

2022.05.25 View 10201

Sumi Jo Performing Arts Research Center Opens

Distinguished visiting scholar and soprano Sumi Jo gave a special lecture on May 13 at the KAIST auditorium. During the lecture, she talked about new technologies that will be introduced on future performing arts stages and shared some of the challenges she experienced before reaching stardom on the world stage. She also joined the KAIST student choral club ‘Chorus’ to perform the KAIST school song.

Professor Jo also opened the Sumi Jo Performing Arts Research Center on the same day along with President Kwang Hyung Lee and faculty members from the Graduate School of Culture Technology. The center will conduct research on AI- and metaverse-based performing arts technologies, such as performer modeling via AI playing and motion creation, interactions between virtual and human players via sound analysis and motion recognition, and virtual stage and performing center modeling. The center will also carry out extensive stage production research that applies media convergence technologies.

Professor Juhan Nam, who heads the research center, said that the center is seeking collaborations with other universities such as Seoul National University and the Korea National University of Arts as well as top performing artists at home and abroad. He looks forward to the center growing into a collaborative center for future performing arts.

Professor Jo added that she will spare no effort to offer her experience and advice for the center’s future-forward performing arts research projects.

2022.05.16 View 7171

Professor Hyo-Sang Shin at Cranfield University Named the 18th Jeong Hun Cho Awardee

Professor Hyo-Sang Shin at Cranfield University in the UK was named the 18th Jeong Hun Cho Award recipient. PhD candidate Kyu-Sob Kim from the Department of Aerospace Engineering at KAIST, Master’s candidate Kon-Hee Chang from Korea University, and Jae-Woo Chang from Kongju National University High School were also selected.

Professor Shin, a 2016 PhD graduate of the KAIST Department of Aerospace Engineering, works at Cranfield University. His main research focus covers guidance, navigation, and control, and he conducts research on information-based control. He has published 66 articles in SCI journals, presented approximately 70 papers at academic conferences, and registered more than 12 patents. He is known for his expertise in areas related to unmanned aerospace systems and urban aero traffic automation. Professor Shin is participating in various aerospace engineering development projects run by the UK government.

The award recognizes promising young scientists who have made significant achievements in the field of aerospace engineering. It honors Jeong Hun Cho, a former PhD candidate in KAIST’s Department of Aerospace Engineering who died in a lab accident in May 2003. Cho’s family endowed the award and scholarship to honor him, and a recipient from each of his three alma maters (Kongju National University High School, Korea University, and KAIST) is selected every year.

Professor Shin was awarded 25 million KRW in prize money. KAIST student Kim and Korea University student Chang received four million KRW while Kongju National University High School student Chang received three million KRW.

2022.05.16 View 7314

Machine Learning-Based Algorithm to Speed up DNA Sequencing

The algorithm presents the first full-fledged, short-read alignment software that leverages learned indices for solving the exact match search problem for efficient seeding

The human genome consists of a complete set of DNA, which is about 6.4 billion letters long. Because of its size, reading the whole genome sequence at once is challenging. So scientists use DNA sequencers to produce hundreds of millions of DNA sequence fragments, or short reads, up to 300 letters long. Then the DNA sequencer assembles all the short reads like a giant jigsaw puzzle to reconstruct the entire genome sequence. Even with very fast computers, this job can take hours to complete.

A research team at KAIST has achieved up to 3.45x faster speeds by developing the first short-read alignment software that uses a recent advance in machine-learning called a learned index.

The research team reported their findings on March 7, 2022 in the journal Bioinformatics. The software has been released as open source and can be found on GitHub (https://github.com/kaist-ina/BWA-MEME).

Next-generation sequencing (NGS) is a state-of-the-art DNA sequencing method. Projects are underway with the goal of producing genome sequencing at population scale. Modern NGS hardware is capable of generating billions of short reads in a single run. The short reads then have to be aligned with the reference DNA sequence. With large-scale DNA sequencing operations running hundreds of next-generation sequencers, the need for an efficient short-read alignment tool has become even more critical. Accelerating DNA sequence alignment would be a step toward achieving the goal of population-scale sequencing. However, existing algorithms are limited in their performance because of their frequent memory accesses.

BWA-MEM2 is a popular short-read alignment software package currently used to sequence the DNA. However, it has its limitations. The state-of-the-art alignment has two phases – seeding and extending. During the seeding phase, searches find exact matches of short reads in the reference DNA sequence. During the extending phase, the short reads from the seeding phase are extended. In the current process, bottlenecks occur in the seeding phase. Finding the exact matches slows the process.

The researchers set out to accelerate DNA sequence alignment. To speed up the process, they applied machine learning techniques to create an algorithmic improvement. Their algorithm, BWA-MEME (BWA-MEM emulated), leverages learned indices to solve the exact match search problem. The original software compared one character at a time for an exact match search. The team’s new algorithm achieves up to 3.45x faster speeds in seeding throughput over BWA-MEM2 by reducing the number of instructions by 4.60x and memory accesses by 8.77x. “Through this study, it has been shown that full genome big data analysis can be performed faster and less costly than conventional methods by applying machine learning technology,” said Professor Dongsu Han from the School of Electrical Engineering at KAIST.
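The general idea of a learned index can be sketched in a few lines. The example below is a simplified illustration, not BWA-MEME's actual data structure: it assumes a single linear model over sorted, integer-encoded k-mers, and the class name, helper function, and toy reference string are all hypothetical. The model predicts where a key should sit, and a search bounded by the model's worst observed error confirms the exact match.

```python
import bisect

BASE_CODE = {"A": 0, "C": 1, "G": 2, "T": 3}


def encode(kmer):
    """Pack a DNA string into an integer, two bits per base."""
    code = 0
    for base in kmer:
        code = code * 4 + BASE_CODE[base]
    return code


class LearnedIndex:
    """Linear model over sorted unique keys plus a bounded local search."""

    def __init__(self, sorted_keys):
        self.keys = list(sorted_keys)
        n = len(self.keys)
        mean_key = sum(self.keys) / n
        mean_pos = (n - 1) / 2
        var = sum((k - mean_key) ** 2 for k in self.keys)
        cov = sum((k - mean_key) * (i - mean_pos) for i, k in enumerate(self.keys))
        # Least-squares line mapping a key to its approximate position.
        self.slope = cov / var if var else 0.0
        self.intercept = mean_pos - self.slope * mean_key
        # The worst prediction error on the indexed keys bounds the search window.
        self.max_err = max(abs(i - self._predict(k)) for i, k in enumerate(self.keys))

    def _predict(self, key):
        return int(round(self.slope * key + self.intercept))

    def lookup(self, key):
        """Return the position of key, or -1 if it is not indexed."""
        n = len(self.keys)
        guess = self._predict(key)
        lo = min(max(0, guess - self.max_err), n)
        hi = max(lo, min(n, guess + self.max_err + 1))
        pos = bisect.bisect_left(self.keys, key, lo, hi)
        return pos if pos < n and self.keys[pos] == key else -1


# Toy reference sequence (made up for the example).
reference = "ACGTTGCAAGCTTAGGCTAACGTAGCTAGGATCCGATTACA"
K = 5
kmers = sorted({encode(reference[i:i + K]) for i in range(len(reference) - K + 1)})
index = LearnedIndex(kmers)

hit = index.lookup(encode("GGATC"))   # present in the reference
miss = index.lookup(encode("TTTTT"))  # absent from the reference
print(hit, miss)
```

Because the model narrows the search to a small window, a lookup touches only a few neighboring entries instead of walking the structure one character at a time, which is the kind of saving in instructions and memory accesses the article reports.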

The researchers’ ultimate goal was to develop efficient software that scientists from academia and industry could use on a daily basis for analyzing big data in genomics. “With the recent advances in artificial intelligence and machine learning, we see so many opportunities for designing better software for genomic data analysis. The potential is there for accelerating existing analysis as well as enabling new types of analysis, and our goal is to develop such software,” added Han.

Whole genome sequencing has traditionally been used for discovering genomic mutations and identifying the root causes of diseases, which leads to the discovery and development of new drugs and cures. There could be many potential applications. Whole genome sequencing is used not only for research, but also for clinical purposes. “The science and technology for analyzing genomic data is making rapid progress to make it more accessible for scientists and patients. This will enhance our understanding about diseases and develop a better cure for patients of various diseases.”

The research was funded by the National Research Foundation of the Korean government’s Ministry of Science and ICT.

-Publication: Youngmok Jung, Dongsu Han, “BWA-MEME: BWA-MEM emulated with a machine learning approach,” Bioinformatics, Volume 38, Issue 9, May 2022 (https://doi.org/10.1093/bioinformatics/btac137)

-Profile: Professor Dongsu Han, School of Electrical Engineering, KAIST

2022.05.10 View 9971

VP Sang Yup Lee Receives Honorary Doctorate from DTU

Vice President for Research, Distinguished Professor Sang Yup Lee at the Department of Chemical & Biomolecular Engineering, was awarded an honorary doctorate from the Technical University of Denmark (DTU) during the DTU Commemoration Day 2022 on April 29. The event drew distinguished guests, students, and faculty including HRH The Crown Prince Frederik Andre Henrik Christian and DTU President Anders Bjarklev.

Professor Lee was recognized for his exceptional scholarship in the field of systems metabolic engineering, which led to the development of microcell factories capable of producing a wide range of fuels, chemicals, materials, and natural compounds, many for the first time.

Professor Lee said in his acceptance speech that KAIST’s continued partnership with DTU in the field of biotechnology will lead to significant contributions in the global efforts to respond to climate change and promote green growth.

DTU CPO and CSO Dina Petronovic Nielson, who heads DTU Biosustain, also lauded Professor Lee, saying, “It is not only a great honor for Professor Lee to be inducted at DTU but also a great honor for DTU to have him.”

While in Denmark, Professor Lee also gave commemorative lectures at DTU Biosustain in Lyngby and at the Bio Innovation Research Institute at the Novo Nordisk Foundation in Copenhagen.

DTU, one of the leading science and technology universities in Europe, has been awarding honorary doctorates since 1921, including to Nobel laureate in chemistry Professor Frances Arnold at Caltech. Professor Lee is the first Korean to receive an honorary doctorate from DTU.

2022.05.03 View 11182

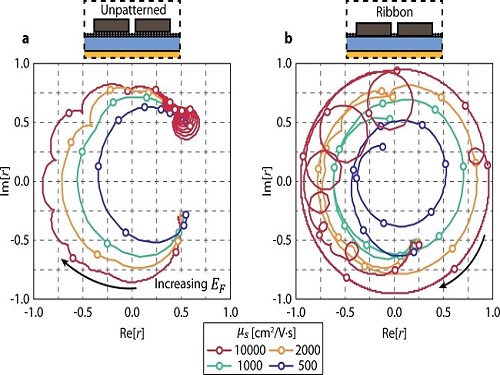

A New Strategy for Active Metasurface Design Provides a Full 360° Phase Tunable Metasurface

The new strategy displays an unprecedented upper limit of dynamic phase modulation with no significant variations in optical amplitude

An international team of researchers led by Professor Min Seok Jang of KAIST and Professor Victor W. Brar of the University of Wisconsin-Madison has demonstrated a widely applicable methodology enabling a full 360° active phase modulation for metasurfaces while maintaining significant levels of uniform light amplitude. This strategy can be fundamentally applied to any spectral region with any structures and resonances that fit the bill.

Metasurfaces are optical components with specialized functionalities indispensable for real-life applications ranging from LIDAR and spectroscopy to futuristic technologies such as invisibility cloaks and holograms. They are known for their compact and micro/nano-sized nature, which enables them to be integrated into electronic computerized systems with sizes that are ever decreasing as predicted by Moore’s law.

To enable such innovations, metasurfaces must be capable of manipulating the impinging light, by controlling the light’s amplitude, its phase, or both, and emitting it back out. However, dynamically modulating the phase over the full-circle range has been a notoriously difficult task, and the very few works that have managed it did so by sacrificing a substantial amount of amplitude control.

Challenged by these limitations, the team proposed a general methodology that enables metasurfaces to implement a dynamic phase modulation with the complete 360° phase range, all the while uniformly maintaining significant levels of amplitude.

The underlying difficulty is a fundamental trade-off in dynamically controlling the optical phase of light. Metasurfaces generally perform such a function through optical resonances, excitations of electrons inside the metasurface structure that oscillate harmonically together with the incident light. To modulate through the entire range of 0° to 360°, the optical resonance frequency (the center of the spectrum) must be tuned by a large amount while the linewidth (the width of the spectrum) is kept to a minimum. However, electrically tuning the optical resonance frequency of the metasurface on demand requires a controllable influx and outflux of electrons into the metasurface, and this inevitably broadens the linewidth of that optical resonance.

The problem is further compounded by the fact that the phase and the amplitude of optical resonances are closely correlated in a complex, non-linear fashion, making it very difficult to hold substantial control over the amplitude while changing the phase.
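The trade-off described above can be put into rough numbers with a toy model. The sketch below assumes a textbook single-port resonator from temporal coupled-mode theory, with reflection coefficient r = (δ − i(γ_rad − γ_abs)/2) / (δ + i(γ_rad + γ_abs)/2), where δ is the detuning of the light from the resonance. This model, the parameter names, and the function names are illustrative assumptions and not the team's device model.

```python
import math


def phase_swing_deg(tuning, gamma_rad, gamma_abs):
    """Reflection phase swing (degrees) when the resonance frequency is swept
    by `tuning`, centered on the probe frequency, for an overcoupled resonator
    (gamma_rad > gamma_abs). All quantities share the same frequency units."""
    if gamma_rad <= gamma_abs:
        raise ValueError("this closed form assumes an overcoupled resonator")
    narrow = gamma_rad - gamma_abs   # sets how fast the numerator phase turns
    total = gamma_rad + gamma_abs    # total linewidth of the resonance
    swing = 2 * math.atan(tuning / narrow) + 2 * math.atan(tuning / total)
    return math.degrees(swing)


def min_amplitude(gamma_rad, gamma_abs):
    """Reflection amplitude |r| exactly on resonance, where it dips the most."""
    return abs(gamma_rad - gamma_abs) / (gamma_rad + gamma_abs)


if __name__ == "__main__":
    # Same tuning range, increasing linewidth: the achievable swing shrinks.
    for gamma in (1.0, 5.0, 10.0):
        print(gamma, round(phase_swing_deg(10.0, gamma, 0.0), 1))
    # Adding absorption loss makes the amplitude dip while the phase changes.
    print(round(min_amplitude(1.0, 0.5), 3))
```

In this toy model the swing approaches 360° only when the tuning range is much larger than the linewidth, and any absorption loss makes the amplitude dip at the point where the phase changes fastest, which mirrors the two obstacles the article describes.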

The team’s work circumvented both problems by using two optical resonances, each with specifically designated properties. One resonance provides the decoupling between the phase and amplitude so that the phase is able to be tuned while significant and uniform levels of amplitude are maintained, as well as providing a narrow linewidth.

The other resonance provides the capability of being sufficiently tuned to a large degree so that the complete full circle range of phase modulation is achievable. The quintessence of the work is then to combine the different properties of the two resonances through a phenomenon called avoided crossing, so that the interactions between the two resonances lead to an amalgamation of the desired traits that achieves and even surpasses the full 360° phase modulation with uniform amplitude.
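Avoided crossing itself can be illustrated with the standard two-mode picture: two resonances with frequencies ω₁ and ω₂ and coupling strength g have hybrid frequencies given by the eigenvalues of the matrix [[ω₁, g], [g, ω₂]]. The sketch below assumes this lossless textbook model; the function name and the numbers are hypothetical and are not taken from the paper.

```python
import math


def coupled_modes(omega_tunable, omega_fixed, coupling):
    """Hybrid mode frequencies of two coupled resonances, i.e. the eigenvalues
    of the symmetric 2x2 matrix [[omega_tunable, g], [g, omega_fixed]]."""
    mean = 0.5 * (omega_tunable + omega_fixed)
    half_gap = math.hypot(0.5 * (omega_tunable - omega_fixed), coupling)
    return mean - half_gap, mean + half_gap


if __name__ == "__main__":
    # Sweep the tunable resonance across the fixed one. The two branches
    # approach each other but never meet: the gap stays at least 2 * coupling.
    for step in range(0, 21, 5):
        omega = 0.5 + 0.05 * step
        lower, upper = coupled_modes(omega, 1.0, 0.1)
        print(round(omega, 2), round(lower, 4), round(upper, 4))
```

Near the point where the uncoupled frequencies would cross, each branch is a mixture of both resonances, which is the sense in which the interaction lets the traits of the two resonances be combined.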

Professor Jang said, “Our research proposes a new methodology in dynamic phase modulation that breaks through the conventional limits and trade-offs, while being broadly applicable in diverse types of metasurfaces. We hope that this idea helps researchers implement and realize many key applications of metasurfaces, such as LIDAR and holograms, so that the nanophotonics industry keeps growing and provides a brighter technological future.”

The research paper authored by Ju Young Kim and Juho Park, et al., and titled "Full 2π Tunable Phase Modulation Using Avoided Crossing of Resonances" was published in Nature Communications on April 19. The research was funded by the Samsung Research Funding & Incubation Center of Samsung Electronics.

-Publication: Ju Young Kim, Juho Park, Gregory R. Holdman, Jacob T. Heiden, Shinho Kim, Victor W. Brar, and Min Seok Jang, “Full 2π Tunable Phase Modulation Using Avoided Crossing of Resonances,” Nature Communications, April 19, 2022. doi.org/10.1038/s41467-022-29721-7

-Profile: Professor Min Seok Jang, School of Electrical Engineering, KAIST

2022.05.02 View 8518

Quantum Technology: the Next Game Changer?

The 6th KAIST Global Strategy Institute Forum explores how quantum technology has evolved into a new growth engine for the future

The participants of the 6th KAIST Global Strategy Institute (GSI) Forum on April 20 agreed that the emerging technology of quantum computing will be a game changer of the future. As KAIST President Kwang Hyung Lee said in his opening remarks, the future is quantum and that future is rapidly approaching. Keynote speakers and panelists presented their insights on the disruptive innovations we are already experiencing.

The three keynote speakers included Dr. Jerry M. Chow, IBM fellow and director of quantum infrastructure, Professor John Preskill from Caltech, and Professor Jungsang Kim from Duke University. They discussed the academic impact and industrial applications of quantum technology, and its prospects for the future.

Dr. Chow leads IBM Quantum’s hardware system development efforts, focusing on research and system deployment. Professor Preskill is one of the leading quantum information science and quantum computation scholars. He coined the term “quantum supremacy.” Professor Kim is the co-founder and CTO of IonQ Inc., which develops general-purpose trapped ion quantum computers and software to generate, optimize, and execute quantum circuits.

Two leading quantum scholars from KAIST, Professor June-Koo Kevin Rhee and Professor Youngik Sohn, and Professor Andreas Heinrich from the IBS Center for Quantum Nanoscience also participated in the forum as panelists. Professor Rhee is the founder of the nation’s first quantum computing software company and leads the AI Quantum Computing IT Research Center at KAIST.

During the panel session, Professor Rhee said that although it is undeniable that quantum computing will be a game changer, there are several challenges. For instance, the first actual quantum computers are NISQ (noisy intermediate-scale quantum) devices, which are still incomplete. However, they are expected to outperform supercomputers. Until then, it is important to advance the accuracy of quantum computation in order to offer super computation speeds.

Professor Sohn, who worked at PsiQuantum, detailed how quantum computers will affect our society. He said that PsiQuantum is developing silicon photonics that will control photons, and that we can’t begin to imagine how silicon photonics will transform our society. Things will change slowly, he said, but the scale will be massive.

The keynote speakers presented how quantum cryptography communications and its sensing technology will serve as disruptive innovations. Dr. Chow stressed that to realize the potential growth and innovation, additional R&D is needed. More research groups and scholars should be nurtured. Only then will the rich R&D resources be able to create breakthroughs in quantum-related industries. Lastly, the commercialization of quantum computing must be advanced, which will enable the provision of diverse services. In addition, more technological and industrial infrastructure must be built to better accommodate quantum computing.

Professor Preskill believes that quantum computing will benefit humanity. He cited two basic reasons for his optimistic prediction: quantum complexity and quantum error correction. He explained why quantum computing is so powerful: a quantum computer can easily solve problems classically considered difficult, such as factorization. In addition, a quantum computer has the potential to efficiently simulate all of the physical processes taking place in nature.

Despite such dramatic advantages, why does it seem so difficult? Professor Preskill believes this is because we want qubits (quantum bits, the basic units of quantum information) to interact very strongly with each other, yet, because computations can fail, we don’t want them to interact with the environment unless we can control and predict those interactions.

As for quantum computing in the NISQ era, he said that NISQ will be an exciting tool for exploring physics. Professor Preskill does not believe that NISQ will change the world on its own; rather, it is a step toward more powerful quantum technologies in the future. He added that a potentially transformative, scalable quantum computer could still be decades away.

Professor Preskill said that a large number of qubits, higher accuracy, and better quality will require a significant investment. He said if we expand with better ideas, we can make a better system. In the longer term, quantum technology will bring significant benefits to the technological sectors and society in the fields of materials, physics, chemistry, and energy production.

Professor Kim from Duke University presented on the practical applications of quantum computing, especially in the startup environment. He said that although there is no right answer for the early applications of quantum computing, developing new approaches to solve difficult problems and raising the accessibility of the technology will be important for ensuring the growth of technology-based startups.

2022.04.21 View 12445

Professor Hyunjoo Jenny Lee to Co-Chair IEEE MEMS 2025

Professor Hyunjoo Jenny Lee from the School of Electrical Engineering has been appointed General Chair of the 38th IEEE MEMS 2025 (International Conference on Micro Electro Mechanical Systems). Professor Lee, who is 40, is the conference’s youngest General Chair to date and will work jointly with Professor Sheng-Shian Li of Taiwan’s National Tsing Hua University as co-chairs in 2025.

IEEE MEMS is a top-tier international conference on microelectromechanical systems and it serves as a core academic showcase for MEMS research and technology in areas such as microsensors and actuators.

With over 800 MEMS paper submissions each year, the conference only accepts and publishes about 250 of them after a rigorous review process recognized for its world-class prestige. Of all the submissions, fewer than 10% are chosen for oral presentations.

2022.04.18 View 7734

KAIST Partners with Korea National Sport University

KAIST President Kwang Hyung Lee signed an MOU with Korea National Sport University (KNSU) President Yong-Kyu Ahn for collaboration in education and research in the fields of sports science and technology on April 5 at the KAIST main campus. The agreement also extends to student and credit exchanges between the two universities.

With this signing, KAIST plans to develop programs in which KAIST students can participate in the diverse sports classes and activities offered at KNSU.

Officials from KNSU said that this collaboration with KAIST will provide a new opportunity to recognize the importance of sports science more extensively. They added that KNSU will continue to foster more competitive sports talents who understand the convergence between sports science and technology.

The two universities also plan to conduct research on body mechanics to optimize athletes’ performance, analyze how the muscles of athletes in different events move, and propose creative new solutions utilizing robot rehabilitation and AR technologies. The research is expected to extend to improving the physical performance of the general public, especially older people, and to developing training solutions for musculoskeletal injury prevention as Korean society deals with its growing aging population.

President Lee said, “I look forward to the synergic impact when KAIST works together with the nation’s top sports university. We will make every effort to spearhead the wellbeing of the general public in our aging society as well as for growth of sports.”

President Ahn said, “The close collaboration between KAIST and KNSU will revitalize the sports community that has been staggering due to the Covid-19 pandemic and will contribute to the advancement of sports science in Korea.”

2022.04.07 View 5948

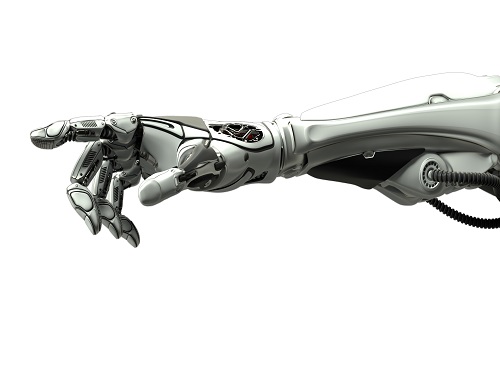

Decoding Brain Signals to Control a Robotic Arm

Advanced brain-machine interface system successfully interprets arm movement directions from neural signals in the brain

Researchers have developed a mind-reading system for decoding neural signals from the brain during arm movement. The method, described in the journal Applied Soft Computing, can be used by a person to control a robotic arm through a brain-machine interface (BMI).

A BMI is a device that translates nerve signals into commands to control a machine, such as a computer or a robotic limb. There are two main techniques for monitoring neural signals in BMIs: electroencephalography (EEG) and electrocorticography (ECoG).

The EEG records signals from electrodes on the surface of the scalp and is widely employed because it is non-invasive, relatively cheap, safe and easy to use. However, the EEG has low spatial resolution and picks up irrelevant neural signals, which makes it difficult to interpret an individual’s intentions from the recording.

On the other hand, the ECoG is an invasive method that involves placing electrodes directly on the surface of the cerebral cortex below the scalp. Compared with the EEG, the ECoG can monitor neural signals with much higher spatial resolution and less background noise. However, this technique has several drawbacks.

“The ECoG is primarily used to find potential sources of epileptic seizures, meaning the electrodes are placed in different locations for different patients and may not be in the optimal regions of the brain for detecting sensory and movement signals,” explained Professor Jaeseung Jeong, a brain scientist at KAIST. “This inconsistency makes it difficult to decode brain signals to predict movements.”

To overcome these problems, Professor Jeong’s team developed a new method for decoding ECoG neural signals during arm movement. The system is based on a machine-learning system for analysing and predicting neural signals called an ‘echo-state network’ and a mathematical probability model called the Gaussian distribution.
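As a rough illustration of how an echo-state network can be paired with a Gaussian model, the sketch below drives a small fixed random reservoir with a multichannel signal and classifies the final reservoir state using one diagonal Gaussian per class. Everything here is an assumption made for illustration (the class name `EchoStateClassifier`, the reservoir size, the leak rate, the weight scaling, and the per-class Gaussian readout); it is not the decoder described in the paper.

```python
import math
import random


class EchoStateClassifier:
    """Fixed random reservoir plus a per-class diagonal Gaussian readout."""

    def __init__(self, n_inputs, n_reservoir=30, leak=0.3, seed=0):
        rng = random.Random(seed)
        # Small recurrent weights keep the reservoir dynamics stable
        # (a rough stand-in for explicit spectral-radius scaling).
        scale = 0.9 / math.sqrt(n_reservoir)
        self.w_in = [[rng.uniform(-1, 1) for _ in range(n_inputs)]
                     for _ in range(n_reservoir)]
        self.w = [[rng.uniform(-1, 1) * scale for _ in range(n_reservoir)]
                  for _ in range(n_reservoir)]
        self.leak = leak
        self.stats = {}  # label -> (mean vector, variance vector)

    def final_state(self, sequence):
        """Run the reservoir over a sequence of input vectors."""
        x = [0.0] * len(self.w)
        for u in sequence:
            new_x = []
            for i in range(len(x)):
                pre = (sum(a * b for a, b in zip(self.w_in[i], u))
                       + sum(a * b for a, b in zip(self.w[i], x)))
                new_x.append((1 - self.leak) * x[i] + self.leak * math.tanh(pre))
            x = new_x
        return x

    def fit(self, sequences, labels):
        """Only the readout is trained: a mean and variance per class."""
        by_label = {}
        for seq, lab in zip(sequences, labels):
            by_label.setdefault(lab, []).append(self.final_state(seq))
        for lab, states in by_label.items():
            n = len(states)
            cols = list(zip(*states))
            mean = [sum(c) / n for c in cols]
            # A small floor keeps variances strictly positive.
            var = [sum((v - m) ** 2 for v in c) / n + 1e-3
                   for c, m in zip(cols, mean)]
            self.stats[lab] = (mean, var)

    def predict(self, sequence):
        """Return the label whose Gaussian gives the highest log-likelihood."""
        x = self.final_state(sequence)

        def log_lik(mean, var):
            return -0.5 * sum(math.log(2 * math.pi * v) + (xi - m) ** 2 / v
                              for xi, m, v in zip(x, mean, var))

        return max(self.stats, key=lambda lab: log_lik(*self.stats[lab]))
```

The reservoir weights are never trained; only the class statistics in the readout are fitted, which is what makes echo-state approaches cheap to train compared with fully trained recurrent networks.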

In the study, the researchers recorded ECoG signals from four individuals with epilepsy while they were performing a reach-and-grasp task. Because the ECoG electrodes were placed according to the potential sources of each patient’s epileptic seizures, only 22% to 44% of the electrodes were located in the regions of the brain responsible for controlling movement.

During the movement task, the participants were given visual cues, either by placing a real tennis ball in front of them, or via a virtual reality headset showing a clip of a human arm reaching forward in first-person view. They were asked to reach forward, grasp an object, then return their hand and release the object, while wearing motion sensors on their wrists and fingers. In a second task, they were instructed to imagine reaching forward without moving their arms.

The researchers monitored the signals from the ECoG electrodes during real and imaginary arm movements, and tested whether the new system could predict the direction of this movement from the neural signals. They found that the novel decoder successfully classified arm movements in 24 directions in three-dimensional space, both in the real and virtual tasks, and that the results were at least five times more accurate than chance. They also used a computer simulation to show that the novel ECoG decoder could control the movements of a robotic arm.

Overall, the results suggest that the new machine learning-based BMI system successfully used ECoG signals to interpret the direction of the intended movements. The next steps will be to improve the accuracy and efficiency of the decoder. In the future, it could be used in a real-time BMI device to help people with movement or sensory impairments.

This research was supported by the KAIST Global Singularity Research Program of 2021, Brain Research Program of the National Research Foundation of Korea funded by the Ministry of Science, ICT, and Future Planning, and the Basic Science Research Program through the National Research Foundation of Korea funded by the Ministry of Education.

-Publication: Hoon-Hee Kim, Jaeseung Jeong, “An electrocorticographic decoder for arm movement for brain-machine interface using an echo state network and Gaussian readout,” Applied Soft Computing, online December 31, 2021 (doi.org/10.1016/j.asoc.2021.108393)

-Profile: Professor Jaeseung Jeong, Department of Bio and Brain Engineering, College of Engineering, KAIST

2022.03.18 View 12909

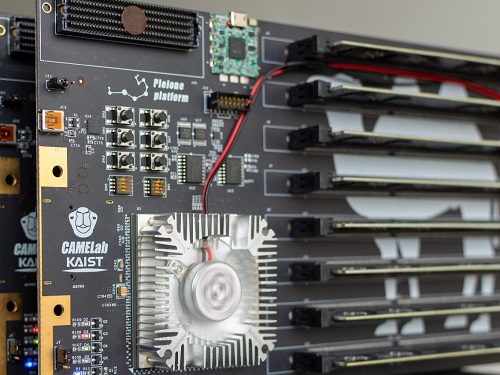

CXL-Based Memory Disaggregation Technology Opens Up a New Direction for Big Data Solution Frameworks

A KAIST team’s compute express link (CXL) provides new insights on memory disaggregation and ensures direct access and high-performance capabilities

A team from the Computer Architecture and Memory Systems Laboratory (CAMEL) at KAIST presented a new compute express link (CXL) solution whose directly accessible, high-performance memory disaggregation opens new directions for big data memory processing. Professor Myoungsoo Jung said the team’s technology significantly improves performance compared to existing remote direct memory access (RDMA)-based memory disaggregation.

CXL is a new dynamic multi-protocol, built on peripheral component interconnect express (PCIe), for using memory devices and accelerators efficiently. Many enterprise data centers and memory vendors are paying attention to it as the next-generation multi-protocol for the era of big data.

Emerging big data applications such as machine learning, graph analytics, and in-memory databases require large memory capacities. However, scaling out memory capacity through an existing memory interface such as double data rate (DDR) is limited by the number of central processing units (CPUs) and memory controllers. This has led to memory disaggregation, which connects a host to another host’s memory or to dedicated memory nodes.

RDMA lets a host directly access another host’s memory via InfiniBand, a network protocol commonly used in data centers. Most existing memory disaggregation technologies employ RDMA to obtain a large memory capacity: a host shares another host’s memory by transferring data between local and remote memory.

Although RDMA-based memory disaggregation provides a large memory capacity to a host, two critical problems exist. First, scaling out the memory still requires adding an extra CPU. Because passive memory such as dynamic random-access memory (DRAM) cannot operate by itself, it must be controlled by a CPU. Second, redundant data copies and software fabric interventions in RDMA-based memory disaggregation lengthen access latency. For example, remote memory access latency in RDMA-based memory disaggregation is multiple orders of magnitude longer than local memory access.

To address these issues, Professor Jung’s team developed a CXL-based memory disaggregation framework that includes CXL-enabled customized CPUs, CXL devices, CXL switches, and CXL-aware operating system modules. The team’s CXL device is a purely passive, directly accessible memory node that contains multiple DRAM dual inline memory modules (DIMMs) and a CXL memory controller. Because the CXL memory controller manages the memory in the CXL device, a host can use the memory node without processor or software intervention. The team’s CXL switch scales out a host’s memory capacity by hierarchically connecting multiple CXL devices, allowing hundreds of devices or more. Atop the switches and devices, the team’s CXL-enabled operating system removes the redundant data copies and protocol conversion required by conventional RDMA, which can significantly decrease access latency to the memory nodes.

In a test that loaded 64-byte (cache line) data from memory pooling devices, CXL-based memory disaggregation showed 8.2 times higher data load performance than RDMA-based memory disaggregation, and performance similar to that of local DRAM. In the team’s evaluations on big data benchmarks, such as a machine learning-based test, CXL-based memory disaggregation also showed up to 3.7 times higher performance than prior RDMA-based memory disaggregation technologies.

“Escaping from the conventional RDMA-based memory disaggregation, our CXL-based memory disaggregation framework can provide high scalability and performance for diverse datacenters and cloud service infrastructures,” said Professor Jung. He went on to stress, “Our CXL-based memory disaggregation research will bring about a new paradigm for memory solutions that will lead the era of big data.”

-Profile: Professor Myoungsoo Jung, Computer Architecture and Memory Systems Laboratory (CAMEL), http://camelab.org, School of Electrical Engineering, KAIST

2022.03.16 View 23521
