interaction


Image Analysis to Automatically Quantify Gender Bias in Movies

Many commercial films worldwide continue to express womanhood in a stereotypical manner, a recent study using image analysis showed. A KAIST research team developed a novel image analysis method for automatically quantifying the degree of gender bias in films.

The ‘Bechdel Test’ has been the most representative and general method of evaluating gender bias in films. This test indicates the degree of gender bias in a film by measuring how active the presence of women is in a film. A film passes the Bechdel Test if it (1) has at least two female characters (2) who talk to each other (3) about something other than the male characters.
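The three criteria can be sketched as a simple check. This is a minimal illustration only: the `Conversation` record, its fields, and the function name are hypothetical, and in practice a person has to judge what each conversation is about.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Conversation:
    speakers: frozenset  # names of the characters taking part (hypothetical field)
    about_men: bool      # True if the talk concerns male characters (human judgment)


def passes_bechdel(female_characters, conversations):
    """Return True only if all three criteria hold; the result is pass/fail."""
    women = set(female_characters)
    # (1) at least two female characters
    if len(women) < 2:
        return False
    for conv in conversations:
        # (2) at least two of the women talk to each other
        if len(conv.speakers & women) >= 2:
            # (3) and the conversation is not about the male characters
            if not conv.about_men:
                return True
    return False


women = ["Ann", "Bea"]
talk = Conversation(frozenset({"Ann", "Bea"}), about_men=False)
print(passes_bechdel(women, [talk]))
```

Note that the function can only return pass or fail, which is the dichotomous outcome the next paragraph criticizes.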

However, the Bechdel Test has fundamental limitations regarding the accuracy and practicality of the evaluation. Firstly, the Bechdel Test requires considerable human resources, as it is performed subjectively by a person. More importantly, the Bechdel Test analyzes only a single aspect of the film, the dialogues between characters in the script, and provides only a dichotomous result of passing or failing the test, neglecting the fact that a film is a visual art form reflecting multi-layered and complicated gender bias phenomena. The test also struggles to represent today’s discourse on gender bias, which is much more diverse than it was in 1985, when the Bechdel Test was first presented.

Inspired by these limitations, a KAIST research team led by Professor Byungjoo Lee from the Graduate School of Culture Technology proposed an advanced system that uses computer vision technology to automatically analyze the visual information of each frame of the film. This allows the system to evaluate, more accurately and practically and in quantitative terms, the degree to which female and male characters are depicted differently in a film, and further reveals gender bias that conventional analysis methods could not detect.

Professor Lee and his researchers Ji Yoon Jang and Sangyoon Lee analyzed 40 films from Hollywood and South Korea released between 2017 and 2018. They downsampled the films from 24 to 3 frames per second, and used Microsoft’s Face API facial recognition technology and object detection technology YOLO9000 to verify the details of the characters and their surrounding objects in the scenes.
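As a rough illustration of the downsampling step, going from 24 to 3 frames per second amounts to keeping one frame in every eight. The article does not say how the frames were selected, so the uniform stride below is an assumption, and the function name is hypothetical.

```python
def downsample_indices(total_frames, source_fps=24, target_fps=3):
    """Indices of the frames kept when reducing source_fps to target_fps.

    Assumes frames are kept at a uniform stride (an assumption, not
    something the article states).
    """
    if source_fps % target_fps != 0:
        raise ValueError("this sketch assumes an integer downsampling ratio")
    step = source_fps // target_fps  # 24 // 3 == 8
    return list(range(0, total_frames, step))


# Two seconds of 24 fps footage (48 frames) leaves 6 frames, i.e. 3 per second.
print(downsample_indices(48))  # [0, 8, 16, 24, 32, 40]
```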

Using the new system, the team computed eight quantitative indices that describe the representation of a particular gender in the films. They are: emotional diversity, spatial staticity, spatial occupancy, temporal occupancy, mean age, intellectual image, emphasis on appearance, and type and frequency of surrounding objects.

Figure 1. System Diagram

Figure 2. 40 Hollywood and Korean Films Analyzed in the Study

According to the emotional diversity index, the depicted women were found to be more prone to expressing passive emotions, such as sadness, fear, and surprise. In contrast, male characters in the same films were more likely to demonstrate active emotions, such as anger and hatred.

Figure 3. Difference in Emotional Diversity between Female and Male Characters

The type and frequency of surrounding objects index revealed that female characters appeared together with automobiles only 55.7% as often as male characters did, while they were more likely to appear with furniture and in household settings, at 123.9% of the rate for male characters.

In cases of temporal occupancy and mean age, female characters appeared less frequently in films than males at the rate of 56%, and were on average younger in 79.1% of the cases. These two indices were especially conspicuous in Korean films.

Professor Lee said, “Our research confirmed that many commercial films depict women from a stereotypical perspective. I hope this result promotes public awareness of the importance of taking prudence when filmmakers create characters in films.”

This study was supported by the KAIST College of Liberal Arts and Convergence Science as part of the Venture Research Program for Master’s and PhD Students, and will be presented at the 22nd ACM Conference on Computer-Supported Cooperative Work and Social Computing (CSCW), to be held in Austin, Texas, on November 11.

Publication:

Ji Yoon Jang, Sangyoon Lee, and Byungjoo Lee. 2019. Quantification of Gender Representation Bias in Commercial Films based on Image Analysis. In Proceedings of the 22nd ACM Conference on Computer-Supported Cooperative Work and Social Computing (CSCW). ACM, New York, NY, USA, Article 198, 29 pages. https://doi.org/10.1145/3359300

Link to download the full-text paper:

https://files.cargocollective.com/611692/cscw198-jangA--1-.pdf

Profile: Prof. Byungjoo Lee, MD, PhD

byungjoo.lee@kaist.ac.kr

http://kiml.org/

Assistant Professor

Graduate School of Culture Technology (CT)

Korea Advanced Institute of Science and Technology (KAIST)

https://www.kaist.ac.kr Daejeon 34141, Korea

Profile: Ji Yoon Jang, M.S.

yoone3422@kaist.ac.kr

Interactive Media Lab

Graduate School of Culture Technology (CT)

Korea Advanced Institute of Science and Technology (KAIST)

https://www.kaist.ac.kr Daejeon 34141, Korea

Profile: Sangyoon Lee, M.S. Candidate

sl2820@kaist.ac.kr

Interactive Media Lab

Graduate School of Culture Technology (CT)

Korea Advanced Institute of Science and Technology (KAIST)

https://www.kaist.ac.kr Daejeon 34141, Korea

(END)

2019.10.17


Flexible User Interface Distribution for Ubiquitous Multi-Device Interaction

< Research Group of Professor Insik Shin (center) >

KAIST researchers have developed mobile software platform technology that allows a mobile application (app) to be executed simultaneously and more dynamically on multiple smart devices. Its high flexibility and broad applicability can help accelerate a shift from the current single-device paradigm to a multi-device one, which enables users to utilize mobile apps in ways previously unthinkable.

Recent trends in mobile and IoT technologies in this era of 5G high-speed wireless communication have been hallmarked by the emergence of new display hardware and smart devices such as dual screens, foldable screens, smart watches, smart TVs, and smart cars. However, the current mobile app ecosystem is still confined to the conventional single-device paradigm in which users can employ only one screen on one device at a time. Due to this limitation, the real potential of multi-device environments has not been fully explored.

A KAIST research team led by Professor Insik Shin from the School of Computing, in collaboration with Professor Steve Ko’s group from the State University of New York at Buffalo, has developed mobile software platform technology named FLUID that can flexibly distribute the user interfaces (UIs) of an app to a number of other devices in real time without needing any modifications. The proposed technology provides single-device virtualization, and ensures that the interactions between the distributed UI elements across multiple devices remain intact.

This flexible multimodal interaction can be realized in diverse ubiquitous user experiences (UX), such as using live video streaming and chatting apps including YouTube, LiveMe, and AfreecaTV. FLUID can ensure that the video is not obscured by the chat window by distributing and displaying them on separate devices, which lets users enjoy the chat function while watching the video at the same time.

In addition, the UI for the destination input on a navigation app can be migrated into the passenger’s device with the help of FLUID, so that the destination can be easily and safely entered by the passenger while the driver is at the wheel.

FLUID can also support 5G multi-view apps – the latest service that allows sports or games to be viewed from various angles on a single device. With FLUID, the user can watch the event simultaneously from different viewpoints on multiple devices without switching between viewpoints on a single screen.

PhD candidate Sangeun Oh, who is the first author, and his team implemented the prototype of FLUID on the leading open-source mobile operating system, Android, and confirmed that it can successfully deliver the new UX to 20 existing legacy apps.

“This new technology can be applied to next-generation products from South Korean companies such as LG’s dual screen phone and Samsung’s foldable phone and is expected to embolden their competitiveness by giving them a head-start in the global market,” said Professor Shin.

This study will be presented at the 25th Annual International Conference on Mobile Computing and Networking (ACM MobiCom 2019) October 21 through 25 in Los Cabos, Mexico. The research was supported by the National Science Foundation (NSF) (CNS-1350883 (CAREER) and CNS-1618531).

Figure 1. Live video streaming and chatting app scenario

Figure 2. Navigation app scenario

Figure 3. 5G multi-view app scenario

Publication: Sangeun Oh, Ahyeon Kim, Sunjae Lee, Kilho Lee, Dae R. Jeong, Steven Y. Ko, and Insik Shin. 2019. FLUID: Flexible User Interface Distribution for Ubiquitous Multi-device Interaction. To be published in Proceedings of the 25th Annual International Conference on Mobile Computing and Networking (ACM MobiCom 2019). ACM, New York, NY, USA. Article Number and DOI Name TBD.

Video Material:

https://youtu.be/lGO4GwH4enA

Profile: Prof. Insik Shin, MS, PhD

ishin@kaist.ac.kr

https://cps.kaist.ac.kr/~ishin

Professor

Cyber-Physical Systems (CPS) Lab

School of Computing

Korea Advanced Institute of Science and Technology (KAIST)

http://kaist.ac.kr Daejeon 34141, Korea

Profile: Sangeun Oh, PhD Candidate

ohsang1213@kaist.ac.kr

https://cps.kaist.ac.kr/

PhD Candidate

Cyber-Physical Systems (CPS) Lab

School of Computing

Korea Advanced Institute of Science and Technology (KAIST)

http://kaist.ac.kr Daejeon 34141, Korea

Profile: Prof. Steve Ko, PhD

stevko@buffalo.edu

https://nsr.cse.buffalo.edu/?page_id=272

Associate Professor

Networked Systems Research Group

Department of Computer Science and Engineering

State University of New York at Buffalo

http://www.buffalo.edu/ Buffalo 14260, USA

(END)

2019.07.20


Play Games With No Latency

One of the most challenging issues for game players looks to be resolved soon with the introduction of a zero-latency gaming environment. A KAIST team developed a technology that helps game players maintain zero-latency performance. The new technology transforms the shapes of game design according to the amount of latency.

Latency in human-computer interactions is often caused by various factors related to the environment and performance of the devices, networks, and data processing. The term ‘lag’ is used to refer to any latency during gaming which impacts the user’s performance.

Professor Byungjoo Lee at the Graduate School of Culture Technology, in collaboration with Aalto University in Finland, presented a mathematical model for predicting players’ behavior by understanding the effects of latency on players. This cognitive model is capable of predicting the success rate of a user when there is latency in a ‘moving target selection’ task, which requires button input in a time-constrained situation.

The model predicts the players’ task success rate when latency is added to the gaming environment. Using these predicted success rates, the design elements of the game are geometrically modified to help players maintain similar success rates as they would achieve in a zero-latency environment. In fact, this research succeeded in modifying the pillar heights of the Flappy Bird game, allowing the players to maintain their gaming performance regardless of the added latency.
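The compensation idea can be sketched as a search for the geometry that restores the zero-latency success rate. The success-rate function below is a made-up stand-in, not the cognitive model from the paper, and the names, units, and constants are all hypothetical; the sketch only assumes that predicted success rises as the gap between pillars grows and falls as latency grows.

```python
import math


def predicted_success(gap, latency_ms):
    """Hypothetical stand-in for the paper's model (NOT the real one).

    Success probability increases with the gap and decreases with latency.
    """
    return 1.0 / (1.0 + math.exp(-(gap - 0.5 * latency_ms - 100.0) / 20.0))


def compensated_gap(base_gap, latency_ms, lo=0.0, hi=1000.0, tol=1e-6):
    """Find the gap that, under latency, matches the zero-latency success rate."""
    target = predicted_success(base_gap, 0.0)
    # Bisection works because predicted_success is monotonically
    # increasing in the gap for a fixed latency.
    while hi - lo > tol:
        mid = (lo + hi) / 2.0
        if predicted_success(mid, latency_ms) < target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0


# With this toy model, 40 ms of latency widens a gap of 120 to about 140.
print(round(compensated_gap(base_gap=120.0, latency_ms=40.0), 3))
```

Any monotonic success model could be substituted for `predicted_success` without changing the search.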

Professor Lee said, “This technique is unique in the sense that it does not interfere with a player’s gaming flow, unlike traditional methods which manipulate the game clock by the amount of latency. This study can be extended to various games such as reducing the size of obstacles in the latent computing environment.”

This research, in collaboration with Dr. Sunjun Kim from Aalto University and led by PhD candidate Injung Lee, was presented during the 2019 CHI Conference on Human Factors in Computing Systems last month in Glasgow in the UK.

This research was supported by the National Research Foundation of Korea (NRF) (2017R1C1B2002101, 2018R1A5A7025409), and the Aalto University Seed Funding Granted to the GamerLab respectively.

Figure 1. Overview of Geometric Compensation

Publication:

Injung Lee, Sunjun Kim, and Byungjoo Lee. 2019. Geometrically Compensating Effect of End-to-End Latency in Moving-Target Selection Games. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (CHI’19). ACM, New York, NY, USA, Article 560, 12 pages. https://doi.org/10.1145/3290605.3300790

Video Material:

https://youtu.be/TTi7dipAKJs

Profile: Prof. Byungjoo Lee, MD, PhD

byungjoo.lee@kaist.ac.kr

http://kiml.org/

Assistant Professor

Graduate School of Culture Technology (CT)

Korea Advanced Institute of Science and Technology (KAIST)

http://kaist.ac.kr Daejeon 34141, Korea

Profile: Injung Lee, PhD Candidate

edndn@kaist.ac.kr

PhD Candidate

Interactive Media Lab

Graduate School of Culture Technology (CT)

Korea Advanced Institute of Science and Technology (KAIST)

http://kaist.ac.kr

Daejeon 34141, Korea

Profile: Postdoc. Sunjun Kim, MD, PhD

kuaa.net@gmail.com

Postdoctoral Researcher

User Interfaces Group

Aalto University

https://www.aalto.fi Espoo 02150, Finland

(END)

2019.06.11


Deep Learning Predicts Drug-Drug and Drug-Food Interactions

A Korean research team from KAIST developed a computational framework, DeepDDI, that accurately predicts and generates 86 types of drug-drug and drug-food interactions as outputs of human-readable sentences, which allows in-depth understanding of the drug-drug and drug-food interactions.

Drug interactions, including drug-drug interactions (DDIs) and drug-food constituent interactions (DFIs), can trigger unexpected pharmacological effects, including adverse drug events (ADEs), with causal mechanisms often unknown. However, current prediction methods do not provide sufficient details beyond the chance of DDI occurrence, or require detailed drug information often unavailable for DDI prediction.

To tackle this problem, Dr. Jae Yong Ryu, Assistant Professor Hyun Uk Kim and Distinguished Professor Sang Yup Lee, all from the Department of Chemical and Biomolecular Engineering at Korea Advanced Institute of Science and Technology (KAIST), developed a computational framework, named DeepDDI, that accurately predicts 86 DDI types for a given drug pair. The research results, in a paper entitled “Deep learning improves prediction of drug-drug and drug-food interactions,” were published online in Proceedings of the National Academy of Sciences of the United States of America (PNAS) on April 16, 2018.

DeepDDI takes the structural information and names of the two drugs in a pair as inputs, and predicts relevant DDI types for the input drug pair. DeepDDI uses a deep neural network to predict 86 DDI types with a mean accuracy of 92.4% using the DrugBank gold standard DDI dataset covering 192,284 DDIs contributed by 191,878 drug pairs. Importantly, DDI types predicted by DeepDDI are output as human-readable sentences, which describe changes in pharmacological effects and/or the risk of ADEs as a result of the interaction between the two drugs in a pair. For example, DeepDDI output sentences describing potential interactions between oxycodone (opioid pain medication) and atazanavir (antiretroviral medication) were generated as follows: “The metabolism of Oxycodone can be decreased when combined with Atazanavir”; and “The risk or severity of adverse effects can be increased when Oxycodone is combined with Atazanavir”. By doing this, DeepDDI can provide more specific information on drug interactions beyond the occurrence chance of DDIs or ADEs typically reported to date.
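One way to picture the sentence output is as a template filled in for each predicted DDI type. The sketch below is an assumption about the general approach rather than DeepDDI's actual code: the type labels and the function name are hypothetical, and only the two sentence wordings are taken from the examples above.

```python
# Hypothetical DDI type labels mapped to sentence templates. The wording of
# the two templates follows the oxycodone/atazanavir examples quoted above.
TEMPLATES = {
    "metabolism_decreased": (
        "The metabolism of {a} can be decreased when combined with {b}"
    ),
    "adverse_effects_increased": (
        "The risk or severity of adverse effects can be increased "
        "when {a} is combined with {b}"
    ),
}


def describe(ddi_types, drug_a, drug_b):
    """Render one human-readable sentence per predicted DDI type."""
    return [TEMPLATES[t].format(a=drug_a, b=drug_b) for t in ddi_types]


predicted = ["metabolism_decreased", "adverse_effects_increased"]
for sentence in describe(predicted, "Oxycodone", "Atazanavir"):
    print(sentence)
```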

DeepDDI was first used to predict DDI types of 2,329,561 drug pairs from all possible combinations of 2,159 approved drugs, from which DDI types of 487,632 drug pairs were newly predicted. Also, DeepDDI can be used to suggest which drug or food to avoid during medication in order to minimize the chance of adverse drug events or optimize the drug efficacy. To this end, DeepDDI was used to suggest potential causal mechanisms for the reported ADEs of 9,284 drug pairs, and also predict alternative drug candidates for 62,707 drug pairs having negative health effects to keep only the beneficial effects. Furthermore, DeepDDI was applied to 3,288,157 drug-food constituent pairs (2,159 approved drugs and 1,523 well-characterized food constituents) to predict DFIs. The effects of 256 food constituents on pharmacological effects of interacting drugs and bioactivities of 149 food constituents were also finally predicted. All these prediction results can be useful if an individual is taking medications for a specific (chronic) disease such as hypertension or diabetes mellitus type 2.
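The pair counts quoted above follow directly from the sizes of the two sets, which a short check can confirm: the drug-drug figure is the number of unordered pairs among 2,159 drugs, and the drug-food figure is the product of the two set sizes. The variable names are illustrative only.

```python
import math

approved_drugs = 2159
food_constituents = 1523

# Unordered pairs of distinct approved drugs: C(2159, 2)
drug_pairs = math.comb(approved_drugs, 2)

# Every approved drug paired with every food constituent
drug_food_pairs = approved_drugs * food_constituents

print(drug_pairs)       # 2329561
print(drug_food_pairs)  # 3288157
```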

Distinguished Professor Sang Yup Lee said, “We have developed a platform technology DeepDDI that will allow precision medicine in the era of Fourth Industrial Revolution. DeepDDI can serve to provide important information on drug prescription and dietary suggestions while taking certain drugs to maximize health benefits and ultimately help maintain a healthy life in this aging society.”

Figure 1. Overall scheme of DeepDDI and prediction of food constituents that reduce the in vivo concentration of approved drugs

2018.04.18

Sangeun Oh Recognized as a 2017 Google Fellow

Sangeun Oh, a Ph.D. candidate in the School of Computing, was selected as a Google PhD Fellow in 2017. He is one of 47 awardees of the Google PhD Fellowship worldwide.

The Google PhD Fellowship is awarded to students showing outstanding performance in computer science and related research fields. Since its establishment in 2009, the program has provided various benefits, including scholarships worth USD 10,000 and one-to-one research discussions with mentors from Google.

His research on a mobile system that allows interactions among various kinds of smart devices was recognized in the field of mobile computing. He developed a mobile platform that allows smart devices to share diverse functions, including logins, payments, and sensors. This technology provides numerous user experiences that existing mobile platforms could not offer. Through cross-device functionality sharing, users can utilize multiple smart devices in a more convenient manner. The research was presented at the Annual International Conference on Mobile Systems, Applications, and Services (MobiSys) of the Association for Computing Machinery in July 2017.

Oh said, “I would like to express my gratitude to my advisor, the professors in the School of Computing, and my lab colleagues. I will devote myself to carrying out more research in order to contribute to society.”

His advisor, Insik Shin, a professor in the School of Computing, said, “Being recognized as a Google PhD Fellow is an honor to both the student as well as KAIST. I strongly anticipate and believe that Oh will make the next step by carrying out good quality research.”

2017.09.27 View 13235

Professor Jinah Park Received the Prime Minister's Award

Professor Jinah Park of the School of Computing received the Prime Minister’s Citation Ribbon on April 21 at a ceremony celebrating the Day of Science and ICT. The awardee was selected by the Ministry of Science, ICT and Future Planning and the Korea Communications Commission.

Professor Park was recognized for her convergence R&D of a VR simulator for dental treatment with haptic feedback, in addition to her research on understanding 3D interaction behavior in VR environments. Her major academic contributions are in the field of medical imaging, where she developed a computational technique to analyze cardiac motion from tagging data.

Professor Park said she was very pleased to see her twenty-plus years of research on ways to converge computing into medical areas finally bear fruit. She also thanked her colleagues and students in her Computer Graphics and CGV Research Lab for working together to make this achievement possible.

2017.04.26 View 10665

KAIST Hosts the Wearable Computer Contest 2015

Deadlines: May 30, 2015 for the Prototype Contest and August 15, 2015 for the Idea Contest

KAIST will hold the Wearable Computer Contest 2015 in November, which will be sponsored by Samsung Electronics Co., Ltd.

Wearable computers have emerged as next-generation mobile devices, and are gaining more popularity with the growth of the Internet of Things. KAIST has introduced wearable devices such as K-Glass 2, smart glasses with embedded augmented reality that can also be controlled by eye blinks.

This year’s contest, with the theme of “Wearable Computers for Internet of Things,” is divided into two parts: the Prototype Competition and the Idea Contest.

With the fusion of information technology (IT) and fashion, contestants are encouraged to submit prototypes of their ideas by May 30, 2015. The ten teams that make it to the finals will receive a wearable computer platform and Human-Computer Interaction (HCI) education, along with a prize of USD 1,000 for prototype production costs. The winner of the Prototype Contest will receive a prize of USD 5,000 and an award from the Minister of Science, ICT and Future Planning (MSIP) of the Republic of Korea.

In the Idea Contest, posters containing ideas and concepts of wearable devices should be submitted by August 15, 2015. The teams that make it to the finals will have to display a life-size mockup in the final stage. The winner of the contest will receive a prize of USD 1,000 and an award from the Minister of MSIP.

Any undergraduate or graduate student in Korea can enter the Prototype Competition and anyone can participate in the Idea Contest.

The chairman of the event, Hoi-Jun Yoo, a professor of the Department of Electrical Engineering at KAIST, noted:

“There is a growing interest in wearable computers in the industry. I can easily envisage that there will be a new IT world where wearable computers are integrated into the Internet of Things, healthcare, and smart homes.”

More information on the contest can be found online at http://www.ufcom.org.

Picture: Finalists in last year’s contest

2015.05.11 View 8718

Interactions Features KAIST's Human-Computer Interaction Lab

Interactions, a bi-monthly magazine published by the Association for Computing Machinery (ACM), the largest educational and scientific computing society in the world, featured an article introducing the Human-Computer Interaction (HCI) Lab at KAIST in the March/April 2015 issue (http://interactions.acm.org/archive/toc/march-april-2015).

Established in 2002, the HCI Lab (http://hcil.kaist.ac.kr/) is run by Professor Geehyuk Lee of the Computer Science Department at KAIST. The lab conducts various research projects to improve the design and operation of physical user interfaces and develops new interaction techniques for new types of computers. For the article, see the link below:

ACM Interactions, March and April 2015

Day in the Lab: Human-Computer Interaction Lab @ KAIST

http://interactions.acm.org/archive/view/march-april-2015/human-computer-interaction-lab-kaist

2015.03.02 View 11107

A KAIST Student Team Wins the ACM UIST 2014 Student Innovation Contest

A KAIST team consisting of students from the Departments of Industrial Design and Computer Science participated in the ACM UIST 2014 Student Innovation Contest and received 1st Prize in the People’s Choice category.

The Association for Computing Machinery (ACM) Symposium on User Interface Software and Technology (UIST) is an international forum to promote innovations in human-computer interfaces, which takes place annually and is sponsored by ACM Special Interest Groups on Computer-Human Interaction (SIGCHI) and Computer Graphics (SIGGRAPH). The ACM UIST conference brings together professionals in the fields of graphical and web-user interfaces, tangible and ubiquitous computing, virtual and augmented reality, multimedia, and input and output devices.

The Student Innovation Contest has been held during the UIST conference since 2009 to encourage new interactions on state-of-the-art hardware. The participating students are given a hardware platform to build on; this year, it was Kinoma Create, a JavaScript-powered construction kit that allows makers, professional product designers, and web developers to create personal projects, consumer electronics, and "Internet of Things" prototypes. Contestants demonstrated their creations on household interfaces, and two winners in each of three categories -- Most Creative, Most Useful, and the People’s Choice -- were awarded.

Utilizing Kinoma Create, which came with a built-in touchscreen, WiFi, Bluetooth, a front-facing sensor connector, and a 50-pin rear sensor dock, the KAIST team developed a “smart mop,” transforming the irksome task of cleaning into a fun game. The smart mop identifies target dirt and shows its location on a display built into the handle of the mop. If the user turns on the game mode, points are scored whenever the target dirt is cleaned.

The People’s Choice award was decided by conference attendees, who voted the smart mop their favorite project.

Professor Tek-Jin Nam of the Department of Industrial Design at KAIST, who advised the students, said, “A total of 24 teams from such prestigious universities as Carnegie Mellon University, Georgia Institute of Technology, and the University of Tokyo joined the contest, and we are pleased with the good results. Many people, in fact, praised the integration of creativity and technical excellence our students have shown through the smart mop.”

Team KAIST: pictured from right to left, Sun-Jun Kim, Se-Jin Kim, and Han-Jong Kim

The Smart Mop can clean the floor and offer users a fun game.

2014.11.12 View 12287

KAIST develops TransWall, a transparent touchable display wall

At a busy shopping mall, shoppers walk by store windows to find attractive items to purchase. Through the windows, shoppers can see the products displayed, but may have a hard time imagining doing something beyond just looking, such as touching the displayed items or communicating with sales assistants inside the store. With TransWall, however, window shopping could become more fun and real than ever before.

Woohun Lee, a professor of Industrial Design at KAIST, and his research team have recently developed TransWall, a two-sided, touchable, and transparent display wall that greatly enhances users' interpersonal experiences.

With an incorporated surface transducer, TransWall offers audio and vibrotactile feedback to the users. As a result, people can collaborate via a shared see-through display and communicate with one another by talking or even touching one another through the wall. A holographic screen film is inserted between the sheets of plexiglass, and beam projectors installed on each side of the wall project images that are reflected.

TransWall is touch-sensitive on both sides. Two users standing face-to-face on each side of the wall can touch the same spot at the same time without any physical interference. When this happens, TransWall provides the users with specific visual, acoustic, and vibrotactile experiences, allowing them to feel as if they are touching one another.

Professor Lee said, "TransWall concept enables people to see, hear, or even touch others through the wall while enjoying gaming and interpersonal communication. TransWall can be installed inside buildings, such as shopping centers, museums, and theme parks, for people to have an opportunity to collaborate even with strangers in a natural way."

He further added that "TransWall will be useful in places that require physical isolation for high security and safety, germ-free rooms in hospitals, for example." TransWall will allow patients to interact with family and friends without compromising medical safety.

TransWall was exhibited at the 2014 Conference on Computer-Human Interaction (CHI) held from April 26, 2014 to May 1, 2014 in Toronto, Canada.

YouTube Link:

http://www.youtube.com/watch?v=1QdYC_kOQ_w&list=PLXmuftxI6pTXuyjjrGFlcN5YFTKZinDhK

2014.07.15 View 8212

ACM Interactions: Demo Hour, March and April 2014 Issue

The Association for Computing Machinery (ACM), the largest educational and scientific computing society in the world, publishes a magazine called Interactions bi-monthly. Interactions is the flagship magazine for the ACM’s Special Interest Group on Computer-Human Interaction (SIGCHI) with a global circulation that includes all SIGCHI members.

In its March and April 2014 issue, the Smart E-book was introduced. It was developed by Sangtae Kim, Jaejeung Kim, and Soobin Lee at the Information Technology Convergence in KAIST Institute, KAIST.

For the article, please go to the link or download the .pdf files below:

Interactions, March & April 2014

Demo Hour: Bezel-Flipper

Bezel-Flipper

Interactions_Mar & Apr 2014.pdf

http://interactions.acm.org/archive/view/march-april-2014/demo-hour29

2014.03.28 View 11484

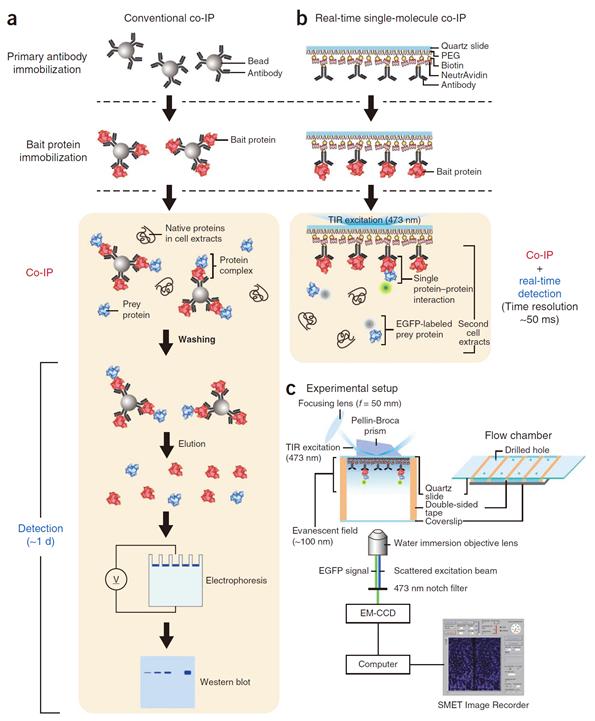

Success in Measuring Protein Interaction at the Molecular Level

Professor Tae Young Yoon

- Successful live observation of the interaction between two proteins at the molecular level
- Measurement limit and time resolution of the immunoprecipitation technique improved a hundred thousand fold

KAIST Department of Physics Professor Tae Young Yoon’s research team has successfully observed the interaction of two proteins live at the molecular level, and the findings were published in the October edition of Nature Protocols.

Professor Yoon’s research team developed a fluorescence microscope that can observe a single molecule. The team combined the immunoprecipitation technique, traditionally used in protein interaction analysis, with the microscope to develop a “live molecular level immunoprecipitation technique”. The team successfully and accurately measured the reaction between two proteins by observing repeated momentary interactions on a timescale of tens of milliseconds.

The existing immunoprecipitation technique required at least one day to detect an interaction between two proteins, and it had limitations in detecting momentary or weak interactions. Quantitative analysis of the results was also difficult, since the readout was the strength of a protein band. The technique could not be used for live observation.

The team aimed to drastically improve the existing technique and to develop an accurate method of measurement at the molecular level. The newly developed technology enables observation of protein interaction within one hour. In addition, the interaction can be measured live, so the protein interaction phenomenon can be studied in depth.

Moreover, every programme used in the experiment was developed and distributed by the research team, securing the core technology and laying the foundation for a global infrastructure.

Professor Tae Young Yoon said, “The newly developed technology does not require additional protein expression or purification. Hence, a very small sample of protein is enough to accurately analyse protein interaction on a kinetic level.” He continued, “Even cancerous protein from the tissue of a cancer patient can be analysed. Thus a platform for customised anti-cancer medicine in the future has been prepared, as well.”

Figure 1. Schematic diagram comparing the existing immunoprecipitation technique and the newly developed live molecular level immunoprecipitation technique

2013.12.11 View 8692
