Brain-inspired Artificial Intelligence in Robots

(from left: PhD candidate Su Jin An, Dr. Jee Hang Lee and Professor Sang Wan Lee)

Research groups at KAIST, the University of Cambridge, Japan’s National Institute for Information and Communications Technology, and Google DeepMind argue that our understanding of how humans make intelligent decisions has now reached a critical point at which robot intelligence can be significantly enhanced by mimicking strategies that the human brain uses when we make decisions in our everyday lives.

In our rapidly changing world, both humans and autonomous robots constantly need to learn and adapt to new environments. The difference is that humans are capable of making decisions according to each unique situation, whereas robots still rely on predetermined data to make decisions.

Despite the rapid progress being made in strengthening the physical capability of robots, their central control systems, which govern how robots decide what to do at any one time, are still inferior to those of humans. In particular, they often rely on pre-programmed instructions to direct their behavior, and lack the hallmark of human behavior, that is, the flexibility and capacity to quickly learn and adapt.

Applying neuroscience to robotics, Professor Sang Wan Lee from the Department of Bio and Brain Engineering at KAIST and Professor Ben Seymour from the University of Cambridge and Japan’s National Institute for Information and Communications Technology made the case that robots should be designed based on the principles of the human brain. They argue that robot intelligence can be significantly enhanced by mimicking strategies that the human brain uses during decision-making processes in everyday life.

Importing human-like intelligence into robots has always been difficult without knowing the computational principles by which the human brain makes decisions, in other words, how to translate brain activity into computer code for the robots’ ‘brains’.

However, researchers now argue that, following a series of recent discoveries in the field of computational neuroscience, there is enough of this code to effectively write it into robots. One of the examples discovered is the human brain’s ‘meta-controller’, a mechanism by which the brain decides how to switch between different subsystems to carry out complex tasks. Another example is the human pain system, which allows humans to protect themselves in potentially hazardous environments. “Copying the brain’s code for these could greatly enhance the flexibility, efficiency, and safety of robots,” Professor Lee said.
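The idea of a meta-controller that switches between subsystems can be illustrated with a small sketch. This is not the researchers' model: the two subsystems ("habitual" and "deliberative"), the reliability update rule, and the prediction-error values are all assumptions chosen for illustration. The sketch only shows one plausible way a controller could hand over control to whichever subsystem has recently been predicting outcomes more accurately.

```python
from dataclasses import dataclass


@dataclass
class Subsystem:
    """A hypothetical decision subsystem whose reliability is tracked over time."""
    name: str
    reliability: float = 0.5  # running estimate in [0, 1]; 0.5 means "unknown"

    def update(self, prediction_error: float, rate: float = 0.2) -> None:
        # Small errors push reliability toward 1, large errors toward 0.
        accuracy = 1.0 - min(abs(prediction_error), 1.0)
        self.reliability += rate * (accuracy - self.reliability)


class MetaController:
    """Chooses which subsystem controls behavior, based on tracked reliability."""

    def __init__(self, subsystems):
        self.subsystems = list(subsystems)

    def select(self) -> Subsystem:
        # max() returns the first maximal element, so ties favor the earlier subsystem.
        return max(self.subsystems, key=lambda s: s.reliability)


habitual = Subsystem("habitual")
deliberative = Subsystem("deliberative")
controller = MetaController([habitual, deliberative])

# Illustrative prediction errors only (assumed values): the habit is accurate
# at first, then the environment changes and its predictions start to fail.
errors = [(0.1, 0.4), (0.1, 0.4), (0.9, 0.2), (0.9, 0.2), (0.9, 0.2)]
choices = []
for habitual_error, deliberative_error in errors:
    habitual.update(habitual_error)
    deliberative.update(deliberative_error)
    choices.append(controller.select().name)

print(choices)
```

In this toy run, control starts with the habitual subsystem and moves to the deliberative one once the habitual predictions become unreliable, which is the kind of flexible switching the article describes.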

The team argued that this inter-disciplinary approach will provide just as many benefits to neuroscience as to robotics. The recent explosion of interest in what lies behind psychiatric disorders such as anxiety, depression, and addiction has given rise to a set of sophisticated theories that are complex and difficult to test without some sort of advanced situation platform.

Professor Seymour explained, “We need a way of modelling the human brain to find how it interacts with the world in real-life to test whether and how different abnormalities in these models give rise to certain disorders. For instance, if we could reproduce anxiety behavior or obsessive-compulsive disorder in a robot, we could then predict what we need to do to treat it in humans.”

The team expects that producing robot models of different psychiatric disorders, in a similar way to how researchers use animal models now, will become a key future technology in clinical research.

The team also stated that there may be other benefits to humans and intelligent robots learning, acting, and behaving in the same way. In future societies in which humans and robots live and work amongst each other, the ability to cooperate and empathize with robots might be much greater if we feel they think like us.

Professor Seymour said, “We might think that having robots with the human traits of being a bit impulsive or overcautious would be a detriment, but these traits are an unavoidable by-product of human-like intelligence. And it turns out that this is helping us to understand human behavior as human.”

The framework for achieving this brain-inspired artificial intelligence was published in two journals, Science Robotics (10.1126/scirobotics.aav2975) on January 16 and Current Opinion in Behavioral Sciences (10.1016/j.cobeha.2018.12.012) on February 6, 2019.

Figure 1. Overview of the neuroscience-robotics approach for decision-making. The figure details key areas for interdisciplinary study (Current Opinion in Behavioral Sciences)

Figure 2. Brain-inspired solutions to robot learning. Neuroscientific views on various aspects of learning and cognition converge and create a new idea called prefrontal metacontrol, which can inspire researchers to design learning agents that can address various key challenges in robotics such as performance-efficiency-speed, cooperation-competition, and exploration-exploitation trade-offs (Science Robotics)

2019.02.20

AI|QC ITRC Opens at KAIST

(from left: Dean of College of Engineering Jong-Hwan Kim, Director of AI|QC ITRC June-Koo Rhee, Vice President for R&DB Heekyung Park and Director General for Industrial Policy Hong Taek Yong)

The Artificial Intelligence|Quantum Computing Information Technology Research Center (AI|QC ITRC) opened at KAIST on October 2.

AI|QC ITRC, established with government funding, is the first institute specializing in quantum computing. Three universities (Seoul National University, Korea University, and Kyung Hee University) and four corporations (KT, Homomicus, Actusnetworks, and Mirae Tech) are jointly participating in the center. Over four years, the institute will receive 3.2 billion KRW in research funds.

Last April, KAIST selected quantum technology as one of its flagship research areas. AI|QC ITRC will dedicate itself to developing quantum computing technology that provides the computability required for human-level artificial intelligence. It will also foster leaders in related industries by introducing industry-academic educational programs in graduate schools.

QC is receiving a great deal of attention for transcending current digital computers in terms of computability. World-class IT companies like IBM, Google, and Intel and ventures including D-Wave, Rigetti, and IonQ are currently leading the industry and investing heavily in securing source technologies.

Starting with the establishment of the ITRC, KAIST will continue to plan strategies to foster the field of QC. KAIST will pursue a two-track strategy: one track is to secure source technology for first-generation QC, and the other is to focus on basic research that can establish an early lead in next-generation QC.

Professor June-Koo Rhee, the director of AI|QC ITRC, said, “I believe that QC will be the imperative technology that enables the realization of the Fourth Industrial Revolution. AI|QC ITRC will foster experts required for domestic academia and industries and build a foundation to disseminate the technology to industries.”

Vice President for R&DB Heekyung Park, Director General for Industrial Policy Hong Taek Yong from the Ministry of Science and ICT, Seung Pyo Hong from the Institute for Information & Communications Technology Promotion, Head of Technology Strategy Jinhyon Youn from KT, and representatives of the participating companies attended and celebrated the opening of the AI|QC ITRC.

2018.10.05

Taming AI: Engineering, Ethics, and Policy

(from left: Professor Lee, Professor Koene, Professor Walsh, and Professor Ema)

Can AI-powered robots be adequate companions for humans? Will the good faith of users and developers help AI-powered robots become the new tribe of the digital future?

AI’s efficiency is creating new socio-economic opportunities in the global market. Despite these opportunities, challenges remain. Some say that efficiency-enforcing algorithms driven by deep learning will eventually take a toll on human dignity and safety, bringing about the disastrous scenarios featured in the Terminator movies.

A research group at the Korean Flagship AI Project for Emotional Digital Companionship at KAIST Institute for AI (KI4AI) and the Fourth Industrial Intelligence Center at KAIST Institute co-hosted a seminar, “Taming AI: Engineering, Ethics, and Policy,” last week to discuss how to employ AI technologies in ways that uphold human values.

The KI4AI has been conducting this flagship project since the end of 2016 with the support of the Ministry of Science and ICT.

The seminar brought together three speakers from Australia, Japan, and the UK to better fathom the implications of the emerging technology from the ethical perspectives of engineering and to discuss policymaking for the responsible use of technology.

Professor Toby Walsh, an anti-autonomous weapon activist from the University of New South Wales in Australia, argued that AI poses a risk of malfunction. He said that an independent ethics committee or group usually monitors academic institutions’ research activities in order to avoid any possible mishaps.

However, he said there is no independent group or committee monitoring the nature of corporations’ engagement with such technologies, while their possible threats against humanity are alleged to be growing. He mentioned that Google’s and Amazon’s information collecting also poses a potent threat. He said that ethical standards similar to academic research integrity should be established to avoid possible restrictions on human dignity and mass destruction. He hoped that KAIST and Google would play a leading role in establishing an international norm on this compelling issue.

Professor Arisa Ema from the University of Tokyo provided very compelling arguments for thinking about the two-sided nature of technology and how technology should serve the public interest without any bias against gender, race, or social stratum. She pointed out that information is dominated by several Western corporations like Google. She said that deep learning algorithms trained on data provided by several Western corporations will create very biased information, applicable only to limited races and classes.

Meanwhile, Professor Ansgar Koene from the University of Nottingham presented the IEEE’s global initiative on the ethics of autonomous and intelligent systems. He shared cases of industry standards and ethically aligned designs made by the IEEE Standards Association. He said more than 250 cross-disciplinary thought leaders from around the world joined to develop ethical guidelines called Ethically Aligned Design (EAD) V2. EAD V2 includes, among others, methodologies to guide ethical research and design and to embed values into autonomous intelligent systems. As the next step beyond EAD V2, the association is now working on the IEEE P70xx Standards Projects, which detail more technical approaches.

Professor Soo Young Lee at KAIST argued that the eventual goal of complete AI is to have human-like emotions, calling it a new paradigm for the relationship between humans and AI-robots. According to Professor Lee, AI-powered robots will serve as good companions for humans. “Especially in aging societies affecting the globe, this will be a very viable and practical option,” he said.

He pointed out, “Kids learn from parents’ morality and social behavior. Users should have AI-robots learn morality as well. Their relationships should be based on good faith and trust, no longer that of master and slave.” He said that liability issues for any mishap will need to be discussed further, but basically each user and developer should take their own responsibility when dealing with these issues.

2018.06.26

Recognizing Seven Different Face Emotions on a Mobile Platform

(Professor Hoi-Jun Yoo)

A KAIST research team succeeded in achieving face emotion recognition on a mobile platform by developing an AI semiconductor IC that processes two neural networks on a single chip.

Professor Hoi-Jun Yoo and his team (primary researcher: Ph.D. student Jinmook Lee) from the School of Electrical Engineering developed a unified deep neural network processing unit (UNPU).

Deep learning is a technology for machine learning based on artificial neural networks, which allows a computer to learn by itself, just like a human.

The developed chip adjusts the weight precision (from 1 bit to 16 bits) of a neural network inside the semiconductor in order to optimize energy efficiency and accuracy. With a single chip, it can process a convolutional neural network (CNN) and a recurrent neural network (RNN) simultaneously. CNNs are used for categorizing and recognizing images, while RNNs are used for time-series information, as in action recognition and speech recognition.
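The trade-off behind adjustable weight precision can be illustrated in software. The sketch below is not the UNPU's actual scheme, which the article does not describe; it assumes a simple uniform, symmetric quantizer and a made-up list of weights, and only shows that fewer bits per weight mean a coarser approximation of the original values. The function names `quantize` and `mean_abs_error` are hypothetical.

```python
def quantize(weights, bits):
    """Uniformly quantize weights to signed levels, then map them back to floats."""
    if not 1 <= bits <= 16:
        raise ValueError("bits must be between 1 and 16")
    # Scale by the largest magnitude; fall back to 1.0 if all weights are zero.
    scale = max(abs(w) for w in weights) or 1.0
    if bits == 1:
        # One bit keeps only the sign (zero is treated as positive).
        return [scale if w >= 0 else -scale for w in weights]
    levels = 2 ** (bits - 1) - 1  # e.g. 127 positive levels for 8 bits
    return [round(w / scale * levels) / levels * scale for w in weights]


def mean_abs_error(original, approx):
    """Average absolute difference between original and quantized weights."""
    return sum(abs(a - b) for a, b in zip(original, approx)) / len(original)


# Made-up example weights, for illustration only.
weights = [0.82, -0.41, 0.05, -0.93, 0.27, 0.60]
for bits in (1, 2, 4, 8, 16):
    error = mean_abs_error(weights, quantize(weights, bits))
    print(f"{bits:2d}-bit weights: mean absolute error = {error:.5f}")
```

For these weights the error shrinks as the bit width grows, while each extra bit costs more storage and arithmetic; a chip that can choose the precision can therefore trade accuracy against energy per task.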

Moreover, the chip can adjust energy efficiency and accuracy dynamically while recognizing objects. To realize mobile AI technology, a chip needs to perform high-speed operations with low energy; otherwise, the battery can run out quickly due to processing massive amounts of information at once. According to the team, this chip has better operation performance than world-class mobile AI chips such as the Google TPU. The energy efficiency of the new chip is 4 times higher than that of the TPU.

In order to demonstrate its high performance, the team installed the UNPU in a smartphone to enable automatic face emotion recognition on the device. This system displays a user’s emotions in real time. The research results for this system were presented at the 2018 International Solid-State Circuits Conference (ISSCC) in San Francisco on February 13.

Professor Yoo said, “We have developed a semiconductor that accelerates with low power requirements in order to realize AI on mobile platforms. We are hoping that this technology will be applied in various areas, such as object recognition, emotion recognition, action recognition, and automatic translation. Within one year, we will commercialize this technology.”

2018.03.09

Sangeun Oh Recognized as a 2017 Google Fellow

Sangeun Oh, a Ph.D. candidate in the School of Computing, was selected as a Google PhD Fellow in 2017. He is one of 47 awardees of the Google PhD Fellowship in the world.

The Google PhD Fellowship is awarded to students showing outstanding performance in computer science and related research fields. Since it was established in 2009, the program has provided various benefits, including scholarships worth 10,000 USD and one-to-one research discussions with mentors from Google.

His research on a mobile system that allows interactions among various kinds of smart devices was recognized in the field of mobile computing. He developed a mobile platform that allows smart devices to share diverse functions, including logins, payments, and sensors. This technology provides numerous user experiences that existing mobile platforms could not offer. Through cross-device functionality sharing, users can utilize multiple smart devices in a more convenient manner. The research was presented at the Annual International Conference on Mobile Systems, Applications, and Services (MobiSys) of the Association for Computing Machinery in July 2017.

Oh said, “I would like to express my gratitude to my advisor, the professors in the School of Computing, and my lab colleagues. I will devote myself to carrying out more research in order to contribute to society.”

His advisor, Insik Shin, a professor in the School of Computing, said, “Being recognized as a Google PhD Fellow is an honor to both the student as well as KAIST. I strongly anticipate and believe that Oh will make the next step by carrying out good quality research.”

2017.09.27

KAIST to Open the Meditation Research Center

KAIST announced that it will open its Meditation Research Center next June. The center will serve as a place for the wellness of the KAIST community as well as for furthering the cognitive sciences and their related convergence studies.

To facilitate the center, KAIST signed an MOU on Aug. 31 with the Foundation Academia Platonica in Seoul, an academy dedicated to enriching the humanities and insight meditation. The Venerable Misan, a Buddhist monk well-known for his ‘Heart Smile Meditation’ program, will head the center.

The center will also conduct convergence research on meditation, covering brain imaging, cognitive behavior, and psychological effects. Building on this research, the center expects to publish textbooks on meditation and distribute them to the public and schools in an effort to widely disseminate the benefits of meditation.

As mindfulness meditation has become mainstream and more extensively studied, growing evidence suggests multiple psychological and physical benefits of mindfulness exercises and similar practices. Mind-body practices like meditation have been shown to reduce the body’s stress response by strengthening the relaxation response and lowering stress hormones.

The Venerable Misan, who holds a Ph.D. in philosophy from Oxford University, also serves as the director of the Sangdo Meditation Center and a professor at Joong-Ang Sangha University, a higher educational institution for Buddhist monks.

Monk Misan said that meditation will play a crucial part in educating creative students with an empathetic mindset. He added, “Hi-tech companies in Silicon Valley such as Google and Intel have long introduced meditation programs for stress management. Such practices will enhance the wellness of employees as well as their working efficiency.”

President Sung-Chul Shin said of the opening of the center, “For many years, institutions abroad, including Harvard, Stanford, and Oxford universities and the Max Planck Institute in Germany, have applied scientific approaches to meditation and established meditation centers. I am pleased to open our own center next year and I believe that it will bring more diverse opportunities for advancing convergent studies in AI and cognitive sciences.”

2017.08.31 View 7514

Why Don't My Document Photos Rotate Correctly?

John, an insurance planner, took several photos of a competitor’s new brochures. At a meeting, he opened a photo gallery to discuss the documents with his colleagues. He found, however, that the photos of the documents had the wrong orientation; they had been rotated 90 degrees clockwise. He then rotated his phone 90 degrees counterclockwise, but the document photos also rotated at the same time. After trying this several times, he realized that it was impossible to display the document photos correctly on his phone. Instead, he had to set his phone down on a table and move his chair to show the photos in the correct orientation. It was very frustrating for John and his colleagues, because the document photos had different patterns of orientation errors.

Professor Uichin Lee and his team at KAIST have identified the key reasons for such orientation errors and proposed novel techniques to solve this problem efficiently. Interestingly, it was due to a software glitch in screen rotation–tracking algorithms, and all smartphones on the market suffer from this error.

When taking a photo of a document, your smartphone generally becomes parallel to the flat surface, as shown in the figure above (right). Professor Lee said, “Your phone fails to track the orientation if you make any rotation changes at that moment.” This is because software engineers designed the rotation tracking software in conventional smartphones with the following assumption: people hold their phones vertically either in portrait or landscape orientations. Orientation tracking can be done by simply measuring the gravity direction using an acceleration sensor in the phone (for example, whether gravity falls into the portrait or landscape direction).
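The conventional gravity-based approach described above can be sketched in a few lines of Python. This is only a minimal illustration, not the code used by any phone vendor or by Professor Lee’s team: the axis convention, the function name `gravity_orientation`, and the 25-degree flatness threshold are all assumptions made for the example. It shows why the method breaks down when the phone lies flat: gravity then falls almost entirely on the z axis, so the x and y readings no longer say anything reliable about portrait versus landscape.

```python
import math


def gravity_orientation(ax, ay, az, flat_threshold_deg=25.0):
    """Classify orientation from one accelerometer reading (m/s^2).

    Assumed (hypothetical) axes: x points right across the screen,
    y points up along the screen, z points out of the screen.
    """
    norm = math.sqrt(ax * ax + ay * ay + az * az)
    if norm == 0:
        return "unknown"
    # Angle between the screen plane and the horizontal; 0 means lying flat.
    tilt_deg = math.degrees(math.acos(min(1.0, abs(az) / norm)))
    if tilt_deg < flat_threshold_deg:
        # Gravity falls onto the screen surface, so x and y are mostly noise.
        return "ambiguous"
    # Otherwise the larger in-plane component decides the orientation.
    return "portrait" if abs(ay) >= abs(ax) else "landscape"


print(gravity_orientation(0.0, 9.81, 0.0))   # phone held upright
print(gravity_orientation(9.81, 0.0, 0.0))   # phone held sideways
print(gravity_orientation(0.3, 0.2, 9.8))    # phone parallel to a table
```

The third call is the document-capturing case: the reading is dominated by the z axis, and a gravity-only tracker has nothing to base its decision on.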

Professor Lee’s team conducted a controlled experiment to discover how often orientation errors happen in document-capturing tasks. Surprisingly, their results showed that landscape document photos had error rates of 93%. Smartphones’ camera apps display the current orientation using a camera-shaped icon, but users are generally unaware of this feature and do not notice its state when they take document photos. This is why we often encounter rotation errors in our daily lives, with no idea of why the errors are occurring.

The team developed a technique that can correct a phone’s orientation by tracking the rotation sensor in a phone. When people take document photos, their smartphones become parallel to the documents on a flat surface. This intention of photographing documents can be easily recognized because gravity falls onto the phone’s surface. The current orientation can then be tracked by monitoring the occurrence of significant rotation.
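The idea in this paragraph can be illustrated with a rough Python sketch. It is not the team’s algorithm, whose details are not given here: the class name `FlatOrientationTracker`, the orientation labels, the 25-degree flatness test, and the 60-degree turn threshold are all hypothetical choices. The sketch only tracks orientation while gravity falls onto the phone’s surface, and it changes the orientation when the accumulated rotation about the screen’s normal axis becomes significant.

```python
import math

# Hypothetical labels, listed in counterclockwise order.
ORIENTATIONS = ["portrait", "landscape-ccw", "upside-down", "landscape-cw"]


class FlatOrientationTracker:
    def __init__(self, start="portrait", flat_deg=25.0, turn_deg=60.0):
        self.index = ORIENTATIONS.index(start)
        self.flat_deg = flat_deg      # assumed flatness threshold
        self.turn_deg = turn_deg      # assumed "significant rotation"
        self.accum_deg = 0.0          # rotation accumulated since last change

    def is_flat(self, ax, ay, az):
        """True when gravity falls mostly onto the phone's surface."""
        norm = math.sqrt(ax * ax + ay * ay + az * az)
        if norm == 0:
            return False
        tilt = math.degrees(math.acos(min(1.0, abs(az) / norm)))
        return tilt < self.flat_deg

    def update(self, accel, gyro_z_dps, dt):
        """accel: (ax, ay, az) in m/s^2; gyro_z_dps: deg/s about z; dt: s."""
        if not self.is_flat(*accel):
            # Not a document-capturing pose: defer to the usual tracker.
            self.accum_deg = 0.0
            return None
        self.accum_deg += gyro_z_dps * dt
        while self.accum_deg >= self.turn_deg:
            self.index = (self.index + 1) % 4
            self.accum_deg -= 90.0
        while self.accum_deg <= -self.turn_deg:
            self.index = (self.index - 1) % 4
            self.accum_deg += 90.0
        return ORIENTATIONS[self.index]


tracker = FlatOrientationTracker()
flat = (0.0, 0.0, 9.81)
for _ in range(10):                    # 10 samples of 90 deg/s for 0.1 s each
    current = tracker.update(flat, 90.0, 0.1)
print(current)
```

Returning `None` outside the flat pose reflects the point made later in the article that the correction only needs to run when the intent to photograph a document is detected, leaving the existing orientation tracking in charge otherwise.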

In addition, the research team discovered that when taking a document photo, the user tends to tilt the phone, just slightly, towards the user (called a “micro-tilt phenomenon”). While the tilting degree is very small—almost indistinguishable to the naked eye—these distinct behavioral cues are enough to train machine-learning models that can easily learn the patterns of gravity distributions across the phone.

The team’s experimental results showed that their algorithms can accurately track phone orientation in document-capturing tasks at 93% accuracy. Their approaches can be readily integrated into both Google Android and Apple iPhones. The key benefits of their proposals are that the correction software works only when the intent of photographing documents is detected, and that it can seamlessly work with existing orientation tracking methods without conflict. The research team even suggested a novel user interface for photographing documents. Just like with photocopiers, the capture interface overlays a document shape onto a viewfinder so that the user can easily double-check possible orientation errors.

Professor Lee said, “Photographing documents is part of our daily activities, but orientation errors are so prevalent that many users have difficulties in viewing their documents on their phones without even knowing why such errors happen.” He added, “We can easily detect users’ intentions to photograph a document and automatically correct orientation changes. Our techniques not only eliminate any inconvenience with orientation errors, but also enable a range of novel applications specifically designed for document capturing.” This work, supported by the Korean Government (MSIP), was published online in the International Journal of Human-Computer Studies in March 2017. In addition, their US patent application was granted in March 2017.

(Photo caption: The team of Professor Lee and his Ph.D. student Jeungmin Oh developed a technique that can correct a phone’s orientation by tracking the rotation sensor in a phone.)

2017.06.27 View 7934

Crowdsourcing-Based Global Indoor Positioning System

The research team of Professor Dong-Soo Han of the School of Computing Intelligent Service Lab at KAIST has developed a system for providing global indoor localization using Wi-Fi signals. The technology uses numerous smartphones to collect fingerprints of location data and label them automatically, significantly reducing the cost of constructing an indoor localization system while maintaining high accuracy.

The method can be used in any building in the world, provided the floor plan is available and there are Wi-Fi fingerprints to collect. To accurately collect and label the location information of the Wi-Fi fingerprints, the research team analyzed indoor space utilization. This led to technology that classified indoor spaces into places used for stationary tasks (resting spaces) and spaces used to reach said places (transient spaces), and utilized separate algorithms to optimally and automatically collect location labelling data.

Years ago, the team implemented a way to automatically label resting space locations from signals collected in various contexts such as homes, shops, and offices via the users’ home or office address information. The latest method allows for the automatic labelling of transient space locations such as hallways, lobbies, and stairs using unsupervised learning, without any additional location information. Testing in KAIST’s N5 building and on the 7th floor of the N1 building showed that the technology can achieve accuracy to within three or four meters given enough training data. The accuracy level is comparable to that of technology using manually labeled location information.
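To make the role of labeled Wi-Fi fingerprints more concrete, the Python sketch below shows how a position might be estimated once a radio map exists. It uses plain nearest-neighbor matching, a common textbook approach, and is not the team’s automatic labelling method. The function name `locate`, the access point names, the coordinates, and the -100 dBm placeholder for unseen access points are all hypothetical.

```python
import math


def locate(radio_map, scan, k=3, missing_dbm=-100.0):
    """Estimate (x, y) by averaging the k most similar fingerprints.

    radio_map: list of ((x, y), {access_point_id: rssi_dbm}) entries.
    scan: {access_point_id: rssi_dbm} measured at the unknown position.
    """
    if not radio_map:
        raise ValueError("radio map is empty")

    def distance(fingerprint):
        # Euclidean distance in signal space; unseen access points are
        # treated as very weak (an assumption of this sketch).
        keys = set(fingerprint) | set(scan)
        return math.sqrt(sum(
            (fingerprint.get(ap, missing_dbm) - scan.get(ap, missing_dbm)) ** 2
            for ap in keys))

    nearest = sorted(radio_map, key=lambda entry: distance(entry[1]))[:k]
    x = sum(pos[0] for pos, _ in nearest) / len(nearest)
    y = sum(pos[1] for pos, _ in nearest) / len(nearest)
    return (x, y)


# Made-up radio map: three labeled fingerprints in one hallway.
radio_map = [
    ((0.0, 0.0), {"ap1": -40.0, "ap2": -70.0}),
    ((10.0, 0.0), {"ap1": -70.0, "ap2": -40.0}),
    ((5.0, 5.0), {"ap1": -55.0, "ap2": -55.0}),
]
print(locate(radio_map, {"ap1": -41.0, "ap2": -69.0}, k=1))
```

The quality of such an estimate depends directly on how many fingerprints carry correct location labels, which is why reducing the cost of labelling matters.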

Google, Microsoft, and other multinational corporations collected tens of thousands of floor plans for their indoor localization projects. Indoor radio map construction was also attempted by the firms but proved more difficult. As a result, existing indoor localization services were often plagued by inaccuracies. In Korea, COEX, Lotte World Tower, and other landmarks provide comparatively accurate indoor localization, but most buildings suffer from the lack of radio maps, preventing indoor localization services.

Professor Han said, “This technology allows the easy deployment of highly accurate indoor localization systems in any building in the world. In the near future, most indoor spaces will be able to provide localization services, just like outdoor spaces.” He further added that smartphone-collected Wi-Fi fingerprints have gone unused and have often been discarded, but should now be treated as invaluable resources that create a new big data field of Wi-Fi fingerprints. This new indoor navigation technology is likely to be valuable to Google, Apple, and other global firms providing indoor positioning services. The technology will also be valuable for helping domestic firms provide positioning services.

Professor Han added that “the new global indoor localization system deployment technology will be added to KAILOS, KAIST’s indoor localization system.” KAILOS was released in 2014 as KAIST’s open platform for indoor localization service, allowing anyone in the world to add floor plans to KAILOS, and collect the building’s Wi-Fi fingerprints for a universal indoor localization service. As localization accuracy improves in indoor environments, despite the absence of GPS signals, applications such as location-based SNS, location-based IoT, and location-based O2O are expected to take off, leading to various improvements in convenience and safety. Integrated indoor-outdoor navigation services are also visible on the horizon, fusing vehicular navigation technology with indoor navigation.

Professor Han’s research was published in IEEE Transactions on Mobile Computing (TMC) in November 2016.

For more, please visit http://ieeexplore.ieee.org/stamp/stamp.jsp?arnumber=7349230 and http://ieeexplore.ieee.org/document/7805133/

2017.04.06 View 11100

Mystery of Biological Plastic Synthesis Machinery Unveiled

Plastics and other polymers are used every day. These polymers are mostly made from fossil resources by refining petrochemicals. On the other hand, many microorganisms naturally synthesize polyesters known as polyhydroxyalkanoates (PHAs) as distinct granules inside cells.

PHAs are a family of microbial polyesters that have attracted much attention as biodegradable and biocompatible plastics and elastomers that can substitute for petrochemical counterparts. There have been numerous papers and patents on gene cloning and metabolic engineering of PHA biosynthetic machineries, biochemical studies, and production of PHAs; a simple Google search for “polyhydroxyalkanoates” returned 223,000 document pages. PHAs have always been considered amazing examples of biological polymer synthesis. It is astounding that PHAs of 500 kDa to sometimes as high as 10,000 kDa can be synthesized in vivo by PHA synthase, the key polymerizing enzyme in PHA biosynthesis. Researchers have shown great interest in determining the crystal structure of PHA synthase over the last 30 years, but unfortunately without success. Thus, the characteristics and molecular mechanisms of PHA synthase remained under a dark veil.

In two papers published back-to-back in Biotechnology Journal online on November 30, 2016, a Korean research team led by Professor Kyung-Jin Kim at Kyungpook National University and Distinguished Professor Sang Yup Lee at the Korea Advanced Institute of Science and Technology (KAIST) described the crystal structure of PHA synthase from Ralstonia eutropha, the best studied bacterium for PHA production, and reported the structural basis for the detailed molecular mechanisms of PHA biosynthesis. The crystal structure was deposited in the Protein Data Bank in February 2016. After deciphering the crystal structure of the catalytic domain of PHA synthase, in addition to other structural studies on the whole enzyme and related proteins, the research team also performed experiments to elucidate the mechanisms of the enzyme reaction, validate the detailed structures, engineer the enzyme, and study the N-terminal domain, among others.

Through several biochemical studies based on crystal structure, the authors show that PHA synthase exists as a dimer and is divided into two distinct domains, the N-terminal domain (RePhaC1ND) and the C-terminal domain (RePhaC1CD). The RePhaC1CD catalyzes the polymerization reaction via a non-processive ping-pong mechanism using a Cys-His-Asp catalytic triad. The two catalytic sites of the RePhaC1CD dimer are positioned 33.4 Å apart, suggesting that the polymerization reaction occurs independently at each site. This study also presents the structure-based mechanisms for substrate specificities of various PHA synthases from different classes.

Professor Sang Yup Lee, who has worked on this topic for more than 20 years, said,

“The results and information presented in these two papers have long been awaited not only in the PHA community, but also metabolic engineering, bacteriology/microbiology, and in general biological sciences communities. The structural information on PHA synthase together with the recently deciphered reaction mechanisms will be valuable for understanding the detailed mechanisms of biosynthesizing this important energy/redox storage material, and also for the rational engineering of PHA synthases to produce designer bioplastics from various monomers more efficiently.”

Indeed, these two papers published in Biotechnology Journal finally unveil the 30-year mystery of the machinery of biological polyester synthesis, and will serve as an essential compass for creating designer and more efficient bioplastic machineries.

References:

Jieun Kim, Yeo-Jin Kim, So Young Choi, Sang Yup Lee and Kyung-Jin Kim. “Crystal structure of Ralstonia eutropha polyhydroxyalkanoate synthase C-terminal domain and reaction mechanisms” Biotechnology Journal DOI: 10.1002/biot.201600648

http://onlinelibrary.wiley.com/doi/10.1002/biot.201600648/abstract

Yeo-Jin Kim, So Young Choi, Jieun Kim, Kyeong Sik Jin, Sang Yup Lee and Kyung-Jin Kim. “Structure and function of the N-terminal domain of Ralstonia eutropha polyhydroxyalkanoate synthase, and the proposed structure and mechanisms of the whole enzyme” Biotechnology Journal DOI: 10.1002/biot.201600649

http://onlinelibrary.wiley.com/doi/10.1002/biot.201600649/abstract

2016.12.02 View 12048

Dr. Demis Hassabis, the Developer of AlphaGo, Lectures at KAIST

AlphaGo, a computer program developed by Google DeepMind in London to play the traditional Chinese board game Go, played five matches against Se-Dol Lee, a professional Go player in Korea, from March 8 to 15, 2016. AlphaGo won four out of the five games, a significant test result showcasing the advancement achieved in the field of general-purpose artificial intelligence (GAI), according to the company.

Dr. Demis Hassabis, the Chief Executive Officer of Google DeepMind, visited KAIST on March 11, 2016 and gave an hour-long talk to students and faculty. In the lecture, which was entitled “Artificial Intelligence and the Future,” he introduced an overview of GAI and some of its applications in Atari video games and Go.

He said that the ultimate goal of GAI was to become a useful tool to help society solve some of the biggest and most pressing problems facing humanity, from climate change to disease diagnosis.

2016.03.11 View 5303

K-Glass 3 Offers Users a Keyboard to Type Text

KAIST researchers upgraded their smart glasses with a low-power multicore processor to employ stereo vision and deep-learning algorithms, making the user interface and experience more intuitive and convenient.

K-Glass, the augmented reality (AR) smart glasses first developed by KAIST in 2014, with a second version released in 2015, is back with an even stronger model. The latest version, which KAIST researchers are calling K-Glass 3, allows users to text a message or type in keywords for Internet surfing by offering a virtual keyboard for text and even one for a piano.

Currently, most wearable head-mounted displays (HMDs) suffer from a lack of rich user interfaces, short battery lives, and heavy weight. Some HMDs, such as Google Glass, use a touch panel and voice commands as an interface, but they are considered merely an extension of smartphones and are not optimized for wearable smart glasses. Recently, gaze recognition was proposed for HMDs including K-Glass 2, but gaze cannot be realized as a natural user interface (UI) and experience (UX) due to its limited interactivity and lengthy gaze-calibration time, which can be up to several minutes.

As a solution, Professor Hoi-Jun Yoo and his team from the Electrical Engineering Department recently developed K-Glass 3 with a low-power natural UI and UX processor. This processor is composed of a pre-processing core to implement stereo vision, seven deep-learning cores to accelerate real-time scene recognition within 33 milliseconds, and one rendering engine for the display.

The stereo-vision camera, located on the front of K-Glass 3, works in a manner similar to three-dimensional (3D) sensing in human vision. The camera’s two lenses, displaced horizontally from one another like the left and right eyes, take pictures of the same objects or scenes, and the two slightly different images are combined to extract spatial depth information, which is necessary to reconstruct 3D environments. The camera’s vision algorithm consumes 20 milliwatts on average, allowing it to operate in the Glass for more than 24 hours without interruption.
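The depth-extraction idea described above can be illustrated with the standard relation for two rectified, horizontally offset cameras: depth = focal length × baseline / disparity. The article does not say which stereo algorithm K-Glass 3 uses, so the following is only a minimal sketch of the general principle; the function name, focal length, and baseline are hypothetical values chosen for illustration, not K-Glass 3 specifications.

```python
def depth_from_disparity(disparity_px: float,
                         focal_length_px: float,
                         baseline_m: float) -> float:
    """Depth (meters) of a point seen by two rectified, horizontally offset cameras.

    disparity_px    -- horizontal shift of the point between left and right images
    focal_length_px -- camera focal length expressed in pixels
    baseline_m      -- distance between the two lenses in meters
    """
    if disparity_px <= 0:
        # Zero disparity corresponds to a point at infinity; negative is invalid.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical camera parameters (assumptions, not K-Glass 3 values).
FOCAL_LENGTH_PX = 700.0
BASELINE_M = 0.06

for disparity in (10.0, 20.0, 40.0):
    depth = depth_from_disparity(disparity, FOCAL_LENGTH_PX, BASELINE_M)
    print(f"disparity {disparity:5.1f} px -> depth {depth:.2f} m")
```

A larger disparity means the object is closer to the camera, which is why a nearby hand produces a stronger depth signal than a distant background.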

The research team adopted deep-learning multi-core technology dedicated to mobile devices. This technology has greatly improved the Glass’s recognition accuracy with images and speech, while shortening the time needed to process and analyze data. In addition, the Glass’s multi-core processor is advanced enough to become idle when it detects no motion from users. When it does detect motion, it executes complex deep-learning algorithms with minimal power to achieve high performance.

Professor Yoo said, “We have succeeded in fabricating a low-power multi-core processor that consumes only 126 milliwatts of power with a high efficiency rate. It is essential to develop a smaller, lighter, and low-power processor if we want to incorporate the widespread use of smart glasses and wearable devices into everyday life. K-Glass 3’s more intuitive UI and convenient UX permit users to enjoy enhanced AR experiences such as a keyboard or a better, more responsive mouse.”

Along with the research team, UX Factory, a Korean UI and UX developer, participated in the K-Glass 3 project.

The research results, entitled “A 126.1mW Real-Time Natural UI/UX Processor with Embedded Deep-Learning Core for Low-Power Smart Glasses” (lead author: Seong-Wook Park, a doctoral student in the Electrical Engineering Department, KAIST), were presented at the 2016 IEEE (Institute of Electrical and Electronics Engineers) International Solid-State Circuits Conference (ISSCC), which took place January 31-February 4, 2016 in San Francisco, California.

YouTube Link: https://youtu.be/If_anx5NerQ

Figure 1: K-Glass 3

K-Glass 3 is equipped with a stereo camera, dual microphones, a WiFi module, and eight batteries to offer higher recognition accuracy and better augmented reality experiences than previous models.

Figure 2: Architecture of the Low-Power Multi-Core Processor

K-Glass 3’s processor is designed to include several cores for pre-processing, deep-learning, and graphic rendering.

Figure 3: Virtual Text and Piano Keyboard

K-Glass 3 can detect hands and recognize their movements to provide users with such augmented reality applications as a virtual text or piano keyboard.

2016.02.26 View 14818 -

An App to Digitally Detox from Smartphone Addiction: Lock n’ LoL

KAIST researchers have developed an application that helps people restrain themselves from using smartphones during meetings or social gatherings.

The app’s group limit mode pushes users to curtail their smartphone usage through peer pressure while offering the flexibility to use the phone in an emergency.

When a fake phone company released its line of products, NoPhones, a thin, rectangular-shaped plastic block that looked just like a smartphone but did not function, many doubted that the simulated smartphones would find any users. Surprisingly, close to 4,000 fake phones were sold to consumers who wanted to curb their phone usage.

As smartphones penetrate every facet of our daily lives, a growing number of people have expressed concern about distractions or even the addictions they suffer from overusing smartphones.

Professor Uichin Lee of the Department of Knowledge Service Engineering at the Korea Advanced Institute of Science and Technology (KAIST) and his research team have recently introduced a solution to this problem by developing an application, Lock n’ LoL (Lock Your Smartphone and Laugh Out Loud), to help people lock their smartphones altogether and keep them from using the phone while engaged in social activities such as meetings, conferences, and discussions.

Researchers note that the overuse of smartphones often results from users’ habitual checking of messages, emails, or other online content such as status updates on social networking services (SNS). External stimuli such as notification alarms add to smartphone distractions and interruptions in group interactions.

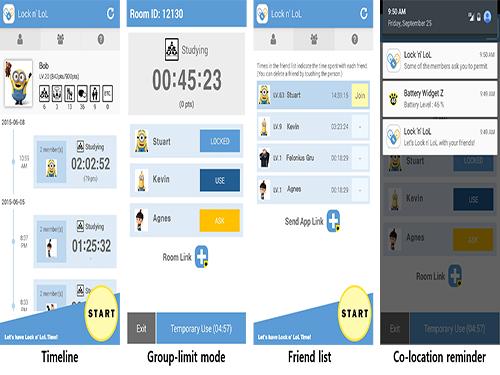

Lock n’ LoL allows users to create a new room or join an existing room. The users then invite meeting participants or friends to the room and share its ID with them to enact the Group Limit (lock) mode. When phones are in the lock mode, all alarms and notifications are automatically muted, and users must ask permission to unlock their phones. However, in an emergency, users can access their phones for a cumulative five minutes in a temporary unlimit mode.

In addition, the app’s Co-location Reminder detects and lists nearby users to encourage app users to limit their phone use. Lock n’ LoL also displays key statistics for monitoring users’ behavior, such as the current week’s total limit time, the weekly average usage time, top friends ranked by time spent together, and top activities in which the users participated.
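The Group Limit mode described above, with its muted notifications and cumulative five-minute emergency allowance, can be sketched as a small state object. This is an illustrative model only, not the app’s actual implementation: the class name, method names, and room ID are hypothetical, and only the five-minute budget and the muting behavior come from the description.

```python
from dataclasses import dataclass

# Cumulative emergency allowance described in the article: five minutes.
EMERGENCY_ALLOWANCE_S = 5 * 60


@dataclass
class LimitSession:
    """Hypothetical model of one user's state inside a Group Limit room."""
    room_id: str
    locked: bool = False
    emergency_used_s: int = 0

    def start(self) -> None:
        """Enter the lock mode for this room."""
        self.locked = True

    def notifications_muted(self) -> bool:
        """Alarms and notifications are muted while the phone is locked."""
        return self.locked

    def emergency_remaining_s(self) -> int:
        """Seconds of temporary access still available in this session."""
        return max(0, EMERGENCY_ALLOWANCE_S - self.emergency_used_s)

    def use_emergency(self, seconds: int) -> int:
        """Request temporary access; returns the seconds actually granted."""
        if not self.locked:
            raise RuntimeError("emergency access only applies in lock mode")
        if seconds < 0:
            raise ValueError("seconds must be non-negative")
        granted = min(seconds, self.emergency_remaining_s())
        self.emergency_used_s += granted
        return granted


session = LimitSession(room_id="room-42")  # hypothetical room ID
session.start()
print(session.use_emergency(180))  # first request fits within the budget
print(session.use_emergency(180))  # second request is capped by what remains
print(session.emergency_remaining_s())
```

Tracking the allowance as a running total, rather than per request, is what makes the limit cumulative: several short emergency uses draw from the same five-minute budget.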

Professor Lee said, “We conducted the Lock n’ LoL campaign throughout the campus for one month this year with 1,000 students participating. As a result, we discovered that students accumulated more than 10,000 free hours from using the app on their smartphones. The students said that they were able to focus more on their group activities. In an age of the Internet of Things, we expect that the adverse effects of mobile distractions and addictions will emerge as a social concern, and our Lock n’ LoL is a key effort to address this issue.”

He added, “This app will certainly help family members to interact more with each other during the holiday season.”

The Lock n’ LoL is available for free download on the App Store and Google Play: https://itunes.apple.com/lc/app/lock-n-lol/id1030287673?mt=8.

YouTube link: https://youtu.be/1wY2pI9qFYM

Figure 1: User Interfaces of Lock n’ LoL

This shows the final design of Lock n’ LoL, which consists of three tabs: My Info, Friends, and Group Limit Mode. Users can activate the limit mode by clicking the start button at the bottom of the screen.

Figure 2: Statistics of Field Deployment

This shows the deployment summary of the Lock n’ LoL campaign in May 2015.

2015.12.17 View 11226

An App to Digitally Detox from Smartphone Addiction: Lock n' LOL

KAIST researchers have developed an application that helps people restrain themselves from using smartphones during meetings or social gatherings.

The app’s group limit mode enforces users to curtail their smartphone usage through peer-pressure while offering flexibility to use the phone in an emergency.

When a fake phone company released its line of products, NoPhones, a thin, rectangular-shaped plastic block that looked just like a smartphone but did not function, many doubted that the simulated smartphones would find any users. Surprisingly, close to 4,000 fake phones were sold to consumers who wanted to curb their phone usage.

As smartphones penetrate every facet of our daily lives, a growing number of people have expressed concern about distractions or even the addictions they suffer from overusing smartphones.