
KAIST Develops ‘Real-Time Programmable Robotic Sheet’ That Can Grasp and Walk on Its Own

<(From left) Prof. Inkyu Park from KAIST, Prof. Yongrok Jeong from Kyungpook National University, Dr. Hyunkyu Park from KAIST and Prof. Jung Kim from KAIST>

Folding structures are widely used in robot design as an intuitive and efficient shape-morphing mechanism, with applications explored in space and aerospace robots, soft robots, and foldable grippers (hands). However, existing folding mechanisms have fixed hinges and folding directions, requiring redesign and reconstruction every time the environment or task changes. A Korean research team has now developed a “field-programmable robotic folding sheet” that can be programmed in real time according to its surroundings, significantly enhancing robots’ shape-morphing capabilities and opening new possibilities in robotics.

KAIST (President Kwang Hyung Lee) announced on August 6 that Professors Jung Kim and Inkyu Park of the Department of Mechanical Engineering have developed the foundational technology for a “field-programmable robotic folding sheet” that enables real-time shape programming.

The technology successfully applies the concept of “field-programmability” to foldable structures. It proposes an integrated material technology and programming methodology that instantly reflects user commands, such as where to fold, in which direction, and by how much, in the material's shape in real time.

The robotic sheet consists of a thin and flexible polymer substrate embedded with a micro metal resistor network. These metal resistors simultaneously serve as heaters and temperature sensors, allowing the system to sense and control its folding state without any external devices.
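Because each embedded resistor doubles as heater and thermometer, the controller can read temperature from the same element it drives. The sketch below assumes a simple linear temperature-coefficient-of-resistance (TCR) model, R = R0(1 + α(T - T0)); R0, α, and the drive current are hypothetical values, not figures from the paper.

```python
# Hypothetical parameters for one embedded metal resistor (not from the paper).
R0 = 100.0      # resistance in ohms at the reference temperature
T0 = 25.0       # reference temperature, deg C
ALPHA = 0.0039  # temperature coefficient of resistance, 1/K (assumed)

def resistance_at(temp_c: float) -> float:
    """Linear TCR model: R(T) = R0 * (1 + alpha * (T - T0))."""
    return R0 * (1.0 + ALPHA * (temp_c - T0))

def temperature_from(voltage: float, current: float) -> float:
    """Sensing: Ohm's law gives R = V / I, then invert the TCR model for T."""
    resistance = voltage / current
    return T0 + (resistance / R0 - 1.0) / ALPHA

def heating_power(voltage: float, current: float) -> float:
    """Actuation: the same current dissipates Joule heat P = V * I."""
    return voltage * current

# At 85 deg C the model gives R = 100 * (1 + 0.0039 * 60) = 123.4 ohms.
current = 0.05                       # 50 mA drive current (assumed)
voltage = resistance_at(85.0) * current
print(round(temperature_from(voltage, current), 2))  # 85.0
print(round(heating_power(voltage, current), 2))     # 0.31
```

In a real sheet the TCR is usually calibrated per resistor rather than assumed, since fabrication spread shifts both R0 and α.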

Using software that combines genetic algorithms and deep neural networks, the user enters the desired folding locations, directions, and intensities. The sheet then autonomously repeats heating and cooling cycles until it precisely reaches the desired shape.
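The article does not describe how the genetic algorithm and the neural network divide the work. One plausible arrangement, assumed here purely for illustration, is a genetic algorithm that searches per-hinge heating commands while a trained network predicts the resulting fold angles. In this sketch a hand-written `surrogate` function (hypothetical, including its crosstalk term) stands in for that network, and the target angles are made-up user inputs.

```python
import random
from typing import List, Tuple

N_HINGES = 6
# Hypothetical user request: fold angle (degrees) at each hinge; the sign
# encodes the folding direction.
TARGET = [0.0, 60.0, 0.0, -45.0, 0.0, 30.0]

def surrogate(genome: List[float]) -> List[float]:
    """Toy stand-in for a trained network: maps per-hinge heating commands
    in [-1, 1] to predicted fold angles, with made-up crosstalk from neighbours."""
    angles = []
    for i, g in enumerate(genome):
        left = genome[i - 1] if i > 0 else 0.0
        right = genome[i + 1] if i < len(genome) - 1 else 0.0
        angles.append(90.0 * g + 10.0 * (left + right))
    return angles

def error(genome: List[float]) -> float:
    """Sum of squared differences between predicted and requested angles."""
    return sum((p - t) ** 2 for p, t in zip(surrogate(genome), TARGET))

def evolve(pop_size: int = 40, generations: int = 200,
           sigma: float = 0.1, seed: int = 0) -> Tuple[List[float], List[float]]:
    rng = random.Random(seed)
    pop = [[rng.uniform(-1, 1) for _ in range(N_HINGES)] for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        pop.sort(key=error)
        history.append(error(pop[0]))        # best error in this generation
        elite = pop[: pop_size // 4]          # elitism: keep the best quarter as-is
        children = []
        while len(elite) + len(children) < pop_size:
            a, b = rng.sample(elite, 2)
            cut = rng.randrange(1, N_HINGES)  # one-point crossover
            child = a[:cut] + b[cut:]
            # Gaussian mutation, clipped to the valid command range
            child = [min(1.0, max(-1.0, g + rng.gauss(0.0, sigma))) for g in child]
            children.append(child)
        pop = elite + children
    pop.sort(key=error)
    return pop[0], history

best, history = evolve()
print(f"best squared error: {history[0]:.1f} -> {error(best):.3f}")
```

Copying the elite unchanged guarantees that the best error never gets worse from one generation to the next, which is why `history` is non-increasing.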

In particular, closed-loop control of the temperature distribution improves real-time folding precision, compensates for environmental changes, and shortens the traditionally slow response time of heat-based folding technologies.
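The article does not say which control law the sheet uses. As an illustrative substitute, this sketch closes the loop with a proportional-integral (PI) controller on a lumped first-order thermal model of one hinge; all constants are assumed, and the ambient drop at t = 30 s stands in for an environmental change the loop must reject.

```python
from typing import List, Tuple

# Hypothetical lumped thermal model of one hinge (all values assumed).
C_TH = 0.05          # heat capacity, J/K
R_TH = 100.0         # thermal resistance to ambient, K/W (time constant 5 s)
P_MAX = 1.0          # maximum heater power, W
KP, KI = 0.05, 0.02  # PI gains in W/K and W/(K*s), hand-tuned for this toy
DT = 0.01            # control period, s

def simulate(setpoint: float = 80.0, t_end: float = 60.0) -> List[Tuple[float, float, float]]:
    """Return (time, temperature, heater power) samples of the closed loop."""
    temp, integ = 25.0, 0.0
    log = []
    for k in range(int(round(t_end / DT))):
        t = k * DT
        ambient = 25.0 if t < 30.0 else 15.0   # disturbance: surroundings cool at 30 s
        err = setpoint - temp                   # temperature as read back from the resistor
        unsat = KP * err + integ
        power = min(P_MAX, max(0.0, unsat))     # the heater can only heat, up to P_MAX
        # Anti-windup: freeze the integrator while saturated in the error's direction
        if not ((unsat >= P_MAX and err > 0) or (unsat <= 0.0 and err < 0)):
            integ += KI * err * DT
        # Forward-Euler step of C dT/dt = P - (T - T_amb) / R
        temp += DT / C_TH * (power - (temp - ambient) / R_TH)
        log.append((t, temp, power))
    return log

log = simulate()
print(f"T just before disturbance: {log[2999][1]:.2f} C, at end: {log[-1][1]:.2f} C")
```

The integral term is what removes the steady-state error after the ambient temperature changes; a proportional-only loop would settle somewhat below the setpoint.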

The ability to program shapes in real time enables a wide variety of robotic functions to be implemented on the fly, without the need for complex hardware redesign.

To demonstrate this, the research team built an adaptive robotic hand (gripper) that uses a single material yet changes its grasping strategy to suit objects of various shapes. They also placed the same robotic sheet on the ground and had it walk or crawl, showcasing bioinspired locomotion strategies. This points toward environmentally adaptive autonomous robots that can change their form in response to their surroundings.

Professor Jung Kim stated, “This study brings us a step closer to realizing ‘morphological intelligence,’ a concept where shape itself embodies intelligence and enables smart motion. In the future, we plan to evolve this into a next-generation physical AI platform with applications in disaster-response robots, customized medical assistive devices, and space exploration tools—by improving materials and structures for greater load support and faster cooling, and expanding to electrode-free, fully integrated designs of various forms and sizes.”

This research, co-led by Dr. Hyunkyu Park (currently at Samsung Advanced Institute of Technology, Samsung Electronics) and Professor Yongrok Jeong (currently at Kyungpook National University), was published in the August 2025 online edition of the international journal Nature Communications.

※ Paper title: Field-programmable robotic folding sheet

※ DOI: 10.1038/s41467-025-61838-3

This research was supported by the National Research Foundation of Korea (Ministry of Science and ICT). (RS-2021-NR059641, 2021R1A2C3008742)

Video file: https://drive.google.com/file/d/18R0oW7SJVYH-gd1Er_S-9Myar8dm8Fzp/view?usp=sharing

2025.08.06


Vulnerability Found: One Packet Can Paralyze Smartphones

<(From left) Professor Yongdae Kim, PhD candidate Tuan Dinh Hoang, and PhD candidate Taekkyung Oh from KAIST; Professor CheolJun Park from Kyung Hee University; and Professor Insu Yun from KAIST>

Smartphones must stay connected to mobile networks at all times to function properly, and the component that provides this constant connectivity is the communication modem (baseband) inside the device. Using 'LLFuzz (Lower Layer Fuzz),' a testing framework they developed themselves, KAIST researchers have discovered security vulnerabilities in the lower layers of smartphone communication modems and demonstrated the need to standardize 'mobile communication modem security testing.'

*Standardization: In mobile communication, conformance testing, which verifies correct operation under normal conditions, has been standardized. However, no standards yet exist for how abnormal packets should be handled, hence the need for standardized security testing.

Professor Yongdae Kim's team from the School of Electrical Engineering at KAIST, in joint research with Professor CheolJun Park's team at Kyung Hee University, announced on July 25 that they have discovered critical security vulnerabilities in the lower layers of smartphone communication modems. These vulnerabilities can disable a smartphone's communication with just a single manipulated wireless packet (a unit of data transmitted over a network). They are especially severe because they could potentially lead to remote code execution (RCE).

The research team used LLFuzz to analyze the modem's lower-layer state transitions and error-handling logic. By comparing state machines based on the 3GPP* standards with the actual responses of devices, LLFuzz precisely pinpointed vulnerabilities caused by implementation errors.
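LLFuzz's actual implementation is described in the paper and is not reproduced here. This sketch only illustrates the general idea of differential testing against a reference state machine: each test input has an expected reaction derived from the specification, and any observed deviation, including no reaction at all, is flagged. The states, inputs, and the `buggy_modem` stand-in are hypothetical.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical, heavily simplified reference model: (state, input) -> expected reaction.
# Real 3GPP lower-layer state machines are far richer; these labels are illustrative.
SPEC: Dict[Tuple[str, str], str] = {
    ("CONNECTED", "valid_mac_pdu"): "deliver",
    ("CONNECTED", "unknown_lcid"): "discard",
    ("CONNECTED", "length_exceeds_pdu"): "discard",
    ("CONNECTED", "rlc_sn_out_of_window"): "discard",
}

def find_deviations(observe: Callable[[str], Optional[str]],
                    state: str = "CONNECTED") -> List[Tuple[str, str, Optional[str]]]:
    """Send each test input, compare the observed reaction with the reference
    model, and report every mismatch (None means no reaction, e.g. a crash)."""
    deviations = []
    for (s, test_input), expected in SPEC.items():
        if s != state:
            continue
        observed = observe(test_input)
        if observed != expected:
            deviations.append((test_input, expected, observed))
    return deviations

def buggy_modem(test_input: str) -> Optional[str]:
    """Stand-in for an over-the-air device under test, with one injected bug."""
    if test_input == "length_exceeds_pdu":
        return None          # the modem stops responding: a crash candidate
    return "deliver" if test_input == "valid_mac_pdu" else "discard"

print(find_deviations(buggy_modem))
# [('length_exceeds_pdu', 'discard', None)]
```

The value of this design is that the oracle comes from the specification rather than from crash detection alone, so silent misbehavior (accepting a PDU that should be discarded) is caught as well as outright crashes.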

*3GPP: An international collaborative organization that creates global mobile communication standards.

The research team conducted experiments on 15 commercial smartphones from global manufacturers, including Apple, Samsung Electronics, Google, and Xiaomi, and discovered a total of 11 vulnerabilities. Among these, seven were assigned official CVE (Common Vulnerabilities and Exposures) numbers, and manufacturers applied security patches for these vulnerabilities. However, the remaining four have not yet been publicly disclosed.

While previous security research focused mainly on the higher layers of mobile communication, such as NAS (Non-Access Stratum) and RRC (Radio Resource Control), the research team concentrated on the error-handling logic of the lower layers, which manufacturers have often neglected.

These vulnerabilities reside in the lower layers of the communication modem (RLC, MAC, PDCP, PHY*). Because these layers are structurally not protected by encryption or authentication, malfunctions could be induced simply by injecting external signals.

*RLC, MAC, PDCP, PHY: Lower layers of LTE/5G communication, responsible for wireless resource allocation, error control, encryption, and physical layer transmission.

The research team released a demo video in which a manipulated wireless packet (a malformed MAC packet), generated on a laptop and transmitted through a Software-Defined Radio (SDR) device, was injected into commercial smartphones, and the smartphone's communication modem (baseband) crashed immediately.
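The article does not say which MAC field the malformed packet abused, so the following is only a generic illustration of this bug class. It parses a simplified LTE-style MAC PDU header, loosely modeled on the subheader layout in 3GPP TS 36.321 (R/R/E/LCID, followed by an F/L length field for every subheader except the last), and rejects a declared length that runs past the end of the buffer instead of trusting it. MAC control elements and padding are deliberately ignored.

```python
from typing import List, Optional, Tuple

class MalformedPdu(ValueError):
    """Raised instead of reading past the end of a malformed PDU."""

def parse_mac_pdu(pdu: bytes) -> List[Tuple[int, bytes]]:
    """Split a simplified LTE-style MAC PDU into (LCID, SDU) pairs.

    Each subheader byte is R/R/E/LCID. If E = 1, another subheader follows and
    this one carries a length field: F = 0 -> 7-bit L, F = 1 -> 15-bit L.
    The last subheader (E = 0) has no length; its SDU fills the rest of the PDU.
    """
    headers: List[Tuple[int, Optional[int]]] = []
    pos = 0
    while True:
        if pos >= len(pdu):
            raise MalformedPdu("truncated subheader")
        b0 = pdu[pos]
        ext, lcid = (b0 >> 5) & 0x1, b0 & 0x1F
        pos += 1
        if not ext:                                  # last subheader
            headers.append((lcid, None))
            break
        if pos >= len(pdu):
            raise MalformedPdu("missing length field")
        if pdu[pos] & 0x80:                          # F = 1: 15-bit length
            if pos + 1 >= len(pdu):
                raise MalformedPdu("truncated 15-bit length")
            length = ((pdu[pos] & 0x7F) << 8) | pdu[pos + 1]
            pos += 2
        else:                                        # F = 0: 7-bit length
            length = pdu[pos] & 0x7F
            pos += 1
        headers.append((lcid, length))

    sdus = []
    for lcid, declared in headers:
        length = len(pdu) - pos if declared is None else declared
        # The bounds check a fragile parser might omit: never trust L blindly.
        if pos + length > len(pdu):
            raise MalformedPdu(f"LCID {lcid}: length {length} exceeds PDU")
        sdus.append((lcid, pdu[pos:pos + length]))
        pos += length
    return sdus

good = bytes([0x23, 0x02, 0x04, 0xAA, 0xBB, 0xCC, 0xDD, 0xEE])
print(parse_mac_pdu(good))  # [(3, b'\xaa\xbb'), (4, b'\xcc\xdd\xee')]

bad = bytes([0x23, 0x7F, 0x04, 0xAA])  # first subheader claims 127 bytes
try:
    parse_mac_pdu(bad)
except MalformedPdu as exc:
    print("rejected:", exc)
```

A parser that skipped the final bounds check would copy 127 bytes from a 4-byte buffer; in C firmware that is an out-of-bounds read or write rather than a clean exception.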

※ Experiment video: https://drive.google.com/file/d/1NOwZdu_Hf4ScG7LkwgEkHLa_nSV4FPb_/view?usp=drive_link

The video shows data being transferred normally at 23MB per second on the fast.com page; immediately after the manipulated packet is injected, the transfer stops and the mobile signal disappears. This clearly demonstrates that a single wireless packet can cripple the communication modem of a commercial device.

The vulnerabilities were found in the modem chip, the core smartphone component responsible for calls, text messages, and data communication. The affected vendors and representative CVEs are:

Qualcomm: Affects over 90 chipsets, including CVE-2025-21477, CVE-2024-23385.

MediaTek: Affects over 80 chipsets, including CVE-2024-20076, CVE-2024-20077, CVE-2025-20659.

Samsung: CVE-2025-26780 (affects recent chipsets such as the Exynos 2400 and 5400).

Apple: CVE-2024-27870 (the same underlying vulnerability as one of the Qualcomm CVEs).

These modem chips are used not only in premium smartphones but also in low-end smartphones, tablets, smartwatches, and IoT devices, so the potential for harm to users is widespread.

Furthermore, in an experimental test of the 5G lower layers, the research team found two vulnerabilities in just two weeks. Because such 5G checks have rarely been carried out, many more vulnerabilities may exist in the lower layers of baseband chips.

Professor Yongdae Kim explained, "The lower layers of smartphone communication modems are not subject to encryption or authentication, creating a structural risk where devices can accept arbitrary signals from external sources." He added, "This research demonstrates the necessity of standardizing mobile communication modem security testing for smartphones and other IoT devices."

The research team is continuing to analyze the 5G lower layers with LLFuzz and is also developing tools for testing the LTE and 5G upper layers. The team is also seeking collaborations to release these tools in the future. The team's position is that "as technological complexity increases, systemic security inspection systems must evolve in parallel."

First author Tuan Dinh Hoang, a Ph.D. student in the School of Electrical Engineering, will present the research results in August at USENIX Security 2025, one of the world's most prestigious conferences in cybersecurity.

※ Paper Title: LLFuzz: An Over-the-Air Dynamic Testing Framework for Cellular Baseband Lower Layers (Tuan Dinh Hoang and Taekkyung Oh, KAIST; CheolJun Park, Kyung Hee Univ.; Insu Yun and Yongdae Kim, KAIST)

※ USENIX paper site: https://www.usenix.org/conference/usenixsecurity25/presentation/hoang (Not yet public), Lab homepage paper: https://syssec.kaist.ac.kr/pub/2025/LLFuzz_Tuan.pdf

※ Open-source repository: https://github.com/SysSec-KAIST/LLFuzz (To be released)

This research was conducted with support from the Institute of Information & Communications Technology Planning & Evaluation (IITP) funded by the Ministry of Science and ICT.

2025.07.25


Development of Core NPU Technology to Improve ChatGPT Inference Performance by Over 60%

The latest generative AI models, such as OpenAI's GPT-4 and Google's Gemini 2.5, require not only high memory bandwidth but also large memory capacity. This is why companies operating generative AI clouds, such as Microsoft and Google, purchase hundreds of thousands of NVIDIA GPUs. To address the core challenges of building such high-performance AI infrastructure, Korean researchers have developed an NPU (Neural Processing Unit)* core technology that improves the inference performance of generative AI models by over 60% on average while consuming approximately 44% less power than the latest GPUs.

*NPU (Neural Processing Unit): An AI-specific semiconductor chip designed to rapidly process artificial neural networks.

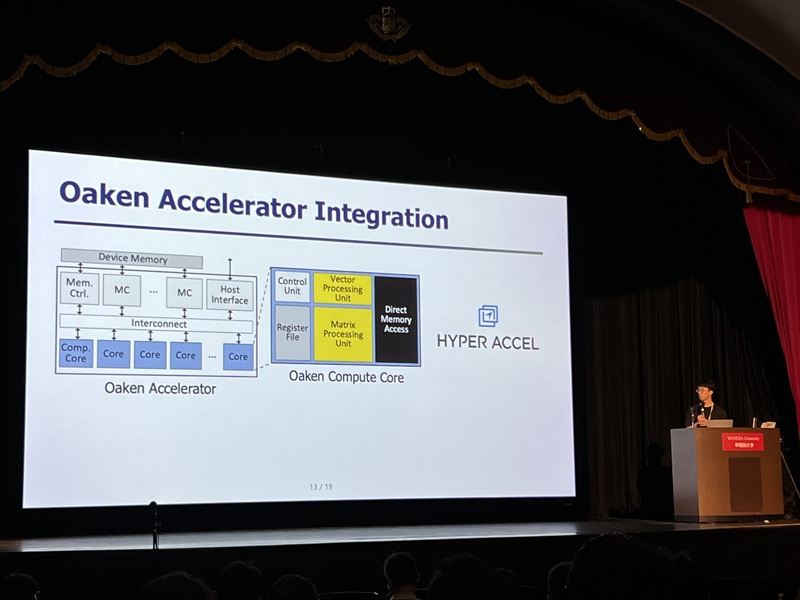

On the 4th, Professor Jongse Park's research team from the KAIST School of Computing, in collaboration with HyperAccel Inc. (a startup founded by Professor Joo-Young Kim of the School of Electrical Engineering), announced that they have developed a high-performance, low-power NPU core technology specialized for generative AI clouds such as ChatGPT.

The technology proposed by the research team was accepted at the '2025 International Symposium on Computer Architecture (ISCA 2025)', a top-tier international conference in the field of computer architecture.

The key objective of this research is to improve the performance of large-scale generative AI services by making inference more lightweight while minimizing accuracy loss and resolving memory bottlenecks. The work stands out for jointly designing AI semiconductors and AI system software, two key components of AI infrastructure.

Existing GPU-based AI infrastructure requires multiple GPU devices to meet high bandwidth and capacity demands, whereas this technology uses KV cache quantization* to build infrastructure of the same level with fewer NPU devices. Because the KV cache accounts for most of the memory usage, quantizing it significantly reduces the cost of building generative AI clouds.

*KV Cache (Key-Value Cache) Quantization: Reducing the size of the data held in the KV cache, a temporary storage space used to speed up generative AI models (e.g., converting 16-bit numbers to 4-bit numbers shrinks the data to one quarter of its original size).
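To make the 16-bit-to-4-bit arithmetic concrete, here is a minimal sketch of generic asymmetric min-max quantization of one group of KV-cache values into 4-bit codes packed two per byte. This is not Oaken's online-offline hybrid scheme, whose details are in the paper; the group size and the value range are assumed for illustration.

```python
from typing import List, Tuple

def quantize_4bit(values: List[float]) -> Tuple[bytes, float, float]:
    """Asymmetric min-max quantization of one group to 4-bit codes (0..15),
    packed two codes per byte. Returns (packed codes, scale, minimum)."""
    lo, hi = min(values), max(values)
    scale = (hi - lo) / 15 if hi > lo else 1.0      # 15 steps between 16 levels
    codes = [min(15, max(0, round((v - lo) / scale))) for v in values]
    if len(codes) % 2:
        codes.append(0)                             # pad to a whole byte
    packed = bytes((codes[i] << 4) | codes[i + 1] for i in range(0, len(codes), 2))
    return packed, scale, lo

def dequantize_4bit(packed: bytes, scale: float, lo: float, n: int) -> List[float]:
    """Unpack the high and low nibbles and map codes back to approximate values."""
    codes: List[int] = []
    for byte in packed:
        codes.extend((byte >> 4, byte & 0x0F))
    return [lo + c * scale for c in codes[:n]]

values = [i / 10 for i in range(-32, 32)]           # 64 hypothetical KV entries
packed, scale, lo = quantize_4bit(values)
fp16_bytes = 2 * len(values)                         # 16 bits = 2 bytes per value
print(len(packed), fp16_bytes)  # 32 128
restored = dequantize_4bit(packed, scale, lo, len(values))
print(max(abs(a - b) for a, b in zip(values, restored)) <= scale / 2 + 1e-9)  # True
```

Each group also needs its scale and minimum stored alongside the codes, so the real footprint is slightly above one quarter; the reconstruction error per value is at most half a quantization step (`scale / 2`).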

The research team designed the hardware to integrate with the memory interface without changing the operational logic of existing NPU architectures. Besides implementing the proposed quantization algorithm, the architecture adopts page-level memory management techniques* to make efficient use of limited memory bandwidth and capacity, and introduces a new encoding technique optimized for the quantized KV cache.

*Page-level memory management technique: Virtualizes memory addresses, as the CPU does, to allow consistent access within the NPU.
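The footnote's idea of virtualizing addresses can be illustrated with a small page table: each sequence's KV cache is addressed by logical token position and mapped onto fixed-size physical pages drawn from a shared pool, so memory is allocated on demand rather than reserved contiguously up front. The page size, class, and method names below are hypothetical, and the sketch says nothing about how the NPU hardware implements its translation.

```python
from typing import Dict, List, Tuple

PAGE_TOKENS = 16  # tokens per physical page (hypothetical)

class KvPageAllocator:
    """Maps each sequence's logical token positions onto physical pages drawn
    from a shared pool, so a sequence's KV cache need not be contiguous."""

    def __init__(self, num_pages: int):
        self.free: List[int] = list(range(num_pages))
        self.tables: Dict[int, List[int]] = {}   # sequence id -> physical pages
        self.lengths: Dict[int, int] = {}        # sequence id -> tokens stored

    def append_token(self, seq: int) -> Tuple[int, int]:
        """Reserve a slot for the next token; return (physical page, offset)."""
        table = self.tables.setdefault(seq, [])
        n = self.lengths.get(seq, 0)
        if n % PAGE_TOKENS == 0:                 # current page full, or no page yet
            if not self.free:
                raise MemoryError("KV cache pool exhausted")
            table.append(self.free.pop())
        self.lengths[seq] = n + 1
        return self.translate(seq, n)

    def translate(self, seq: int, pos: int) -> Tuple[int, int]:
        """Logical token position -> (physical page, offset within the page)."""
        return self.tables[seq][pos // PAGE_TOKENS], pos % PAGE_TOKENS

    def release(self, seq: int) -> None:
        """Return all pages of a finished sequence to the shared pool."""
        self.free.extend(self.tables.pop(seq, []))
        self.lengths.pop(seq, None)

alloc = KvPageAllocator(num_pages=4)
for _ in range(17):
    slot = alloc.append_token(seq=0)
print(slot)                   # (2, 0): token 16 opens a second page
print(alloc.translate(0, 5))  # (3, 5)
alloc.release(0)
```

Fixed-size pages trade a little internal fragmentation (a partly filled last page per sequence) for freedom from external fragmentation, which matters when sequence lengths are not known in advance.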

Furthermore, because the NPU delivers higher performance at lower power than the latest GPUs, an NPU-based AI cloud is expected to offer better cost and power efficiency and significantly reduce operating costs.

Professor Jongse Park stated, "This research, through joint work with HyperAccel Inc., found a solution in generative AI inference lightweighting algorithms and succeeded in developing a core NPU technology that can solve the 'memory problem.' Through this technology, we implemented an NPU with over 60% improved performance compared to the latest GPUs by combining quantization techniques that reduce memory requirements while maintaining inference accuracy, and hardware designs optimized for this."

He further emphasized, "This technology has demonstrated the possibility of implementing high-performance, low-power infrastructure specialized for generative AI, and is expected to play a key role not only in AI cloud data centers but also in the AI transformation (AX) environment represented by dynamic, executable AI such as 'Agentic AI'."

This research was presented by Ph.D. student Minsu Kim and Dr. Seongmin Hong of HyperAccel Inc. as co-first authors at the '2025 International Symposium on Computer Architecture (ISCA)', held in Tokyo, Japan, from June 21 to 25. ISCA, a globally renowned academic conference, received 570 submissions this year and accepted only 127 papers (an acceptance rate of about 22.3%).

※ Paper Title: Oaken: Fast and Efficient LLM Serving with Online-Offline Hybrid KV Cache Quantization

※ DOI: https://doi.org/10.1145/3695053.3731019

Meanwhile, this research was supported by the National Research Foundation of Korea's Excellent Young Researcher Program, the Institute for Information & Communications Technology Planning & Evaluation (IITP), and the AI Semiconductor Graduate School Support Project.

2025.07.07


KAIST researcher Se Jin Park develops 'SpeechSSM,' opening up possibilities for a 24-hour AI voice assistant

<(From left) Prof. Yong Man Ro and Ph.D. candidate Sejin Park>

Se Jin Park, a researcher from Professor Yong Man Ro’s team at KAIST, has announced 'SpeechSSM', a spoken language model capable of generating long-duration speech that sounds natural and remains consistent.

An efficient processing technique based on linear sequence modeling overcomes the limitations of existing spoken language models, enabling high-quality speech generation without time constraints.

Because it can generate natural, human-like long-duration speech, it is expected to be widely used in podcasts, audiobooks, and voice assistants.

Recently, spoken language models (SLMs) have been spotlighted as a next-generation technology that overcomes the limitations of text-based language models: they learn directly from human speech, without text, to understand and generate both linguistic and non-linguistic information. However, existing models have shown significant limitations in generating the long-duration content required for podcasts, audiobooks, and voice assistants. Now, a KAIST researcher has overcome these limitations by developing 'SpeechSSM,' which enables consistent and natural speech generation without time constraints.

KAIST (President Kwang Hyung Lee) announced on the 3rd of July that Ph.D. candidate Se Jin Park from Professor Yong Man Ro's research team in the School of Electrical Engineering has developed 'SpeechSSM,' a spoken language model capable of generating long-duration speech.

This research is set to be presented as an oral paper at ICML (International Conference on Machine Learning) 2025, one of the top machine learning conferences, placing it among the roughly 1% of submitted papers selected for oral presentation. This recognition reflects outstanding research ability and once again demonstrates KAIST's world-leading AI research capabilities.

A major advantage of spoken language models (SLMs) is that they process speech directly, without converting it to text first. Because they can draw on the unique acoustic characteristics of human speakers, they can rapidly generate high-quality speech even at large model scales.

However, existing models struggled to maintain semantic and speaker consistency over long durations. Capturing very detailed information requires breaking speech into fine fragments, which increases the 'speech token resolution' (the number of tokens per second of audio) and, with it, memory consumption.

To solve this problem, Se Jin Park developed 'SpeechSSM,' a spoken language model using a Hybrid State-Space Model, designed to efficiently process and generate long speech sequences.

This model employs a 'hybrid structure' that alternately places 'attention layers' focusing on recent information and 'recurrent layers' that remember the overall narrative flow (long-term context). This allows the story to flow smoothly without losing coherence even when generating speech for a long time. Furthermore, memory usage and computational load do not increase sharply with input length, enabling stable and efficient learning and the generation of long-duration speech.
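
To make the alternating structure concrete, here is a toy streaming sketch in which scalar features pass through alternating recurrent and local-attention layers. It is not SpeechSSM's actual architecture: the class names, the single decaying state, the window size, and the use of the input as query, key, and value are all simplifying assumptions. What it does show is why memory stays flat as the input grows: each attention layer caches only its last `window` inputs, and each recurrent layer keeps a single state.

```python
import math
from collections import deque
from typing import List, Union


class LocalAttention:
    """Toy sliding-window self-attention over scalar features (recent context)."""

    def __init__(self, window: int):
        self.cache = deque(maxlen=window)  # oldest entries are evicted automatically

    def step(self, x: float) -> float:
        self.cache.append(x)
        scores = [x * k for k in self.cache]            # query = key = value = x (toy)
        m = max(scores)
        weights = [math.exp(s - m) for s in scores]     # numerically stable softmax
        total = sum(weights)
        return sum(w * v for w, v in zip(weights, self.cache)) / total


class LinearRecurrence:
    """Toy recurrent layer: one decaying state summarizes all past input."""

    def __init__(self, decay: float):
        self.decay = decay
        self.state = 0.0

    def step(self, x: float) -> float:
        self.state = self.decay * self.state + (1.0 - self.decay) * x
        return self.state


class HybridStack:
    """Alternate recurrent and local-attention layers, one time step at a time."""

    def __init__(self, num_blocks: int = 2, window: int = 4, decay: float = 0.9):
        self.layers: List[Union[LinearRecurrence, LocalAttention]] = []
        for _ in range(num_blocks):
            self.layers += [LinearRecurrence(decay), LocalAttention(window)]

    def step(self, x: float) -> float:
        h = x
        for layer in self.layers:
            h = h + layer.step(h)  # residual connection around each layer
        return h


# Usage: stream 1,000 steps; per-layer memory never exceeds the window or one state.
model = HybridStack(num_blocks=2, window=4)
outputs = [model.step(math.sin(0.1 * t)) for t in range(1000)]
```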

SpeechSSM effectively processes unbounded speech sequences by dividing speech data into short, fixed units (windows), processing each unit independently, and then combining them to create long speech.
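
The windowing step can be sketched generically as follows. The helper names and the per-window function are hypothetical, and the sketch leaves out how a full model would connect consecutive windows; it only illustrates that windows are produced and processed one at a time, so the amount of input handled at once is bounded by the window size rather than by the total length.

```python
from typing import Callable, Iterator, List, Sequence, TypeVar

T = TypeVar("T")
U = TypeVar("U")


def iter_windows(seq: Sequence[T], window: int) -> Iterator[List[T]]:
    """Yield consecutive fixed-size windows (the last one may be shorter)."""
    if window <= 0:
        raise ValueError("window must be positive")
    for start in range(0, len(seq), window):
        yield list(seq[start:start + window])


def process_windowed(seq: Sequence[T], window: int,
                     process_window: Callable[[List[T]], List[U]]) -> List[U]:
    """Process each window independently and concatenate the results in order."""
    output: List[U] = []
    for chunk in iter_windows(seq, window):
        output.extend(process_window(chunk))
    return output


# Usage: ten hypothetical speech tokens in windows of four; the lambda is a
# stand-in for real per-window processing.
tokens = list(range(10))
windows = list(iter_windows(tokens, 4))
result = process_windowed(tokens, 4, lambda w: [t * 2 for t in w])
```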

Additionally, in the speech generation phase, it uses a non-autoregressive audio synthesis model (SoundStorm), which generates many audio tokens in parallel instead of producing them slowly one at a time, enabling fast generation of high-quality speech.
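
SoundStorm's own paper describes its decoder in detail; the sketch below shows only the general idea behind the confidence-based parallel decoding it builds on (in the style of MaskGIT), under simplifying assumptions. Every position starts masked. In each round, a predictor proposes a token and a confidence for all positions in a single pass, and the most confident masked positions are committed according to a cosine schedule. The predictor here is a hypothetical stand-in for a trained network. The key property is that the number of model calls equals the number of rounds, not the sequence length.

```python
import math
from typing import Callable, List, Optional, Tuple

# A predictor takes the partially filled sequence and returns, for every
# position, a proposed token and a confidence score (hypothetical interface).
Predictor = Callable[[List[Optional[int]]], List[Tuple[int, float]]]


def parallel_decode(length: int, predict: Predictor,
                    num_rounds: int) -> Tuple[List[int], int]:
    """Fill `length` masked positions in at most `num_rounds` parallel passes."""
    if num_rounds < 1:
        raise ValueError("num_rounds must be at least 1")
    tokens: List[Optional[int]] = [None] * length
    calls = 0
    for r in range(1, num_rounds + 1):
        masked = [i for i, tok in enumerate(tokens) if tok is None]
        if not masked:
            break
        proposals = predict(tokens)  # one pass proposes every position at once
        calls += 1
        # Cosine schedule: how many positions may stay masked after round r.
        keep_masked = math.floor(length * math.cos(math.pi / 2 * r / num_rounds))
        n_commit = max(1, len(masked) - keep_masked)
        # Commit the most confident proposals among the still-masked positions.
        ranked = sorted(masked, key=lambda i: proposals[i][1], reverse=True)
        for i in ranked[:n_commit]:
            tokens[i] = proposals[i][0]
    # The schedule reaches 0 in the final round, so every position is filled.
    filled = [tok for tok in tokens if tok is not None]
    assert len(filled) == length
    return filled, calls


def toy_predict(tokens: List[Optional[int]]) -> List[Tuple[int, float]]:
    """Stand-in for a trained model: fixed tokens, earlier positions more confident."""
    return [((7 * i) % 5, 1.0 / (1 + i)) for i in range(len(tokens))]


# Usage: 32 positions filled in 8 parallel passes instead of 32 sequential ones.
codes, calls = parallel_decode(32, toy_predict, num_rounds=8)
```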

While existing models were typically evaluated on short speech of about 10 seconds, Se Jin Park created new speech generation evaluation tasks based on a self-built benchmark dataset, 'LibriSpeech-Long,' which supports evaluating generated speech up to 16 minutes long.

Going beyond perplexity (PPL), an existing speech model evaluation metric that mainly reflects local fluency such as grammatical correctness, she proposed new evaluation metrics: 'SC-L' (semantic coherence over time) to assess how well the content stays coherent over time, and 'N-MOS-T' (naturalness mean opinion score over time) to evaluate how natural the speech remains over time. Together, they enable more effective and precise evaluation.
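
The paper defines SC-L and N-MOS-T precisely. The sketch below illustrates only their shared idea of scoring a long generation segment by segment, so that degradation over time becomes visible. It assumes hypothetical inputs: an embedding of the prompt's content, one embedding per generated time segment (for example, derived from transcripts), and listener ratings grouped by segment. Coherence is taken here as cosine similarity to the prompt, and naturalness as the mean rating per segment. These are illustrative choices, not the paper's definitions.

```python
import math
from statistics import mean
from typing import Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def coherence_over_time(prompt_emb: Sequence[float],
                        segment_embs: List[Sequence[float]]) -> List[float]:
    """Similarity of each successive segment to the prompt, in time order."""
    return [cosine(prompt_emb, seg) for seg in segment_embs]


def mos_over_time(ratings: Dict[int, List[float]]) -> Dict[int, float]:
    """Mean opinion score per time segment (segment index -> mean rating)."""
    return {seg: mean(scores) for seg, scores in sorted(ratings.items())}


# Usage with made-up 2-D embeddings and 1-5 ratings for three segments.
prompt = [1.0, 0.0]
segments = [[1.0, 0.0], [0.6, 0.8], [0.0, 1.0]]
coherence = coherence_over_time(prompt, segments)
mos = mos_over_time({0: [4.0, 5.0, 4.0], 1: [4.0, 3.0, 4.0], 2: [3.0, 3.0, 2.0]})
```

A model that drifts off topic would show coherence falling across segments, which a single whole-sequence score can hide.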

These new evaluations confirmed that speech generated by SpeechSSM kept featuring the specific individuals mentioned in the initial prompt, while new characters and events unfolded naturally and stayed consistent with the context, even over long generations. This contrasts sharply with existing models, which tended to drift off topic and become repetitive during long-duration generation.

Ph.D. candidate Se Jin Park explained, "Existing spoken language models had limitations in long-duration generation, so our goal was to develop a spoken language model capable of generating long-duration speech for actual human use." She added, "This research achievement is expected to greatly contribute to various types of voice content creation and voice AI fields like voice assistants, by maintaining consistent content in long contexts and responding more efficiently and quickly in real time than existing methods."

This research, with Se Jin Park as the first author, was conducted in collaboration with Google DeepMind and is scheduled for an oral presentation at ICML (International Conference on Machine Learning) 2025 on July 16th.

Paper Title: Long-Form Speech Generation with Spoken Language Models

DOI: 10.48550/arXiv.2412.18603

Ph.D. candidate Se Jin Park has demonstrated outstanding research capabilities as a member of Professor Yong Man Ro's MLLM (multimodal large language model) research team, through her work integrating vision, speech, and language. Her achievements include a spotlight paper presentation at 2024 CVPR (Computer Vision and Pattern Recognition) and an Outstanding Paper Award at 2024 ACL (Association for Computational Linguistics).

For more information, you can refer to the publication and accompanying demo: SpeechSSM Publications.

2025.07.04

KAIST to Lead the Way in Nurturing Talent and Driving S&T Innovation for a G3 AI Powerhouse

* Focusing on talent development and R&D to help South Korea become a G3 AI powerhouse (one of the top three AI nations).

* Leading the realization of an "AI-driven Basic Society for All" and developing technologies that leverage AI to overcome the crisis in Korea's manufacturing sector.

* Over the past half century, South Korea has risen from the ashes to become a scientific and technological powerhouse with KAIST at its core, which has contributed scientific and technological talent, innovative technology, national industrial growth, and a startup innovation ecosystem.

As public interest in AI and science and technology has significantly grown with the inauguration of the new government, KAIST (President Kwang Hyung Lee) announced its plan, on June 24th, to transform into an "AI-centric, Value-Creating Science and Technology University" that leads national innovation based on science and technology and spearheads solutions to global challenges.

At a time when South Korea is undergoing a major transition to a technology-driven society, KAIST, drawing on its half-century of experience as a "Starter Kit" for national development, is preparing to leap beyond being a mere educational and research institution to become a global innovation hub that creates new social value.

In particular, KAIST has presented a vision for an "AI-driven Basic Society" in which all citizens can use AI without being left behind, helping South Korea rise to become one of the top three AI nations (G3). To this end, through the "National AI Research Hub" project (headed by Kee Eung Kim), which KAIST leads on behalf of South Korea, the institution is working to enhance industrial competitiveness and effectively solve social problems with AI technology.

< KAIST President Kwang Hyung Lee >

KAIST's research achievements in the AI field are garnering international attention. In the top three machine learning conferences (ICML, NeurIPS, ICLR), KAIST ranked 5th globally and 1st in Asia over the past five years (2020-2024). During the same period, based on the number of papers published in top conferences in machine learning, natural language processing, and computer vision (ICML, NeurIPS, ICLR, ACL, EMNLP, NAACL, CVPR, ICCV, ECCV), KAIST ranked 5th globally and 4th in Asia. Furthermore, KAIST has consistently demonstrated unparalleled research capabilities, ranking 1st globally in the average number of papers accepted at ISSCC (International Solid-State Circuits Conference), the world's most prestigious academic conference on semiconductor integrated circuits, for 19 years (2006-2024).

KAIST is continuously expanding its research into core AI technologies, including hyper-scale AI models (Korean LLM), neuromorphic semiconductors, and low-power AI processors, as well as various application areas such as autonomous driving, urban air mobility (UAM), precision medicine, and explainable AI (XAI).

In the manufacturing sector, KAIST's AI technologies are also driving on-site innovation. Professor Young Jae Jang's team has enhanced productivity in advanced manufacturing fields like semiconductors and displays through digital twins that use manufacturing site data and AI-based prediction technology. Professor Song Min Kim's team developed ultra-low-power wireless tag technology capable of tracking locations with sub-centimeter precision, accelerating the implementation of smart factories. Technologies such as industrial process optimization and equipment failure prediction developed by INEEJI Co., Ltd., founded by Professor Jaesik Choi, are being rapidly adopted in real industrial settings and are already yielding results. INEEJI was designated as a national strategic technology in the 'Explainable AI (XAI)' field by the government in March.

< Researchers performing data analysis for AI research >

Practical applications are also emerging in robotics, a field closely linked to AI. Professor Jemin Hwangbo's team from the Department of Mechanical Engineering drew attention with RAIBO 2, a newly developed quadrupedal robot for high-risk environments such as disaster relief and rough-terrain exploration. Professor Kyoung Chul Kong's team and Angel Robotics Co., Ltd. developed the WalkOn Suit exoskeleton robot, significantly improving the quality of life for people with complete lower-body paralysis or walking disabilities.

Additionally, remarkable research is ongoing in future core technology areas such as AI semiconductors, quantum cryptography communication, ultra-small satellites, hydrogen fuel cells, next-generation batteries, and biomimetic sensors. Notably, space exploration technology based on small satellites, asteroid exploration projects, energy harvesting, and high-speed charging technologies are gaining attention.

In advanced bio and life sciences in particular, KAIST is collaborating with Germany's Merck on various research initiatives, including synthetic biology and mRNA. KAIST is also contributing to the construction of a 430 billion won Merck Bio-Center in Daejeon, helping stimulate the local economy and create jobs.

Building on these cutting-edge research capabilities, KAIST continues to expand its influence not only in industry but also on the global stage. It has established strategic partnerships with leading universities worldwide, including MIT, Stanford University, and New York University (NYU). Notably, KAIST and NYU have established a joint campus in New York to strengthen personnel exchange and collaborative research. Active industry-academia collaborations with global companies such as Google, Intel, and TSMC are also ongoing, playing a pivotal role in future technology development and the creation of an innovation ecosystem.

These activities have also fostered a strong startup ecosystem that drives South Korean industries. The wave of startups that began with companies like Qnix Computer, Nexon, and Naver has grown to a total of 1,914 companies to date, with cumulative assets of 94 trillion won, sales of 36 trillion won, and approximately 60,000 employees. Over 90% of these are technology-based startups originating from faculty and student labs, demonstrating a model of tangible economic contribution grounded in science and technology.

< Students at work >

Having consistently generated diverse achievements, KAIST has already produced approximately 80,000 "KAISTians" who have created innovation through challenge and failure, and is currently recruiting new talent to continue driving innovation that transforms South Korea and the world.

President Kwang Hyung Lee emphasized, "KAIST will establish itself as a global leader in science and technology, designing the future of South Korea and humanity and creating tangible value." He added, "We will focus on talent nurturing and research and development to realize the new government's national agenda of becoming a G3 AI powerhouse."

He further stated, "KAIST's vision for the AI field, in which it places particular emphasis, is to strive for a society where everyone can freely utilize AI. We will contribute to significantly boosting productivity by recovering manufacturing competitiveness through AI and actively disseminating physical AI, AI robots, and AI mobility technologies to industrial sites."

2025.06.24

KAIST Turns an Unprecedented Idea into Reality: Quantum Computing with Magnets

What started as an idea under KAIST’s Global Singularity Research Project—"Can we build a quantum computer using magnets?"—has now become a scientific reality. A KAIST-led international research team has successfully demonstrated a core quantum computing technology using magnetic materials (ferromagnets) for the first time in the world.

KAIST (President Kwang-Hyung Lee) announced on the 6th of May that a team led by Professor Kab-Jin Kim from the Department of Physics, in collaboration with Argonne National Laboratory and the University of Illinois Urbana-Champaign (UIUC), has developed a “photon-magnon hybrid chip” and implemented real-time, multi-pulse interference using magnetic materials, a world first.

< Photo 1. Dr. Moojune Song (left) and Professor Kab-Jin Kim (right) of KAIST Department of Physics >

In simple terms, the researchers developed a special chip that synchronizes light and internal magnetic vibrations (magnons), enabling phase information to be transmitted between distant magnets. They succeeded in observing and controlling interference between multiple signals in real time. This is the first experimental evidence that magnets can serve as key components in quantum computing, and a pivotal step toward magnet-based quantum platforms.

The north and south poles of a magnet arise from the spins of electrons inside its atoms. When many of these spins align, their collective vibration is quantized as a quasiparticle known as a “magnon.”

Magnons are especially promising because of their nonreciprocal nature: they can carry information in only one direction, which makes them suitable for isolating quantum noise in compact quantum chips. They can also couple with both light and microwaves, which could enable long-distance quantum communication over tens of kilometers.

Moreover, using special materials like antiferromagnets could allow quantum computers to operate at terahertz (THz) frequencies, far surpassing today’s hardware limitations, and possibly enabling room-temperature quantum computing without the need for bulky cryogenic equipment.

To build such a system, however, one must be able to transmit, measure, and control the phase information of magnons—the starting point and propagation of their waveforms—in real time. This had not been achieved until now.

< Figure 1. Superconducting Circuit-Based Magnon-Photon Hybrid System. (a) Schematic diagram of the device. A NbN superconducting resonator circuit fabricated on a silicon substrate is coupled with spherical YIG magnets (250 μm diameter), and magnons are generated and measured in real-time via a vertical antenna. (b) Photograph of the actual device. The distance between the two YIG spheres is 12 mm, a distance at which they cannot influence each other without the superconducting circuit. >

Professor Kim’s team used two tiny magnetic spheres made of Yttrium Iron Garnet (YIG) placed 12 mm apart with a superconducting resonator in between—similar to those used in quantum processors by Google and IBM. They input pulses into one magnet and successfully observed lossless transmission of magnon vibrations to the second magnet via the superconducting circuit.
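
The transfer between the two spheres can be pictured with an idealized coupled-mode model. The sketch below assumes two identical, lossless magnon modes and folds the superconducting resonator into a single effective coupling rate g between them; both are simplifications (the real device has its own resonator dynamics and damping), and the value of g is hypothetical. Under these assumptions, energy swaps completely between the spheres with period π/g while the total is conserved.

```python
import math
from typing import Tuple


def evolve(a1: complex, a2: complex, g: float, t: float) -> Tuple[complex, complex]:
    """Exact solution of i*da1/dt = g*a2, i*da2/dt = g*a1 after time t.

    a1 and a2 are the complex magnon amplitudes in the two spheres (rotating
    frame, identical frequencies, no damping); g is the effective coupling.
    """
    c, s = math.cos(g * t), math.sin(g * t)
    return c * a1 - 1j * s * a2, -1j * s * a1 + c * a2


# Usage: excite sphere 1 only, with a hypothetical coupling of 0.05 rad/ns.
g = 0.05
for t_ns in (0.0, math.pi / (4 * g), math.pi / (2 * g), math.pi / g):
    b1, b2 = evolve(1.0 + 0j, 0j, g, t_ns)
    print(f"t = {t_ns:6.1f} ns: |a1|^2 = {abs(b1) ** 2:.3f}, |a2|^2 = {abs(b2) ** 2:.3f}")
```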

They confirmed that, for inputs ranging from a single nanosecond pulse up to four microwave pulses, the magnon vibrations preserved their phase information and showed predictable constructive or destructive interference in real time, a behavior known as coherent interference.

By adjusting the pulse frequencies and their intervals, the researchers could also freely control the interference patterns of magnons, effectively showing for the first time that electrical signals can be used to manipulate magnonic quantum states.
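
Under the same kind of idealized assumptions (two identical lossless modes, an effective coupling g, hypothetical values), the effect of a second pulse can be sketched. A second pulse injected into sphere 1 after a delay τ adds to whatever amplitude is present there at that moment, and whether the two add up or cancel depends on the delay and on the pulse's relative phase φ. In the experiment that phase is controlled through the pulse interval and carrier frequency; here it is simply a free parameter. For unit amplitudes, the total magnon energy works out to 2(1 + cos(gτ)·cos φ), so it can be tuned anywhere between 0 (destructive) and 4 (constructive).

```python
import cmath
import math
from typing import Tuple


def evolve(a1: complex, a2: complex, g: float, t: float) -> Tuple[complex, complex]:
    """Lossless exchange between two identical modes with effective coupling g."""
    c, s = math.cos(g * t), math.sin(g * t)
    return c * a1 - 1j * s * a2, -1j * s * a1 + c * a2


def two_pulse_energy(g: float, delay: float, phase: float,
                     amplitude: float = 1.0) -> float:
    """Total magnon energy |a1|^2 + |a2|^2 after two pulses into sphere 1."""
    a1, a2 = complex(amplitude), 0j              # first pulse excites sphere 1
    a1, a2 = evolve(a1, a2, g, delay)            # free exchange during the delay
    a1 += amplitude * cmath.exp(1j * phase)      # second pulse with relative phase
    return abs(a1) ** 2 + abs(a2) ** 2


# Usage: the second pulse arrives after one full round trip back to sphere 1.
g = 0.05                      # hypothetical coupling, rad/ns
delay = math.pi / g
for phase in (0.0, math.pi / 2, math.pi):
    print(f"phase = {phase:.2f} rad: energy = {two_pulse_energy(g, delay, phase):.3f}")
```

At this particular delay the returning excitation has its sign flipped, so an in-phase second pulse cancels it and an opposite-phase pulse doubles it; changing the delay or the phase moves the result continuously between these extremes.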

This work demonstrated that quantum gate operations using multiple pulses—a fundamental technique in quantum information processing—can be implemented using a hybrid system of magnetic materials and superconducting circuits. This opens the door for the practical use of magnet-based quantum devices.

< Figure 2. Experimental Data. (a) Measurement results of magnon-magnon band anticrossing via continuous wave measurement, showing the formation of a strong coupling hybrid system. (b) Magnon pulse exchange oscillation phenomenon between YIG spheres upon single pulse application. It can be seen that magnon information is coherently transmitted at regular time intervals through the superconducting circuit. (c,d) Magnon interference phenomenon upon dual pulse application. The magnon information state can be arbitrarily controlled by adjusting the time interval and carrier frequency between pulses. >

Professor Kab-Jin Kim stated, “This project began with a bold, even unconventional idea proposed to the Global Singularity Research Program: ‘What if we could build a quantum computer with magnets?’ The journey has been fascinating, and this study not only opens a new field of quantum spintronics, but also marks a turning point in developing high-efficiency quantum information processing devices.”

The research was co-led by postdoctoral researcher Moojune Song (KAIST), Dr. Yi Li and Dr. Valentine Novosad from Argonne National Lab, and Prof. Axel Hoffmann’s team at UIUC. The results were published in Nature Communications on April 17 and npj Spintronics on April 1, 2025.

Paper 1: Single-shot magnon interference in a magnon-superconducting-resonator hybrid circuit, Nat. Commun. 16, 3649 (2025)

DOI: https://doi.org/10.1038/s41467-025-58482-2

Paper 2: Single-shot electrical detection of short-wavelength magnon pulse transmission in a magnonic ultra-thin-film waveguide, npj Spintronics 3, 12 (2025)

DOI: https://doi.org/10.1038/s44306-025-00072-5

The research was supported by KAIST’s Global Singularity Research Initiative, the National Research Foundation of Korea (including the Mid-Career Researcher, Leading Research Center, and Quantum Information Science Human Resource Development programs), and the U.S. Department of Energy.

2025.06.12


KAIST Research Team Breaks Down Musical Instincts with AI

Music, often called the universal language, is found in every known culture. Could a ‘musical instinct’, then, be shared to some degree despite the vast environmental differences among cultures?

On January 16, a KAIST research team led by Professor Hawoong Jung of the Department of Physics announced that it had used an artificial neural network model to identify the principle by which musical instincts emerge in the human brain without special learning.

Previously, many researchers have attempted to identify the similarities and differences among the music of different cultures and to understand the origin of its universality. A paper published in Science in 2019 revealed that music is produced in all ethnographically distinct cultures and that similar forms of beats and tunes are used across them. Neuroscientists have also found that a specific part of the human brain, the auditory cortex, is responsible for processing musical information.

Professor Jung’s team used an artificial neural network model to show that cognitive functions for music form spontaneously as a result of processing auditory information from nature, without being taught music. The team used AudioSet, a large-scale collection of sound data provided by Google, to train the artificial neural network on a wide variety of sounds. Interestingly, the researchers discovered that certain neurons within the network model responded selectively to music. In other words, they observed the spontaneous emergence of neurons that responded minimally to other sounds, such as those of animals, nature, or machines, but strongly to many forms of music, both instrumental and vocal.
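One common way to quantify "responds selectively to music" is a per-unit selectivity index that compares a unit's average activation on music clips with its average activation on all other clips. The sketch below assumes that approach; the index formula, the 0.5 threshold, the function names, and the toy activations are hypothetical illustrations and not necessarily the criterion used in the paper.

```python
from statistics import mean


def music_selectivity(responses, is_music):
    """Per-unit selectivity index in [-1, 1] (hypothetical metric).

    responses: list of per-clip activation vectors (one float per unit),
               assumed non-negative, e.g. taken after a ReLU.
    is_music:  list of booleans, True where the clip is music.
    Returns (mean_music - mean_other) / (mean_music + mean_other) per unit.
    """
    n_units = len(responses[0])
    indices = []
    for u in range(n_units):
        music = [r[u] for r, m in zip(responses, is_music) if m]
        other = [r[u] for r, m in zip(responses, is_music) if not m]
        mu_music, mu_other = mean(music), mean(other)
        total = mu_music + mu_other
        # A silent unit (total == 0) is treated as non-selective.
        indices.append(0.0 if total == 0 else (mu_music - mu_other) / total)
    return indices


def music_selective_units(responses, is_music, threshold=0.5):
    """Indices of units whose selectivity exceeds a hypothetical threshold."""
    scores = music_selectivity(responses, is_music)
    return [u for u, s in enumerate(scores) if s > threshold]


if __name__ == "__main__":
    # Toy activations: 4 clips x 3 units; the first two clips are music.
    responses = [
        [0.9, 0.2, 0.5],
        [0.8, 0.3, 0.5],
        [0.1, 0.2, 0.5],
        [0.0, 0.3, 0.5],
    ]
    is_music = [True, True, False, False]
    print(music_selective_units(responses, is_music))
```

In the toy data only unit 0 fires much more for music than for other sounds, so it is the only unit flagged; units 1 and 2 respond equally to both categories and score zero.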

The neurons in the artificial neural network model behaved similarly to those in the auditory cortex of a real brain. For example, the artificial neurons responded less strongly to music that had been cut into short segments and rearranged. This indicates that the spontaneously generated music-selective neurons encode the temporal structure of music. This property was not limited to a specific genre but emerged across 25 different genres, including classical, pop, rock, jazz, and electronic.
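The manipulation described above, cutting music into short pieces and reassembling them in a different order, can be sketched as a segment shuffle. It keeps every audio sample and destroys only the temporal order, so a weaker response to the shuffled clip than to the original points to sensitivity to temporal structure. The function name, sample rate, and segment length below are hypothetical; the article does not state the segment durations the researchers used.

```python
import random


def shuffle_segments(samples, segment_len, seed=None):
    """Cut a waveform into consecutive segments and return them in random order.

    samples:     sequence of audio samples (e.g. a list of floats).
    segment_len: number of samples per segment; the final segment may be shorter.
    seed:        optional seed so that the same permutation can be reproduced.
    Every sample is kept and order within each segment is preserved;
    only the order of the segments changes.
    """
    if segment_len <= 0:
        raise ValueError("segment_len must be positive")
    segments = [list(samples[i:i + segment_len])
                for i in range(0, len(samples), segment_len)]
    rng = random.Random(seed)
    rng.shuffle(segments)
    return [x for seg in segments for x in seg]


if __name__ == "__main__":
    sample_rate = 16_000                 # hypothetical sample rate in Hz
    segment_len = sample_rate * 50 // 1000  # hypothetical 50 ms segments
    clip = [float(i) for i in range(sample_rate)]  # stand-in 1 s "waveform"
    shuffled = shuffle_segments(clip, segment_len, seed=0)
    print(len(shuffled), sorted(shuffled) == clip)
```

In a full experiment, both the original and the shuffled clip would be passed through the trained network and the activations of the music-selective units compared; that model-side step is not shown here.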

< Figure 1. Illustration of the musicality of the brain and artificial neural network (created with DALL·E3 AI based on the paper content) >

Furthermore, suppressing the activity of the music-selective neurons substantially reduced the network’s accuracy in recognizing other natural sounds. In other words, the neural function that processes musical information also helps process other sounds, suggesting that ‘musical ability’ may be an instinct formed through an evolutionary adaptation for better processing sounds from nature.

Professor Hawoong Jung, who advised the research, said, “The results of our study imply that evolutionary pressure has contributed to forming the universal basis for processing musical information in various cultures.” On the significance of the research, he explained, “We hope this artificially built model with human-like musicality will become an original model for various applications, including AI music generation, music therapy, and research in musical cognition.” He also noted its limitations, adding, “This research, however, does not take into account the developmental process that follows the learning of music, and it must be noted that this is a study of the foundation for processing musical information in early development.”

< Figure 2. The artificial neural network that learned to recognize non-musical natural sounds in cyberspace distinguishes between music and non-music. >

This research, conducted by first author Dr. Gwangsu Kim of the KAIST Department of Physics (current affiliation: MIT Department of Brain and Cognitive Sciences) and Dr. Dong-Kyum Kim (current affiliation: IBS) was published in Nature Communications under the title, “Spontaneous emergence of rudimentary music detectors in deep neural networks”.

This research was supported by the National Research Foundation of Korea.

2024.01.23
