KAIST Takes the Lead in Developing Core Technologies for Generative AI National R&D Project

KAIST (President Kwang Hyung Lee) is leading the transition to AI Transformation (AX) by advancing research topics based on the practical technological demands of industries, fostering AI talent, and demonstrating research outcomes in industrial settings. In this context, KAIST announced on the 13th of August that it is at the forefront of strengthening the nation's AI technology competitiveness by developing core AI technologies via national R&D projects for generative AI led by the Ministry of Science and ICT.

In the 'Generative AI Leading Talent Cultivation Project,' KAIST was selected as a joint research institution for all three projects—two led by industry partners and one by a research institution—and will thus be tasked with the dual challenge of developing core generative AI technologies and cultivating practical, core talent through industry-academia collaborations.

Moreover, in the 'Development of a Proprietary AI Foundation Model' project, KAIST faculty members are participating as key researchers in four out of five consortia, establishing the university as a central hub for domestic generative AI research.

Each project in the Generative AI Leading Talent Cultivation Project will receive 6.7 billion won, while each consortium in the proprietary AI foundation model development project will receive a total of 200 billion won in government support, including GPU infrastructure.

As part of the 'Generative AI Leading Talent Cultivation Project,' which runs until the end of 2028, KAIST is collaborating with LG AI Research. Professor Noseong Park from the School of Computing will participate as the principal investigator for KAIST, conducting research in the field of physics-based generative AI (Physical AI). This project focuses on developing image and video generation technologies based on physical laws and developing a 'World Model.'

<(From Left) Professor Noseong Park, Professor Jae-gil Lee, Professor Jiyoung Whang, Professor Sung-Eui Yoon, Professor Hyunwoo Kim>

In particular, research by the teams of Professor Noseong Park and Professor Sung-Eui Yoon proposes a model structure designed to help AI learn the rules of the physical world more precisely. This is considered a core technology for Physical AI.

Professors Noseong Park, Jae-gil Lee, Jiyoung Whang, Sung-Eui Yoon, and Hyun-Woo Kim from the School of Computing, who have been globally recognized for their achievements in the AI field, are jointly participating in this project. This year, they have presented work at top AI conferences such as ICLR, ICRA, ICCV, and ICML, including: ▲ Research on physics-based Ollivier Ricci-flow (ICLR 2025, Prof. Noseong Park) ▲ Technology to improve the navigation efficiency of quadruped robots (ICRA 2025, Prof. Sung-Eui Yoon) ▲ A multimodal large language model for text-video retrieval (ICCV 2025, Prof. Hyun-Woo Kim) ▲ Structured representation learning for knowledge generation (ICML 2025, Prof. Jiyoung Whang).

In the collaboration with NC AI, Professor Tae-Kyun Kim from the School of Computing is participating as the principal investigator to develop multimodal AI agent technology. The research will explore technologies applicable to the entire gaming industry, such as 3D modeling, animation, avatar expression generation, and character AI. It is expected to help train practical AI talent by giving students hands-on experience in the industrial field, and to make the game production pipeline more efficient.

As the principal investigator, Professor Tae-Kyun Kim, a renowned scholar in 3D computer vision and generative AI, is developing key technologies for creating immersive avatars in the virtual and gaming industries. He will apply a first-person full-body motion diffusion model, which he developed through a joint research project with Meta, to VR and AR environments.

<Professor Tae-Kyun Kim, Minhyeok Seong, and Tae-Hyun Oh from the School of Computing, and Professor Sung-Hee Lee, Woon-Tack Woo, Jun-Yong Noh, and Kyung-Tae Lim from the Graduate School of Culture Technology, Professor Ki-min Lee, Seungryong Kim from the Kim Jae-chul Graduate School of AI>

Professors Tae-Kyun Kim, Minhyeok Seong, and Tae-Hyun Oh from the School of Computing, and Professors Sung-Hee Lee, Woon-Tack Woo, Jun-Yong Noh, and Kyung-Tae Lim from the Graduate School of Culture Technology, are participating in the NC AI project. They have presented globally recognized work at venues including CVPR 2025 and ICLR 2025: ▲ A first-person full-body motion diffusion model (CVPR 2025, Prof. Tae-Kyun Kim) ▲ Stochastic diffusion synchronization technology for image generation (ICLR 2025, Prof. Minhyeok Seong) ▲ The creation of a large-scale 3D facial mesh video dataset (ICLR 2025, Prof. Tae-Hyun Oh) ▲ Object-adaptive agent motion generation technology, InterFaceRays (Eurographics 2025, Prof. Sung-Hee Lee) ▲ 3D neural face editing technology (CVPR 2025, Prof. Jun-Yong Noh) ▲ Research on selective retrieval augmentation for multilingual vision-language models (COLING 2025, Prof. Kyung-Tae Lim).

In the project led by the Korea Electronics Technology Institute (KETI), Professor Seungryong Kim from the Kim Jae-chul Graduate School of AI is participating in generative AI technology development. His team recently developed new technology for extracting robust point-tracking information from video data in collaboration with Adobe Research and Google DeepMind, proposing a key technology for clearly understanding and generating videos.

Each industry partner will open joint courses with KAIST and provide their generative AI foundation models for education and research. Selected outstanding students will be dispatched to these companies to conduct practical research, and KAIST faculty will also serve as adjunct professors at the in-house AI graduate school established by LG AI Research.

<Egocentric Whole-Body Motion Diffusion (CVPR 2025, Prof. Taekyun Kim's Lab), Stochastic Diffusion Synchronization for Image Generation (ICLR 2025, Prof. Minhyuk Sung's Lab), A Large-Scale 3D Face Mesh Video Dataset (ICLR 2025, Prof. Taehyun Oh's Lab), InterFaceRays: Object-Adaptive Agent Action Generation (Eurographics 2025, Prof. Sunghee Lee's Lab), 3D Neural Face Editing (CVPR 2025, Prof. Junyong Noh's Lab), and Selective Retrieval Augmentation for Multilingual Vision-Language Models (COLING 2025, Prof. Kyeong-tae Lim's Lab)>

Meanwhile, KAIST showed an unrivaled presence by participating in four consortia for the Ministry of Science and ICT's 'Proprietary AI Foundation Model Development' project.

In the NC AI Consortium, Professors Tae-Kyun Kim, Sung-Eui Yoon, Noseong Park, Jiyoung Whang, and Minhyeok Seong from the School of Computing are participating, focusing on the development of large multimodal models (LMMs) and robot-based models. They are particularly concentrating on developing LMMs that learn common sense about space, physics, and time. They have formed a research team optimized for developing next-generation multimodal AI models that can understand and interact with the physical world, equipped with an 'all-purpose AI brain' capable of simultaneously understanding and processing diverse information such as text, images, video, and sound.

In the Upstage Consortium, Professors Jae-gil Lee and Hyeon-eon Oh from the School of Computing, both renowned scholars in data AI and NLP (natural language processing), along with Professor Kyung-Tae Lim from the Graduate School of Culture Technology, an LLM expert, are responsible for developing vertical models for industries such as finance, law, and manufacturing. The KAIST researchers will concentrate on developing practical AI models that are directly applicable to industrial settings and tailored to each specific industry.

The Naver Consortium includes Professor Tae-Hyun Oh from the School of Computing, who has developed key technology for multimodal learning and compositional language-vision models, Professor Hyun-Woo Kim, who has proposed video reasoning and generation methods using language models, and faculty from the Kim Jae-chul Graduate School of AI and the Department of Electrical Engineering.

In the SKT Consortium, Professor Ki-min Lee from the Kim Jae-chul Graduate School of AI, who has achieved outstanding results in text-to-image generation, human preference modeling, and visual robotic manipulation technology development, is participating. This technology is expected to play a key role in developing personalized services and customized AI solutions for telecommunications companies.

This outcome is considered a successful culmination of KAIST's strategy for developing AI technology based on industry demand and centered on on-site demonstrations.

KAIST President Kwang Hyung Lee said, "For AI technology to go beyond academic achievements and be connected to and practical for industry, continuous government support, research, and education centered on industry-academia collaboration are essential. KAIST will continue to strive to solve problems in industrial settings and make a real contribution to enhancing the competitiveness of the AI ecosystem."

He added that while the project led by Professor Sung-Ju Hwang from the Kim Jae-chul Graduate School of AI, which had applied as a lead institution for the proprietary foundation model development project, was unfortunately not selected, it was a meaningful challenge that stood out for its original approach and bold attempts. President Lee further commented, "Regardless of whether it was selected or not, such attempts will accumulate and make the Korean AI ecosystem even richer."

2025.08.13

KAIST Develops World’s First Wireless OLED Contact Lens for Retinal Diagnostics

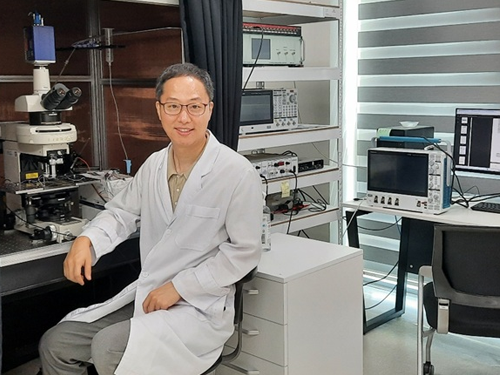

<ID-style photograph against a laboratory background featuring an OLED contact lens sample (center), flanked by the principal authors (left: Professor Seunghyup Yoo; right: Dr. Jee Hoon Sim). Above them (from top to bottom) are: Professor Se Joon Woo, Professor Sei Kwang Hahn, Dr. Su-Bon Kim, and Dr. Hyeonwook Chae>

Electroretinography (ERG) is an ophthalmic diagnostic method used to determine whether the retina is functioning normally. It is widely employed for diagnosing hereditary retinal diseases or assessing retinal function decline.

A team of Korean researchers has developed a next-generation wireless ophthalmic diagnostic technology that replaces the existing stationary, darkroom-based retinal testing method by incorporating an “ultrathin OLED” into a contact lens. This breakthrough is expected to have applications in diverse fields such as myopia treatment, ocular biosignal analysis, augmented-reality (AR) visual information delivery, and light-based neurostimulation.

On August 12, KAIST (President Kwang Hyung Lee) announced that a research team led by Professor Seunghyup Yoo from the School of Electrical Engineering has developed the world’s first wireless contact lens-based wearable retinal diagnostic platform using organic light-emitting diodes (OLEDs). The work was carried out in collaboration with Professor Se Joon Woo of Seoul National University Bundang Hospital (Director Jeong-Han Song), Professor Sei Kwang Hahn of POSTECH (President Sung-Keun Kim), who is also CEO of PHI Biomed Co., and the Electronics and Telecommunications Research Institute (ETRI, President Seungchan Bang) under the National Research Council of Science & Technology (NST, Chairman Youngshik Kim).

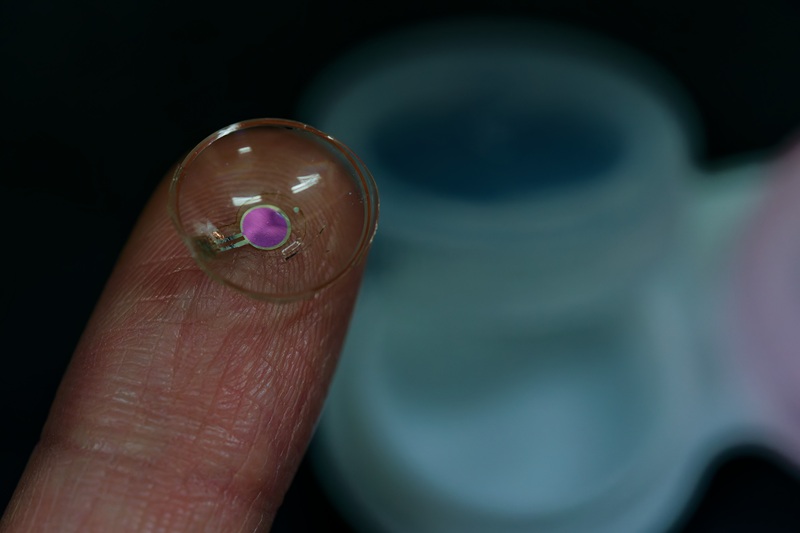

<Figure 1. Schematic and photograph of the wireless OLED contact lens>

This technology enables ERG simply by wearing the lens, eliminating the need for large specialized light sources and dramatically simplifying the conventional, complex ophthalmic diagnostic environment.

Traditionally, ERG requires the use of a stationary Ganzfeld device in a dark room, where patients must keep their eyes open and remain still during the test. This setup imposes spatial constraints and can lead to patient fatigue and compliance challenges.

To overcome these limitations, the joint research team integrated an ultrathin flexible OLED (approximately 12.5 μm thick, or 6–8 times thinner than a human hair) into a contact lens electrode for ERG. They also equipped it with a wireless power receiving antenna and a control chip, completing a system capable of independent operation.

For power transmission, the team adopted a wireless power transfer method using a 433 MHz resonant frequency suitable for stable wireless communication. This was also demonstrated in the form of a wireless controller embedded in a sleep mask, which can be linked to a smartphone, further enhancing practical usability.

<Figure 2. Schematic of the electroretinography (ERG) testing system using a wireless OLED contact lens and an example of an actual test in progress>

While most smart contact lens–type light sources developed for ocular illumination have used inorganic LEDs, these rigid devices emit light almost from a single point, which can lead to excessive heat accumulation and thus limits the usable light intensity. In contrast, OLEDs are areal light sources and were shown to induce retinal responses even under low luminance conditions. In this study, under a relatively low luminance* of 126 nits, the OLED contact lens successfully induced stable ERG signals, producing diagnostic results equivalent to those obtained with existing commercial light sources.

*Luminance: A value indicating how brightly a surface or screen emits light; for reference, the luminance of a smartphone screen is about 300–600 nits (can exceed 1000 nits at maximum).
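As a quick sanity check on the figures quoted above, the short Python sketch below works out what they imply. It uses only the numbers stated in this article (12.5 μm thickness, the "6–8 times thinner" ratio, 126 nits, and the 300–600 nit smartphone range). The variable and function names are illustrative assumptions, and the hair diameter is derived from the stated ratio rather than measured.

```python
# Figures quoted in the article; everything else here is derived arithmetic.
OLED_THICKNESS_UM = 12.5          # ultrathin OLED thickness in micrometers
THINNER_RATIOS = (6, 8)           # "6-8 times thinner than a human hair"
OLED_LUMINANCE_NITS = 126         # luminance used in the ERG test
PHONE_LUMINANCE_NITS = (300, 600) # typical smartphone range from the footnote


def implied_hair_diameter_um(thickness_um: float, ratio: float) -> float:
    """Hair diameter implied by 'ratio times thinner' (hypothetical helper)."""
    return thickness_um * ratio


def luminance_fraction(source_nits: float, reference_nits: float) -> float:
    """Fraction of a reference luminance that the source reaches."""
    return source_nits / reference_nits


hair_low, hair_high = (
    implied_hair_diameter_um(OLED_THICKNESS_UM, r) for r in THINNER_RATIOS
)
frac_vs_dim_phone = luminance_fraction(OLED_LUMINANCE_NITS, PHONE_LUMINANCE_NITS[0])
frac_vs_bright_phone = luminance_fraction(OLED_LUMINANCE_NITS, PHONE_LUMINANCE_NITS[1])

print(f"Implied hair diameter: {hair_low:.0f}-{hair_high:.0f} um")
print(f"OLED luminance vs. phone screen: "
      f"{frac_vs_bright_phone:.0%}-{frac_vs_dim_phone:.0%}")
```

In other words, the stated ratio corresponds to a hair diameter of roughly 75–100 μm, and the test luminance is well under half of a typical smartphone screen's brightness.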

Animal tests confirmed that the surface temperature of a rabbit’s eye wearing the OLED contact lens remained below 27°C, avoiding corneal heat damage, and that the light-emitting performance was maintained even in humid environments—demonstrating its effectiveness and safety as an ERG diagnostic tool in real clinical settings.

Professor Seunghyup Yoo stated that “integrating the flexibility and diffusive light characteristics of ultrathin OLEDs into a contact lens is a world-first attempt,” and that “this research can help expand smart contact lens technology into on-eye optical diagnostic and phototherapeutic platforms, contributing to the advancement of digital healthcare technology.”

< Wireless operation of the OLED contact lens >

Jee Hoon Sim, Hyeonwook Chae, and Su-Bon Kim, PhD researchers at KAIST, played a key role as co-first authors alongside Dr. Sangbaie Shin of PHI Biomed Co. The corresponding authors are Professor Seunghyup Yoo (School of Electrical Engineering, KAIST), Professor Sei Kwang Hahn (Department of Materials Science and Engineering, POSTECH), and Professor Se Joon Woo (Seoul National University Bundang Hospital). The results were published online in the internationally renowned journal ACS Nano on May 1st.

● Paper title: Wireless Organic Light-Emitting Diode Contact Lenses for On-Eye Wearable Light Sources and Their Application to Personalized Health Monitoring

● DOI: https://doi.org/10.1021/acsnano.4c18563

● Related video clip: http://bit.ly/3UGg6R8

< Close-up of the OLED contact lens sample >

2025.08.12

2025 APEC Youth STEM Science Exchange Program Successfully Completed

<Photo1. Group photo at the end of the program>

KAIST (President Kwang Hyung Lee) announced on the 11th of August that it successfully hosted the 'APEC Youth STEM Conference KAIST Academic Program,' a global science exchange program for 28 youth researchers from 10 countries and over 30 experts who participated in the '2025 APEC Youth STEM* Collaborative Research and Competition.' The event was held at the main campus in Daejeon on Saturday, August 9.

*STEM (Science, Technology, Engineering, Math) refers to the fields of science and engineering.

The competition was hosted by the Ministry of Science and ICT and organized by the APEC Science Gifted Mentoring Center. It took place from Wednesday, August 6, to Saturday, August 9, 2025, at KAIST in Daejeon and the Korea Science Academy of KAIST in Busan. The KAIST program was organized by the APEC Science Gifted Mentoring Center and supported by the KAIST Institute for the Gifted and Talented in Science Education.

Participants had the opportunity to experience Korea's cutting-edge research infrastructure firsthand, broaden their horizons in science and technology, and collaborate and exchange ideas with future science talents from the APEC region.

As the 2025 APEC chair, Korea is promoting various international collaborations to discover and nurture the next generation of talent in the STEM fields. The KAIST academic exchange program was particularly meaningful as it was designed with the international goal of revitalizing exchanges among gifted science students and expanding the basis for cooperation among APEC member countries. It moved beyond the traditional online-centric research collaboration model to focus on hands-on, on-site, and convergence research experiences.

The global science exchange program at KAIST introduced participants to KAIST's world-class educational and research environment and provided various academic content to allow them to experience real-world examples of convergence technology-based research.

<Photo2. Program Activities>

First, the KAIST Admissions Office participated, introducing KAIST's admissions system and its educational and research environment to outstanding international students, providing an opportunity to attract global talent. Following this, Dr. Tae-kyun Kwon of the Music and Audio Computing Lab at the Graduate School of Culture Technology presented a convergence art project based on musical artificial intelligence data, including a research demonstration in an anechoic chamber.

<Photo3. Participation in a music AI research demonstration>

Furthermore, a Climate Talk Concert program was organized under the leadership of the Graduate School of Green Growth and Sustainability, in connection with the theme of the APEC Youth STEM Collaborative Research: 'Youth-led STEM Solutions: Enhancing Climate Resilience.'

The program was planned and hosted by Dean Jiyong Eom. It provided a platform for young people to explore creative and practical STEM-based solutions to the climate crisis and seek opportunities for international cooperation.

<Photo4. Participation in Music AI Research Demonstration >

The program offered APEC youth researchers practical support for their research through special lectures and Q&A sessions on:

Interdisciplinary Research and Education in the Era of Climate Crisis (Dean Jiyong Eom)

Energy Transition Technology in the Carbon Neutral Era (Professor Jeongrak Son)

Policies for Energy System Change (Professor Jihyo Kim)

Carbon Neutral Bio-technology (Professor Gyeongrok Choi)

After the afternoon talk concert, Lee Jing Jing, a student from Brunei, shared her thoughts, saying, "The lectures by the four professors were very meaningful and insightful. I was able to think about energy transition plans to solve climate change from various perspectives."

Si-jong Kwak, Director of the KAIST Global Institute for Talented Education, stated, "I hope that young people from all over the world will directly experience KAIST's research areas and environment, expand their interest in KAIST, and continue to grow as outstanding talents in the fields of science and engineering."

KAIST President Kwang Hyung Lee said, "KAIST will be at the center of science and technology-based international cooperation and will spare no effort to support future talents in developing creative and practical problem-solving skills. I hope this event served as an opportunity for young people to understand the value of global cooperation and grow into future science leaders."

2025.08.11 View 72

KAIST’s Wearable Robot Design Wins ‘2025 Red Dot Award Best of the Best’

<Professor Hyunjoon Park, M.S. candidate Eun-ju Kang, prospective M.S. candidate Jae-seong Kim, undergraduate student Min-su Kim>

A team led by Professor Hyunjoon Park from the Department of Industrial Design won the ‘Best of the Best’ award at the 2025 Red Dot Design Awards, one of the world's top three design awards, for their 'Angel Robotics WSF1 VISION Concept.'

The design for the next-generation wearable robot for people with paraplegia successfully implements functionality, aesthetics, and social inclusion. This latest achievement follows the team's iF Design Award win for the WalkON Suit F1 prototype, which also won a gold medal at the Cybathlon last year. This marks consecutive wins at top-tier international design awards.

KAIST (President Kwang Hyung Lee) announced on the 8th of August that Move Lab, a research team led by Professor Hyunjoon Park from the Department of Industrial Design, won the 'Best of the Best' award in the Design Concept-Professional category at the prestigious '2025 Red Dot Design Awards' for their next-generation wearable robot design, the ‘Angel Robotics WSF1 VISION Concept.’

The German 'Red Dot Design Awards' is one of the world's most well-known design competitions. It is considered one of the world's top three design awards along with Germany’s iF Design Awards and America’s IDEA. The ‘Best of the Best’ award goes to the best design in a category and is reserved for a select few (within the top 1%) of all Red Dot Award winners.

Professor Hyunjoon Park’s team was honored with the ‘Best of the Best’ award for a user-friendly follow-up development of the ‘WalkON Suit F1 prototype,’ which won a gold medal at the 2024 Cybathlon and an iF Design Award in 2025.

<Figure 1. WSF1 Vision Concept Main Image>

This award-winning design is the result of industry-academic cooperation with Angel Robotics Inc., founded by Professor Kyoungchul Kong from the KAIST Department of Mechanical Engineering. It is a concept design that proposes a next-generation wearable robot (an ultra-personal mobility device) that can be used by people with paraplegia in their daily lives.

The research team focused on transforming Angel Robotics Inc.'s advanced engineering platform into an intuitive and emotional, user-centric experience, implementing a design solution that simultaneously possesses functionality, aesthetics, and social inclusion.

<Figure 2. WSF1 Vision Concept Full Exterior (Front View)>

The WSF1 VISION Concept includes innovative features implemented in Professor Kyoungchul Kong’s Exo Lab, such as:

An autonomous access function where the robot finds the user on its own.

A front-loading mechanism designed for the user to put it on alone while seated.

Multi-directional walking functionality realized through 12 powerful torque actuators and the latest control algorithms.

AI vision technology, along with a multi-visual display system that provides navigation and omnidirectional vision.

This provides users with a safer and more convenient mobility experience.

The strong yet elegant silhouette was achieved through a design process that pursued perfection in proportion, surfaces, and details not seen in existing wearable robots. In particular, the fabric cover that wraps around the entire thigh from the robot's hip joint is a stylish element that respects the wearer's self-esteem and individuality, like fashionable athletic wear. It also acts as a device for the wearer to psychologically feel safe in interacting with the robot and blending in with the general public. This presents a new aesthetic for wearable robots where function and form are harmonized.

<Figure 3. WSF1 Vision Concept's Operating Principle. It walks autonomously and is worn from the front while the user is seated.>

KAIST Professor Hyunjoon Park said of the award, "We are focusing on using technology, aesthetics, and human-centered innovation to present advanced technical solutions as easy, enjoyable, and cool experiences for users. Based on Angel Robotics Inc.'s vision of 'recreating human ability with technology,' the WSF1 VISION Concept aimed to break away from the traditional framework of wearable robots and deliver a design experience that adds dignity, independence, and new style to the user's life."

<Figure 4. WSF1 Vision Concept Detail Image>

A physical model of the WSF1 VISION Concept is scheduled to be unveiled in the Future Hall of the 2025 Gwangju Design Biennale from August 30 to November 2. The theme is 'Po-yong-ji-deok' (the virtue of inclusion), and it will showcase the role of design language in creating an inclusive future society.

<Figure 5. WSF1 Vision Concept: Image of a Person Wearing and Walking>

2025.08.09 View 96

KAIST Develops AI ‘MARIOH’ to Uncover and Reconstruct Hidden Multi-Entity Relationships

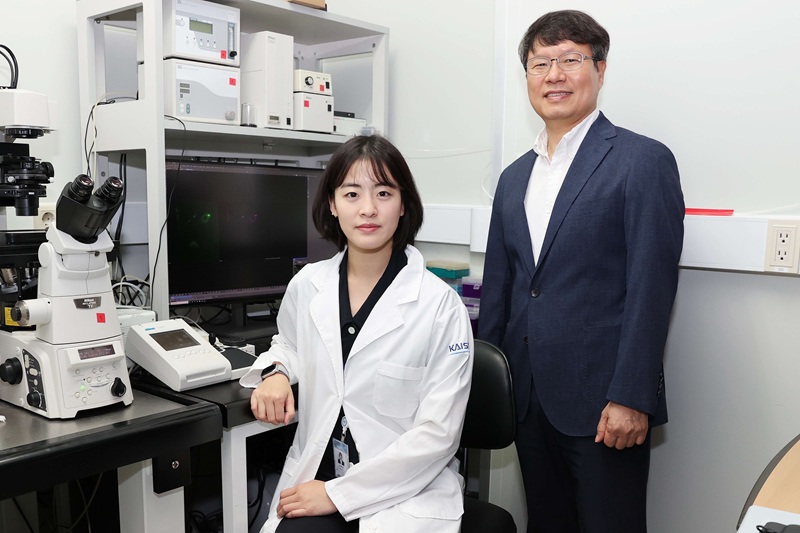

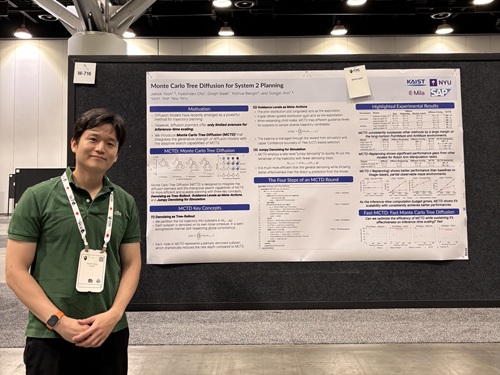

<(From Left) Professor Kijung Shin, Ph.D. candidate Kyuhan Lee, and Ph.D. candidate Geon Lee>

Just like when multiple people gather simultaneously in a meeting room, higher-order interactions—where many entities interact at once—occur across various fields and reflect the complexity of real-world relationships. However, due to technical limitations, in many fields, only low-order pairwise interactions between entities can be observed and collected, which results in the loss of full context and restricts practical use. KAIST researchers have developed the AI model “MARIOH,” which can accurately reconstruct* higher-order interactions from such low-order information, opening up innovative analytical possibilities in fields like social network analysis, neuroscience, and life sciences.

*Reconstruction: Estimating/reconstructing the original structure that has disappeared or was not observed.

KAIST (President Kwang Hyung Lee) announced on the 5th of August that Professor Kijung Shin’s research team at the Kim Jaechul Graduate School of AI has developed an AI technology called “MARIOH” (Multiplicity-Aware Hypergraph Reconstruction), which can reconstruct higher-order interaction structures with high accuracy using only low-order interaction data.

Reconstructing higher-order interactions is challenging because a vast number of higher-order interactions can arise from the same low-order structure.

The key idea behind MARIOH, developed by the research team, is to utilize multiplicity information of low-order interactions to drastically reduce the number of candidate higher-order interactions that could stem from a given structure.

In addition, by employing efficient search techniques, MARIOH quickly identifies promising interaction candidates and uses multiplicity-based deep learning to accurately predict the likelihood that each candidate represents an actual higher-order interaction.
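To make the role of multiplicity concrete, the following is a minimal sketch and not the research team's implementation. It assumes that the low-order data is obtained by counting how often each pair of entities appears together in a group, and all function and variable names (`project`, `fits_pairs`, `fits_multiplicity`) are hypothetical. It shows that several different sets of group interactions produce the same set of pairs, while fewer of them also reproduce the observed pair counts.

```python
from collections import Counter
from itertools import combinations


def project(hyperedges):
    """Expand each group into its pairs and count how often each pair co-occurs."""
    multiplicity = Counter()
    for group in hyperedges:
        for pair in combinations(sorted(group), 2):
            multiplicity[pair] += 1
    return multiplicity


# Hypothetical ground truth: one three-way meeting plus one two-way meeting.
true_groups = [{"A", "B", "C"}, {"A", "B"}]
observed = project(true_groups)  # pair counts: the only data assumed to be visible

# Candidate reconstructions that all yield the same triangle of pairs.
candidates = {
    "one triple": [{"A", "B", "C"}],
    "triple plus pair": [{"A", "B", "C"}, {"A", "B"}],
    "three pairs": [{"A", "B"}, {"A", "C"}, {"B", "C"}],
    "four pairs": [{"A", "B"}, {"A", "B"}, {"A", "C"}, {"B", "C"}],
}

# Without multiplicities, every candidate explains the observed pairs.
fits_pairs = [
    name for name, groups in candidates.items()
    if set(project(groups)) == set(observed)
]

# With multiplicities, only candidates whose pair counts match remain.
fits_multiplicity = [
    name for name, groups in candidates.items()
    if project(groups) == observed
]

print(fits_pairs)
print(fits_multiplicity)
```

In this toy case the pair counts rule out two of the four candidates but still leave two, which is the kind of remaining ambiguity that, according to the article, MARIOH resolves with its search techniques and learned likelihood scores.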

<Figure 1. An example of recovering high-dimensional relationships (right) from low-dimensional paper co-authorship relationships (left) with 100% accuracy, using MARIOH technology.>

Through experiments on ten diverse real-world datasets, the research team showed that MARIOH reconstructed higher-order interactions with up to 74% greater accuracy compared to existing methods.

For instance, in a dataset on co-authorship relations (source: DBLP), MARIOH achieved a reconstruction accuracy of over 98%, significantly outperforming existing methods, which reached only about 86%. Furthermore, leveraging the reconstructed higher-order structures led to improved performance in downstream tasks, including prediction and classification.

According to Professor Kijung Shin, “MARIOH moves beyond existing approaches that rely solely on simplified connection information, enabling precise analysis of the complex interconnections found in the real world.” Professor Shin added, “It has broad potential applications in fields such as social network analysis for group chats or collaborative networks, life sciences for studying protein complexes or gene interactions, and neuroscience for tracking simultaneous activity across multiple brain regions.”

The research was conducted by Kyuhan Lee (Integrated M.S.–Ph.D. program at the Kim Jaechul Graduate School of AI at KAIST; currently a software engineer at GraphAI), Geon Lee (Integrated M.S.–Ph.D. program at KAIST), and Professor Kijung Shin. It was presented at the 41st IEEE International Conference on Data Engineering (IEEE ICDE), held in Hong Kong this past May.

※ Paper title: MARIOH: Multiplicity-Aware Hypergraph Reconstruction

※ DOI: https://doi.ieeecomputersociety.org/10.1109/ICDE65448.2025.00233

<Figure 2. An example of the process of recovering high-dimensional relationships using MARIOH technology>

This research was supported by the Institute of Information & Communications Technology Planning & Evaluation (IITP) through the project “EntireDB2AI: Foundational technologies and software for deep representation learning and prediction using complete relational databases,” as well as by the National Research Foundation of Korea through the project “Graph Foundation Model: Graph-based machine learning applicable across various modalities and domains.”

2025.08.05 View 292

Immune Signals Directly Modulate Brain's Emotional Circuits: Unraveling the Mechanism Behind Anxiety-Inducing Behaviors

KAIST's Department of Brain and Cognitive Sciences, led by Professor Jeong-Tae Kwon, has collaborated with MIT and Harvard Medical School to make a groundbreaking discovery. Their joint research is the first in the world to reveal that cytokines released during immune responses directly influence the brain's emotional circuits to regulate anxiety behavior.

The study provided experimental evidence for a bidirectional regulatory mechanism: inflammatory cytokines IL-17A and IL-17C act on specific neurons in the amygdala, a region known for emotional regulation, increasing their excitability and consequently inducing anxiety. Conversely, the anti-inflammatory cytokine IL-10 was found to suppress excitability in these very same neurons, thereby contributing to anxiety alleviation.

In a mouse model, the research team observed that while skin inflammation was mitigated by immunotherapy (IL-17RA antibody), anxiety levels paradoxically rose. This was attributed to elevated circulating IL-17 family cytokines leading to the overactivation of amygdala neurons.

Key finding: Inflammatory cytokines IL-17A/17C promote anxiety by acting on excitable amygdala neurons (via IL-17RA/RE receptors), whereas anti-inflammatory cytokine IL-10 alleviates anxiety by suppressing excitability through IL-10RA receptors on the same neurons.

The researchers further elucidated that the anti-inflammatory cytokine IL-10 works to reduce the excitability of these amygdala neurons, thereby mitigating anxiety responses.

This research marks the first instance of demonstrating that immune responses, such as infections or inflammation, directly impact emotional regulation at the level of brain circuits, extending beyond simple physical reactions. This is a profoundly significant achievement, as it proposes a crucial biological mechanism that interlinks immunity, emotion, and behavior through identical neurons within the brain.

The findings of this research were published in the esteemed international journal Cell on April 17th of this year.

Paper Information:

Title: Inflammatory and anti-inflammatory cytokines bidirectionally modulate amygdala circuits regulating anxiety

Journal: Cell (Vol. 188, 2190–2220), April 17, 2025

DOI: https://doi.org/10.1016/j.cell.2025.03.005

Corresponding Authors: Professor Gloria Choi (MIT), Professor Jun R. Huh (Harvard Medical School)

2025.07.24 View 462

KAIST Team Develops Optogenetic Platform for Spatiotemporal Control of Protein and mRNA Storage and Release

<Dr. Chaeyeon Lee, Professor Won Do Heo from the Department of Biological Sciences>

A KAIST research team led by Professor Won Do Heo (Department of Biological Sciences) has developed an optogenetic platform, RELISR (REversible LIght-induced Store and Release), that enables precise spatiotemporal control over the storage and release of proteins and mRNAs in living cells and animals.

Traditional optogenetic condensate systems have been limited by their reliance on non-specific multivalent interactions, which can lead to unintended sequestration or release of endogenous molecules. RELISR overcomes these limitations by employing highly specific protein–protein (nanobody–antigen) and protein–RNA (MCP–MS2) interactions, enabling the selective and reversible compartmentalization of target proteins or mRNAs within engineered, membrane-less condensates.

In the dark, RELISR stably sequesters target molecules within condensates, physically isolating them from the cellular environment. Upon blue light stimulation, the condensates rapidly dissolve, releasing the stored proteins or mRNAs, which immediately regain their cellular functions or translational competency. This allows for reversible and rapid modulation of molecular activities in response to optical cues.

< Figure 1. Overview of the Artificial Condensate System (RELISR). The artificial condensate system, RELISR, includes "Protein-RELISR" for storing proteins and "mRNA-RELISR" for storing mRNA. These artificial condensates can be disassembled by blue light irradiation and reassembled in a dark state>

The research team demonstrated that RELISR enables temporal and spatial regulation of protein activity and mRNA translation in various cell types, including cultured neurons and mouse liver tissue. Comparative studies showed that RELISR provides more robust and reversible control of translation than previous systems based on spatial translocation.

While previous optogenetic systems such as LARIAT (Lee et al., Nature Methods, 2014) and mRNA-LARIAT (Kim et al., Nat. Cell Biol., 2019) enabled the selective sequestration of proteins or mRNAs into membrane-less condensates in response to light, they were primarily limited to the trapping phase. The RELISR platform introduced in this study establishes a new paradigm by enabling both the targeted storage of proteins and mRNAs and their rapid, light-triggered release. This approach allows researchers to not only confine molecular function on demand, but also to restore activity with precise temporal control.

< Figure 2. Cell shape change using the artificial condensate system (RELISR). A target protein, Vav2, which contributes to cell shape, was stored within the artificial condensate and then released after light irradiation. This release activated the target protein Vav2, causing a change in cell shape. It was confirmed that the storage, release, and activation of various proteins were effectively achieved>

Professor Heo stated, “RELISR is a versatile optogenetic tool that enables the precise control of protein and mRNA function at defined times and locations in living systems. We anticipate this platform will be broadly applicable for studies of cell signaling, neural circuits, and therapeutic development. Furthermore, the combination of RELISR with genome editing or tissue-targeted delivery could further expand its utility for molecular medicine.”

< Figure 3. Expression of a target mRNA using the artificial condensate system (RELISR) in mice. The genetic material for the artificial condensate system, RELISR, was injected into a living mouse. Using this system, a target mRNA was stored within the mouse's liver. Upon light irradiation, the mRNA was released, which induced the translation of a luminescent protein>

This research was conducted by first author Dr. Chaeyeon Lee, under the supervision of Professor Heo, with contributions from Dr. Daseuli Yu (co-corresponding author) and Professor YongKeun Park (co-corresponding author, Department of Physics), whose group performed quantitative imaging analyses of biophysical changes induced by RELISR in cells.

The findings were published in Nature Communications (July 7, 2025; DOI: 10.1038/s41467-025-61322-y). This work was supported by the Samsung Future Technology Foundation and the National Research Foundation of Korea.

2025.07.23 View 343

Why Do Plants Attack Themselves? The Secret of Genetic Conflict Revealed

<Professor Ji-Joon Song of the KAIST Department of Biological Sciences>

Plants, with their unique immune systems, sometimes launch 'autoimmune responses' by mistaking their own protein structures for pathogens. In particular, 'hybrid necrosis,' in which the offspring of crosses between different varieties fail to grow healthily and die, has long been a challenge for botanists and agricultural researchers. In response, an international research team has elucidated the mechanism that induces plant autoimmune responses and proposed a novel strategy for cultivar improvement that can predict and avoid these reactions.

Professor Ji-Joon Song's research team at KAIST, in collaboration with teams from the National University of Singapore (NUS) and the University of Oxford, announced on the 21st of July that they have elucidated the structure and function of the 'DM3' protein complex, which triggers plant autoimmune responses, using cryo-electron microscopy (Cryo-EM) technology.

This research is drawing attention because it identifies defects in protein structure as the cause of hybrid necrosis, which arises from an abnormal reaction of immune receptors when different plant varieties are crossed.

DM3 is an enzyme normally involved in the plant's immune response, but problems arise when its structure is damaged in a specific protein combination called 'DANGEROUS MIX (DM)'.

Notably, one variant, 'DM3Col-0', forms a stable complex of six proteins and is recognized as normal, so it does not trigger an immune response. In contrast, in another variant, 'DM3Hh-0', the six proteins bind improperly, causing the plant to recognize the complex as an 'abnormal state' and trigger an immune alarm, leading to autoimmunity.

The research team visualized this structure using atomic-resolution cryo-electron microscopy (Cryo-EM) and revealed that the immune-inducing ability is not due to the enzymatic function of the DM3 protein, but rather to 'differences in protein binding affinity.'

<Figure 1. Mechanism of Plant Autoimmunity Triggered by the Collapse of the DM3 Protein Complex>

This demonstrates that plants can initiate an immune response by recognizing not only 'external pathogens' but also 'internal protein structures' when they undergo abnormal changes, treating them as if they were pathogens.

The study shows how sensitively the plant immune system changes and triggers autoimmune responses when genes are mixed and protein structures change during the cross-breeding of different plant varieties. It significantly advances the understanding of genetic incompatibility that can occur during natural cross-breeding and cultivar improvement.

Dr. Gijeong Kim, the co-first author, stated, "Through international research collaboration, we presented a new perspective on understanding the plant immune system by leveraging the autoimmune phenomenon, completing a high-quality study that encompasses structural biochemistry, genetics, and cell biological experiments."

Professor Ji-Joon Song of the KAIST Department of Biological Sciences, who led the research, said, "The fact that the immune system can detect not only external pathogens but also structural abnormalities in its own proteins will set a new standard for plant biotechnology and crop breeding strategies. Cryo-electron microscopy-based structural analysis will be an important tool for understanding the essence of gene interactions."

This research was published on July 17th in Molecular Cell, a sister journal of the international academic journal Cell. Professor Ji-Joon Song and Professor Eunyoung Chae of the University of Oxford are co-corresponding authors; Dr. Gijeong Kim (currently a postdoctoral researcher at the University of Zurich) and Dr. Wei-Lin Wan of the National University of Singapore are co-first authors; and Ph.D. candidate Nayun Kim is the second author.

This research was supported by the KAIST Grand Challenge 30 project.

Article Title: Structural determinants of DANGEROUS MIX 3, an alpha/beta hydrolase that triggers NLR-mediated genetic incompatibility in plants

DOI: https://doi.org/10.1016/j.molcel.2025.06.021

2025.07.21 View 505
